curl http://search.twitter.com/search.json?q=%23cats

“The Twitter REST API v1 is no longer active. Please migrate to API v1.1. https://dev.twitter.com/docs/api/1.1/overview”
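For reference, a v1.1 search request goes to `https://api.twitter.com/1.1/search/tweets.json`, and unlike the old v1 URL it has to be authenticated. The Ruby sketch below assumes an application-only bearer token stored in a hypothetical `TWITTER_BEARER_TOKEN` environment variable. It also shows why the query above is written `%23cats`: an unencoded `#` starts the URL fragment, so the hashtag would never reach the server. Twitter's API has changed again since v1.1, so treat this as an illustration of the request shape, not a working client.

```ruby
require "net/http"
require "uri"
require "json"

# v1.1 search endpoint; it needs authentication, unlike the old v1 URL above.
SEARCH_ENDPOINT = "https://api.twitter.com/1.1/search/tweets.json"

# Build the request URL. encode_www_form percent-encodes "#" as %23 and spaces as "+".
def search_url(query)
  URI("#{SEARCH_ENDPOINT}?#{URI.encode_www_form("q" => query)}")
end

# Authenticated GET. Assumption: an application-only bearer token is stored in
# the hypothetical TWITTER_BEARER_TOKEN environment variable.
def search(query, token = ENV.fetch("TWITTER_BEARER_TOKEN"))
  uri = search_url(query)
  request = Net::HTTP::Get.new(uri)
  request["Authorization"] = "Bearer #{token}"
  response = Net::HTTP.start(uri.host, uri.port, use_ssl: true) do |http|
    http.request(request)
  end
  JSON.parse(response.body)
end
```

With a valid token, `search("#cats")["statuses"]` would hold the matching Tweets, although this endpoint may no longer accept requests.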

This method was used to fix the tweetcurl series of Max patches and anything else which uses Tom Igoe’s method of sending tweets from Arduino via cosm.

http://www.tigoe.com/pcomp/code/arduinowiring/1135/ (old method)

The automatic Twitter trigger used in the “internet sensors” project via cosm stopped working when cosm migrated to xively.com. Sending data from a device (or Max) to xively still works correctly, so you don’t need to change anything except the xively trigger associated with the feed.

The fix is to go into your xively feed (legacy feed), delete the existing trigger, and set up a new trigger using zapier, following the instructions in this tutorial:

https://xively.com/dev/tutorials/zapier/

Notes:

If you really want to send Tweets from Max, check out the Twitter client that uses ruby: https://reactivemusic.net/?p=7013

A series of projects that use Internet APIs for interactive media.

updated 2/14/2021.

Projects have been tested on Max8 and Mac OS Catalina, except where noted. Other dependencies are listed on individual project pages.

My goal is to show a variety of methods to get data to and from Max. APIs come and go, as do the libraries that support them.

internet-sensors is on Github at: https://github.com/tkzic/internet-sensors

Each project is in a separate folder.

Some projects require passwords and API-keys from providers.

For example, for the ‘Twitter streaming API in Max’ project you’ll need to set up a Twitter application from your account to get authorization credentials.

For projects that need authorization, you’ll usually just need to modify the patches/source code with your user information, as directed in the instructions. The API keys embedded in the code will not work unless specifically mentioned, as with the Google speech API.

APIs used in the projects change fairly often, so there’s no guarantee they’ll work. If you find problems or have ideas, please post them to the GitHub repository, or email me at [email protected].

1. Twitter streaming API in Max (FM, php, curl, geocoding, [aka.speech], Soundflower (optional), Morse code, OSC, data recorder, Twitter v1.1 API, Twitter Apps, Oauth)

https://reactivemusic.net/?p=5786

2. Sending tweets from Max using curl ([sprintf], [aka.shell], xively.com API, zapier.com API, JSON, javascript, Twitter v1.1 API, Oauth)

deprecated 2/11/2021 – old project link here: https://reactivemusic.net/?p=5447

New! – use the project above to send tweets using a Fisher Price “Little Tikes” piano: https://reactivemusic.net/?p=6993

4. Speech to text in Max (Google speech API, JSON, javascript, sox, Twitter v1.1 API, Oauth)

Note: Send Tweets using speech as well.

https://reactivemusic.net/?p=4690

5. A conversation with a robot in Max (Google speech API, sox, JSON, pandorabots API, python, [aka.speech])

https://reactivemusic.net/?p=9834

7. Playing bird calls in Max (xeno-canto API, [jit.uldl], [jit.qt.movie])

https://reactivemusic.net/?p=4225

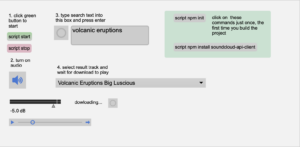

8. Soundcloud API in Max (node.js)

https://reactivemusic.net/?p=20120

9. Real time train map using Max and node.js (XML, JSON, OSC, data recorder, web sockets, Irish Rail API)

https://reactivemusic.net/?p=5477

10. Stock market music in Max (OSC, netcat, php, mysql, html, javascript, Yahoo API, linux)

…updates in progress…

https://reactivemusic.net/?p=12029

11. Using weather forecast data to drive weather sounds in Pure Data (ruby, OSC, JSON, openweathermap API, “Designing Sound” by Andy Farnell)

https://reactivemusic.net/?p=5846

… updates in progress…

12. Using ping times to control oscillators in Max (Mashape ping-uin API, ruby, OSC, JSON)

https://reactivemusic.net/?p=5945

13. Spotify segment analysis player – sonification of audio analysis data from the Spotify (Echo Nest) API (node, Max/MSP)

https://reactivemusic.net/?p=20096

14. Quadcopter AR_drone – Fly a quadcopter using Max, with streaming Web video (node.js, AR_drone, Google Chrome, OSC, Max/MSP)

deprecated 2/14/2021 – old project link: https://reactivemusic.net/?p=6635

15. Adding markers to Google Maps in Max (node.js, ruby, Google Chrome, OSC, Max/MSP, websockets, Google Maps API, jQuery, javascript)

deprecated 2/14/2021 – old project link: https://reactivemusic.net/?p=11412

16. Max data recorder – Record and play back streams of data simultaneously at various rates

https://reactivemusic.net/?p=8053

17. MBTA bus data in Max – Sonification of Mass Ave buses, from Harvard to Dudley

… updates in progress…

https://reactivemusic.net/?p=17524
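The data recorder in project 16 records streams of data and plays them back at various rates. As a rough illustration of that idea, and not the Max patch itself, here is a minimal Ruby sketch: each value is stored with its offset in seconds from the first recorded value, and playback divides those offsets by a rate, so a rate of 2.0 replays twice as fast. The `DataRecorder` class and its methods are hypothetical names.

```ruby
# Hypothetical recorder: stores [seconds_since_first_value, value] pairs.
class DataRecorder
  attr_reader :events

  def initialize
    @events = []
    @start = nil
  end

  # Record a value with its time offset (seconds) from the first recorded value.
  def record(value, now = Process.clock_gettime(Process::CLOCK_MONOTONIC))
    @start ||= now
    @events << [now - @start, value]
  end

  # Playback schedule at a given rate: 2.0 plays twice as fast, 0.5 half as fast.
  def schedule(rate = 1.0)
    raise ArgumentError, "rate must be positive" unless rate.positive?
    @events.map { |t, value| [t / rate, value] }
  end

  # Replay in real time, yielding each value when its scheduled time arrives.
  def play(rate = 1.0)
    start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
    schedule(rate).each do |t, value|
      delay = start + t - Process.clock_gettime(Process::CLOCK_MONOTONIC)
      sleep(delay) if delay.positive?
      yield value
    end
  end
end
```

Running several recorders, each on its own thread, would be one way to play back multiple streams simultaneously.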

This is a ruby version of the Max tweetCurl5 patch (which tweets via xively.com) described here:

https://reactivemusic.net/?p=5447

In this version, the Max patch communicates via OSC to a background server running in ruby. An advantage of this method is that both the patch and the server are compact and easy to understand. The Max patch does things in a Max way. And likewise with the ruby script.
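The ruby script itself isn't reproduced here, but the OSC side of such a server can be sketched with Ruby's standard library alone. This sketch hand-rolls OSC rather than using a gem, and assumes Max sends with something like [udpsend 127.0.0.1 9000]; the port is a hypothetical choice, and the real script's message names and port may differ. It handles int32 (`i`), float32 (`f`) and string (`s`) arguments, with strings NUL-terminated and padded to a multiple of four bytes as OSC 1.0 requires.

```ruby
require "socket"

# Pad binary data with NUL bytes to a multiple of 4 (OSC alignment rule).
def osc_pad(bytes)
  bytes + ("\0" * ((4 - bytes.bytesize % 4) % 4))
end

# OSC strings are NUL-terminated, then padded to a 4-byte boundary.
def osc_string(str)
  osc_pad(str.b + "\0")
end

# Encode an OSC message whose arguments are Integers, Floats or Strings.
def osc_encode(address, *args)
  tags = +","
  payload = "".b
  args.each do |arg|
    case arg
    when Integer
      tags << "i"
      payload << [arg].pack("l>")  # int32, big-endian
    when Float
      tags << "f"
      payload << [arg].pack("g")   # float32, big-endian
    when String
      tags << "s"
      payload << osc_string(arg)
    else
      raise ArgumentError, "unsupported OSC argument: #{arg.inspect}"
    end
  end
  osc_string(address) + osc_string(tags) + payload
end

# Read one padded string at offset; return [string, offset of the next field].
def osc_read_string(data, offset)
  stop = data.index("\0", offset)
  [data[offset...stop].force_encoding("UTF-8"), offset + ((stop - offset) / 4 + 1) * 4]
end

# Decode an OSC message into [address, [arguments...]].
def osc_decode(data)
  data = data.b
  address, pos = osc_read_string(data, 0)
  tags, pos = osc_read_string(data, pos)
  args = tags.delete_prefix(",").each_char.map do |tag|
    case tag
    when "i"
      value = data[pos, 4].unpack1("l>")
      pos += 4
      value
    when "f"
      value = data[pos, 4].unpack1("g")
      pos += 4
      value
    when "s"
      value, pos = osc_read_string(data, pos)
      value
    else
      raise ArgumentError, "unsupported OSC type tag: #{tag}"
    end
  end
  [address, args]
end

# Hypothetical listener; port 9000 is an assumption and must match [udpsend] in Max.
def serve(port = 9000)
  socket = UDPSocket.new
  socket.bind("127.0.0.1", port)
  loop do
    data, _sender = socket.recvfrom(65_536)
    address, args = osc_decode(data)
    puts "#{address} #{args.inspect}"  # e.g. the point where a tweet would be sent
  end
end
```

Calling `serve` at the end of the script starts the listener. Each incoming message is printed, which is where the code that posts the tweet would take over.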

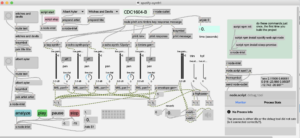

Here’s a screen shot of the Max patch:

Max

ruby

The ruby script requires installation of the following gems

For example:

# gem install patron

# ./ruby-max-tweet.rb

The files for this project can be downloaded from the internet-sensors archive on GitHub.

World map and radio simulation

This program is broken on Mac OS Monterey: the PHP code throws errors in the OSC library. I’m not certain there is a reasonable workaround at this point, and I will be looking at replacing the php code with node.js or another more reliable platform. Also, as noted below, php is no longer installed in Mac OS, so it requires homebrew or macports.

Compare to satellite photo of earth – note the pattern of lights.

https://github.com/tkzic/internet-sensors

folder: twitter-stream

Note: starting with Mac OS Monterey, php is no longer included in Mac OS. You can install it with homebrew. See this post: https://www.ergonis.com/products/tips/install-php-on-macos.php

[note] The project displays Tweets without these externals, but you won’t hear any speech

In addition to having a Twitter account, you will need to set up a Twitter application from the developer site here:

Good instructions on how to do this can be found in this stackoverflow.com post under this heading: So you want to use the Twitter v1.1 API?

When you get to step 5 in the instructions, instead of writing your own code, just use a text editor to copy your access tokens into this php program, which is provided:

Replace the strings in this line of code by copying and pasting the appropriate ones from your Twitter application:

$t->login('consumer_key', 'consumer secret', 'access token', 'access secret');

So it will end up looking something like this:

$t->login('ZdzfNaeflihFydfOHeOA', 'eXzUOfhif4riifgRbCTnnSN0T7neYtg8dIWDC7j3bs', '205589709-5kRI1fllJvU94jjffeerSn9LrTajtxSrvO8', 'u5MuSxPseBemUIBWlMxEFaw899feedXA0eHlReCnQ');

Yeah – it’s cryptic…

1. open the Max Patch: world3.maxpat

2. in a terminal window run the php program: ctwitter_max3.php. [note] it runs forever. Press <ctrl-c> when you want to stop streaming Tweets.

php ./ctwitter_max3.php

3. Switch back to world3.maxpat to see dots populating the map

4. In Max, press the speaker icon (lower left) to turn on audio.

5. Activate voice synth/morse code using the blue toggle (lower left)

6. Clear the map by pressing the blue message box: “clear, drawpict a 0 0”

7. Stop the Tweet stream by pressing <ctrl-c> in the terminal window

If you have Soundflower installed, the Mac OS speech synth output can be routed back to Max for audio processing. This is somewhat complicated, but shows how to process audio in Max from other sources.

The built-in data recorder/playback is on the left side of world3.maxpat:


<span style="font-family: 'Helvetica Neue', Helvetica, Helvetica, Arial, sans-serif; font-size: 23px; font-weight: bold; line-height: 1.1;">revision history</span>


Updates for Max8 and Catalina:

Replaced [aka.speech] external with Jeremy Bernstein’s [shell] external and the Mac OS command line ‘say’ command.

Reinstalled Java Development Kit for [mxj] object

I revised the php code for the Twitter streaming project, to use the coordinates of a corner of the city polygon bounding box. That seems to be more reliable than the geo coordinates which are absent from most Tweets.
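In the v1.1 JSON, an exact location (when present) is a GeoJSON point in `coordinates`, longitude first, while `place.bounding_box.coordinates` holds a polygon as a list of rings of `[longitude, latitude]` pairs. A minimal Ruby sketch of the fallback described above might look like this; `tweet_lat_lon` is a hypothetical name, and the php program may pick a different corner.

```ruby
require "json"

# Return [lat, lon] for a parsed v1.1 Tweet, or nil when it carries no location.
# Hypothetical helper; both GeoJSON fields below store longitude first.
def tweet_lat_lon(tweet)
  # Exact location, when the user shared one: {"type": "Point", "coordinates": [lon, lat]}
  point = tweet.dig("coordinates", "coordinates")
  return [point[1], point[0]] if point

  # Otherwise use the first corner of the place's bounding-box polygon.
  corner = tweet.dig("place", "bounding_box", "coordinates", 0, 0)
  return [corner[1], corner[0]] if corner

  nil
end
```

The coordinates in the usage below are made-up illustrative values: a Tweet with only a bounding box resolves to that box's first corner.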

Here’s a basic pattern:

From Max, there are many ways to do this:

by Gokce Kinayoglu

http://cycling74.com/toolbox/searchtweet-design-patches-that-respond-to-twitter-posts/

This patch demonstrates the Twitter search API. It’s self-contained within Max, using the [mxj search tweet] external. This object allows you to input

The response is:

This patch is a great way to get Tweets into Max. You can use a [metro] object to poll the API. There are no additional programs running outside of Max.

The limitation is lack of flexibility: you don’t have access to any of the other parameters in the response, for example geographic data. Also, it can be difficult to install and maintain java [mxj] programs in Max.

Here’s a screenshot of a patch that takes the output of the above patch and sends it to the aka.speech object, which runs the Mac OS built-in text-to-speech program.

A variation on the Twitter mood-lamp program.

note 6/2014 – this may not work due to changes in oauth

local files:

It grabs a ‘feed’ or any URL that returns a bunch of text. Then it does some analysis on the text, and uses the results to send RGB data back to Max using OSC.

An example of using the twitter4j library in Processing to do a Twitter search and display the results on a world map.

local file is in: documents/processing/Processing_Twitter

More information about twitter4j in processing:

https://forum.processing.org/topic/json-quiry-twitter

https://forum.processing.org/topic/twitter-streaming

I’ve revised the php program that streams Tweets and sends them to Max, to remove hyperlinks, RT indicators, user mentions, and ascii art. Now it works better with text-to-speech.
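The php changes themselves aren't shown here. As a rough Ruby equivalent, assuming simple regular expressions are good enough, this sketch drops links, `RT` markers and `@mentions`, and treats any run of three or more symbols as ASCII art. The `clean_for_speech` name and the three-symbol threshold are hypothetical choices; hashtags are left alone.

```ruby
# Hypothetical cleanup rules, roughly following the list above.
def clean_for_speech(text)
  t = text.gsub(%r{https?://\S+}, "")          # hyperlinks
  t = t.gsub(/\bRT\b:?/, "")                   # retweet indicators
  t = t.gsub(/@\w+:?/, "")                     # user mentions
  t = t.gsub(/[^\p{L}\p{N}\s.,!?']{3,}/, "")   # runs of 3+ symbols, treated as ASCII art
  t.gsub(/\s+/, " ").strip                     # collapse leftover whitespace
end
```

For example, `clean_for_speech("RT @roveg: Window Seat #photo #cats http://t.co/sf0fHWEX2S")` leaves just `"Window Seat #photo #cats"` for the speech synth.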

Things that could be done in a future project:

from https://dev.twitter.com/docs/streaming-apis

This diagram shows the process of handling a stream, using a data store as an intermediary.
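The diagram isn't reproduced here, but the pattern can be sketched in a few lines of Ruby. In this sketch an in-memory `Queue` stands in for the data store: one thread only collects raw lines from the stream, and another parses and handles them at its own pace, so slow work such as geocoding or speech doesn't hold up reading. `run_pipeline` is a hypothetical name, and the input is assumed to be one JSON object per line, with blank lines as keep-alives.

```ruby
require "json"

# In-memory stand-in for the data store between the stream reader and the processor.
def run_pipeline(raw_lines)
  store = Queue.new
  results = []

  # Collector: accept raw stream lines as they arrive, skipping keep-alive blanks.
  collector = Thread.new do
    raw_lines.each { |line| store << line unless line.strip.empty? }
    store << :done
  end

  # Processor: parse and handle each stored line at its own pace.
  processor = Thread.new do
    while (line = store.pop) != :done
      tweet = JSON.parse(line)
      results << tweet["text"]
    end
  end

  [collector, processor].each(&:join)
  results
end
```

In a real setup the collector would read from the streaming connection, and the store could be a file or database so the two halves can run as separate processes.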

The JSON response breaks out various components of the Tweet, like hashtags and URLs, but it doesn’t provide a clean version of the text, which could, for example, be converted to speech.

Here’s a sample response which shows all the fields:

{
  "created_at": "Sat Feb 23 01:30:55 +0000 2013",
  "id": 305127564538691584,
  "id_str": "305127564538691584",
  "text": "Window Seat #photo #cats http:\/\/t.co\/sf0fHWEX2S",
  "source": "\u003ca href=\"http:\/\/www.echofon.com\/\" rel=\"nofollow\"\u003eEchofon\u003c\/a\u003e",
  "truncated": false,
  "in_reply_to_status_id": null,
  "in_reply_to_status_id_str": null,
  "in_reply_to_user_id": null,
  "in_reply_to_user_id_str": null,
  "in_reply_to_screen_name": null,
  "user": {
    "id": 19079184,
    "id_str": "19079184",
    "name": "theRobot Vegetable",
    "screen_name": "roveg",
    "location": "",
    "url": "http:\/\/south-fork.org\/",
    "description": "choose art, not life",
    "protected": false,
    "followers_count": 975,
    "friends_count": 454,
    "listed_count": 109,
    "created_at": "Fri Jan 16 18:38:11 +0000 2009",
    "favourites_count": 1018,
    "utc_offset": -39600,
    "time_zone": "International Date Line West",
    "geo_enabled": false,
    "verified": false,
    "statuses_count": 10888,
    "lang": "en",
    "contributors_enabled": false,
    "is_translator": false,
    "profile_background_color": "1A1B1F",
    "profile_background_image_url": "http:\/\/a0.twimg.com\/profile_background_images\/6824826\/BlogisattvasEtc.gif",
    "profile_background_image_url_https": "https:\/\/si0.twimg.com\/profile_background_images\/6824826\/BlogisattvasEtc.gif",
    "profile_background_tile": true,
    "profile_image_url": "http:\/\/a0.twimg.com\/profile_images\/266371487\/1roveggreen_normal.gif",
    "profile_image_url_https": "https:\/\/si0.twimg.com\/profile_images\/266371487\/1roveggreen_normal.gif",
    "profile_link_color": "2FC2EF",
    "profile_sidebar_border_color": "181A1E",
    "profile_sidebar_fill_color": "252429",
    "profile_text_color": "666666",
    "profile_use_background_image": false,
    "default_profile": false,
    "default_profile_image": false,
    "following": null,
    "follow_request_sent": null,
    "notifications": null
  },
  "geo": null,
  "coordinates": null,
  "place": null,
  "contributors": null,
  "retweet_count": 0,
  "entities": {
    "hashtags": [{
      "text": "photo",
      "indices": [12, 18]
    }, {
      "text": "cats",
      "indices": [19, 24]
    }],
    "urls": [{
      "url": "http:\/\/t.co\/sf0fHWEX2S",
      "expanded_url": "http:\/\/middle-fork.org\/?p=186",
      "display_url": "middle-fork.org\/?p=186",
      "indices": [25, 47]
    }],
    "user_mentions": []
  },
  "favorited": false,
  "retweeted": false,
  "possibly_sensitive": false,
  "filter_level": "medium"
}
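Because the `indices` in `entities` point into `text`, a clean version can be derived by cutting those ranges out, working from the end of the string so earlier indices stay valid. This is a minimal Ruby sketch, assuming the indices count characters (code points), which is how Ruby indexes a UTF-8 string. `strip_entities` is a hypothetical name, and by default it removes only URLs and user mentions.

```ruby
require "json"

# Cut the character ranges listed under the given entity kinds out of the text.
# Works from the end of the string backwards so earlier indices stay valid.
def strip_entities(tweet, kinds = %w[urls user_mentions])
  text = tweet["text"].dup
  spans = kinds.flat_map { |kind| tweet.dig("entities", kind) || [] }.map { |e| e["indices"] }
  spans.sort_by { |first, _last| -first }.each { |first, last| text[first...last] = "" }
  text.squeeze(" ").strip
end
```

Applied to the sample above, the default kinds turn the `text` field into `"Window Seat #photo #cats"`, and adding `"hashtags"` to the list leaves `"Window Seat"`.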