A project that uses WebSDR receivers as a remote alternative to a local receiver.

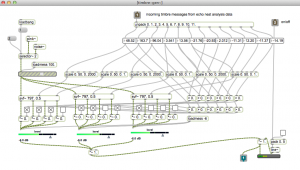

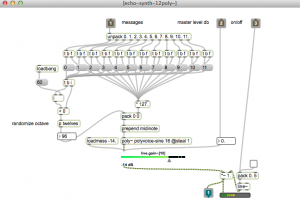

Demonstration of a Max/MSP program that connects an amateur radio transceiver to WebSDR: transmitting locally from Maine (USA) while receiving remotely using a radio in the Netherlands. The Max program reads the frequency from an Elecraft K4 transceiver and uses it to control the WebSDR sites. It also loads the remote receivers and controls audio routing, mode, filter, and waterfall display settings. An iPad running TouchOSC acts as a control panel. Up to 4 remote receivers operate at the same time. WebSDR is a remarkable system, developed by PA3FWM at http://websdr.org/. It lets you control remote receivers worldwide from your Web browser.

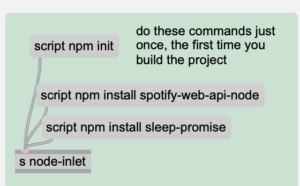

Components:

- Max/MSP

- WebSDR

- TouchOSC

- Elecraft K4 transceiver with antenna system

- SkookumLogger (logging software)

Max Patches:

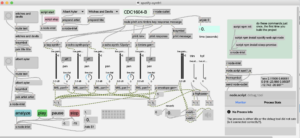

websdrjweb7.maxpat : main control program. Contains [jweb] objects for launching WebSDR instances, plus code for injecting JavaScript to control parameters like frequency, filter, and volume. This patch acts as an intermediary between TouchOSC and WebSDR, allows external MIDI control, and takes frequency input from CAT-controlled radios like the Elecraft K4.

websdrCATaudio.maxpat : handles serial port interaction for the K4. Also reads the audio stream from either the K4 receiver (via USB) or the WebSDR receiver (via BlackHole). I created an aggregate audio device called K4sdr to allow Max to read both devices at the same time. Audio switching and levels are handled using a Korg nanoKONTROL2, for example to switch between the audio streams or listen to both.
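To make the CAT step concrete, here is a minimal Python sketch of the frequency-reading logic. It is not the Max patch itself. It assumes the K4 answers a VFO A query with a Kenwood-style reply of the form `FA` followed by the frequency in Hz and a terminating semicolon, and that the remote receiver wants the frequency in kHz. The function names `parse_vfo_a_hz` and `hz_to_khz` are made up for this illustration.

```python
import re

# Assumed reply shape: "FA" + up to 11 digits (frequency in Hz) + ";"
_FA_REPLY = re.compile(r"FA(\d{1,11});")


def parse_vfo_a_hz(buffer):
    """Return the most recent VFO A frequency (Hz) found in a chunk of
    serial text, or None if no complete FA reply is present."""
    matches = _FA_REPLY.findall(buffer)
    return int(matches[-1]) if matches else None


def hz_to_khz(freq_hz):
    """Convert Hz to kHz (assumed unit for the remote receiver)."""
    return freq_hz / 1000.0


if __name__ == "__main__":
    reply = "FA00014025000;"  # example reply text, not captured from a radio
    hz = parse_vfo_a_hz(reply)
    print(hz, hz_to_khz(hz))
```

Taking the last match in the buffer means that if several replies arrive between polls, only the newest frequency is used.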

Optional: arduino-ptt-detect2.maxpat : reads serial data from an Arduino connected to the amplifier keying line to determine whether the radio is transmitting. While transmitting, audio switches back to the local stream to avoid the latency of hearing your own signal via WebSDR. See subsequent post about this setup…

TouchOSC

websdrCW3.touchOSC : controls all 4 WebSDR channels, i.e., volume, mute, filter, CW offset, and filter shift. It also handles window management, loading JS code, zooming WebSDR in and out, and selecting channel waterfall views or Max code views.

CW Offset

WebSDR doesn’t have a control for CW pitch offset. To stay in sync with the K4's frequency, WebSDR is run in LSB mode with a frequency offset equal to the CW pitch setting in the transceiver, e.g., 450 Hz. This works for most of the WebSDR sites, but unfortunately some of the SDRs are off-frequency. You can usually compensate by adjusting the CWfreqOffset for that channel (in Max or TouchOSC).

Setting the offset also requires shifting the filter so it is centered over the actual signal.
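The arithmetic can be sketched in a few lines of Python. This is an illustration under stated assumptions, not code from the Max patch. In LSB, the audio pitch is the dial frequency minus the signal frequency, so the sketch assumes the remote dial sits above the K4's CW frequency by the pitch. It also assumes the filter edges are expressed in Hz relative to the dial, negative on the lower sideband. The function name, the `site_correction_hz` parameter (standing in for a per-channel CWfreqOffset tweak), and the 500 Hz filter width are all hypothetical.

```python
def websdr_lsb_tuning(k4_freq_hz, cw_pitch_hz=450,
                      site_correction_hz=0, filter_width_hz=500):
    """Return (dial_hz, filter_lo_hz, filter_hi_hz) for an LSB receiver.

    dial_hz: remote dial frequency that puts a CW signal at k4_freq_hz
             at an audio pitch of cw_pitch_hz (assumes LSB demodulation).
    filter edges: offsets from the dial, centered on the signal, which
             sits at -cw_pitch_hz relative to the dial in LSB.
    """
    dial_hz = k4_freq_hz + cw_pitch_hz + site_correction_hz
    half = filter_width_hz / 2
    filter_lo_hz = -(cw_pitch_hz + half)
    filter_hi_hz = -(cw_pitch_hz - half)
    return dial_hz, filter_lo_hz, filter_hi_hz


if __name__ == "__main__":
    # K4 on 14.025 MHz, 450 Hz pitch, 500 Hz wide filter
    print(websdr_lsb_tuning(14_025_000))
    # Same signal on a site assumed to read 30 Hz high
    print(websdr_lsb_tuning(14_025_000, site_correction_hz=-30))
```

With a 450 Hz pitch and a 500 Hz filter, the passband runs from 700 Hz to 200 Hz below the dial, which keeps the signal in the middle of the filter.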

Files

This is currently a work in progress and is not available on GitHub. Local files are in the Max teaching examples folder.