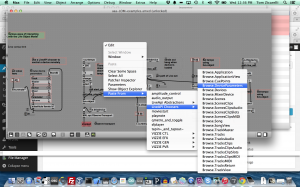

How to use the Max preset object inside of M4L.

There is some confusion about how to use Max presets in a M4L device. The method described here lets you save and recall presets with a device inside of a Live set, without additional files or dialog boxes. It uses pattrstorage. It works automatically with the Live UI objects.

It also works with other Max UI objects by connecting them to pattr objects.

It's based on an article by Gregory Taylor: https://cycling74.com/2011/05/19/max-for-live-tutorial-adding-pattr-presets-to-your-live-session/

Download

https://github.com/tkzic/max-for-live-projects

Folder: presets

Patch: aaa-preset3.amxd

How it works:

Instructions are included inside the patch. You will need to add objects and then set attributes for those objects in the inspector. For best results, set the inspector values right after adding each object.

Write the patch in this order:

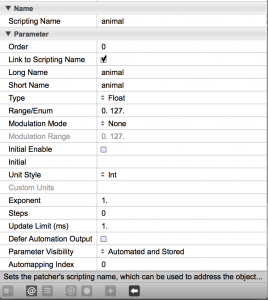

A1. Add Live UI objects.

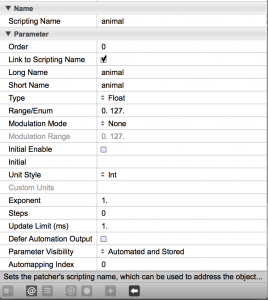

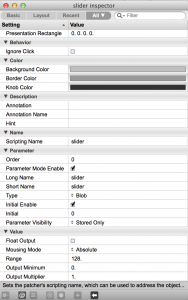

For each UI object:

- check Link to Scripting Name

- set the Long Name and Short Name to the actual name of the parameter

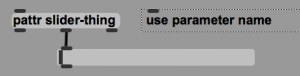

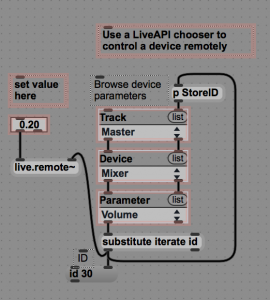

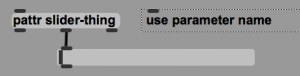

A2. (Optional) Add non-Live (i.e., Max) UI objects.

For each object, connect the middle outlet of a pattr object (with a parameter name as an argument) to the left inlet of the UI object. For example:

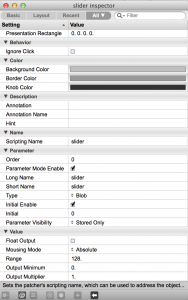

Then, in the inspector for each UI object:

- check parameter-mode-enable

- check initial-enable

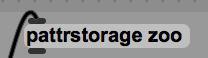

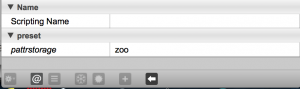

B. Add a pattrstorage object.

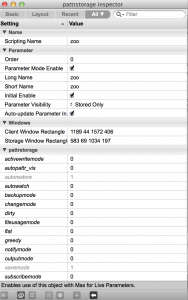

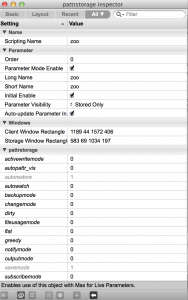

Give the object a name argument, for example: pattrstorage zoo. The name can be anything; it only has to match the name you assign to the preset object in step D. Then, in the inspector for pattrstorage:

- check parameter-mode-enable

- check Auto-update-parameter Initial-value

- check initial-value

- change short-name to match long name

C. Add an autopattr object.

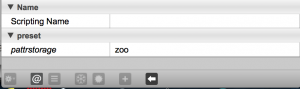

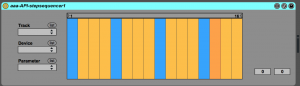

D. Add a preset object.

In the inspector for the preset object:

- assign the pattrstorage object name from step B (zoo) to the pattrstorage attribute

Notes

Preset numbers run from 1 to n. They can be sent directly to the pattrstorage object, for example if you want to recall presets from an external controller.
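
As a rough illustration of the controller idea, here is a small Python sketch that maps a 0-based MIDI program number onto the 1-based preset numbers. The helper name `program_to_preset` and the use of program-change messages are assumptions made for this example; they are not part of the patch, where the resulting number would simply be sent to pattrstorage.

```python
def program_to_preset(program: int, preset_count: int) -> int:
    """Map a 0-based MIDI program number to a 1-based preset number.

    Hypothetical helper for illustration only: programs beyond the last
    stored preset are clamped to the last preset.
    """
    if preset_count < 1:
        raise ValueError("preset_count must be at least 1")
    if not 0 <= program <= 127:
        raise ValueError("MIDI program numbers range from 0 to 127")
    # MIDI counts from 0, presets count from 1.
    return min(program + 1, preset_count)
```

Clamping is just one choice; ignoring out-of-range programs would work equally well.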

You can name the presets (slotnames). See the pattrstorage help file.

You can interpolate between presets. See the pattrstorage help file.
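
To give a feel for what interpolating between presets means, the Python sketch below blends two presets linearly. It is only a conceptual model: the dictionary layout and the function name `interpolate_presets` are assumptions for this example, and pattrstorage's actual interpolation behavior is described in its help file.

```python
def interpolate_presets(a: dict, b: dict, t: float) -> dict:
    """Linearly blend two presets: t=0.0 returns a's values, t=1.0 returns b's.

    Presets are modeled as {parameter_name: value} dictionaries (an
    assumption for this sketch). Parameters missing from either preset
    are skipped.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be between 0.0 and 1.0")
    return {
        name: a[name] + (b[name] - a[name]) * t
        for name in a
        if name in b
    }


preset_1 = {"cutoff": 100.0, "gain": 0.0}
preset_2 = {"cutoff": 200.0, "gain": 1.0}
halfway = interpolate_presets(preset_1, preset_2, 0.5)
```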

Adding new UI objects after presets have been stored

If you add a new UI object to the patch after pattrstorage is set up, the existing presets will not contain a value for it. You will need to re-save each preset with the correct setting for the new UI object, or edit the pattrstorage data directly.
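
The effect of adding a parameter later can be pictured with a plain Python model of the stored presets. This sketch does not read or write pattrstorage's real data; the dictionary layout and the helper name `backfill_parameter` are assumptions used only to show why older presets need a value for the new object.

```python
def backfill_parameter(presets: dict, name: str, value: float) -> dict:
    """Return a copy of the presets in which every slot has a value for `name`.

    Presets are modeled as {slot_number: {parameter_name: value}} (an
    assumption for this sketch). Slots that already store the parameter
    keep their existing value.
    """
    return {
        slot: {name: value, **params}  # existing entries override the default
        for slot, params in presets.items()
    }


stored = {
    1: {"cutoff": 100.0},                     # saved before "resonance" existed
    2: {"cutoff": 200.0, "resonance": 0.7},   # saved afterwards
}
updated = backfill_parameter(stored, "resonance", 0.2)
```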