overview

A series of projects that use Internet API’s for interactive media projects.

updated 2/14/2021.

Projects have been tested on Max8 and Mac OS Catalina – except where noted. Other dependencies are are listed on individual project pages.

My goal is to show a variety of methods to get data to and from Max. API’s come and go, as do the libraries that support them.

download

internet-sensors is on Github at: https://github.com/tkzic/internet-sensors

Each project is in a separate folder.

authorization

Some projects require passwords and API-keys from providers.

For example, for the ‘Twitter streaming API in Max’ project you’ll need to set up a Twitter application from your account to get authorization credentials.

For projects that need authorization usually you’ll just need to modify the patches/source code with your user information – as directed in the instructions. The API keys embedded in the code will not work unless specifically mentioned, like with the Google speech API.

help

API’s used in the projects change fairly often. So there’s no guarantee they’ll work. If you find problems or have ideas – please post to them to the github repository. Or email me at [email protected].

projects

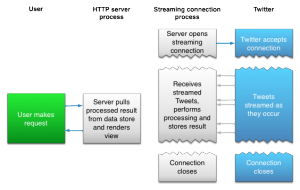

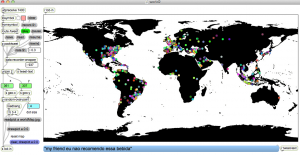

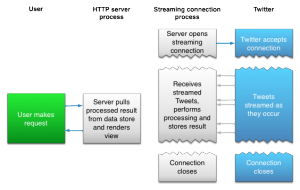

1. Twitter streaming API in Max (FM, php, curl, geocoding, [aka.speech], Soundflower (optional), Morse code, OSC, data recorder, Twitter v1.1 API, Twitter Apps, Oauth)

https://reactivemusic.net/?p=5786

2. Sending tweets from Max using curl ([sprintf], [aka.shell], xively.com API, zapier.com API, JSON, javascript Twitter v1.1 API, Oauth)

deprecated 2/11/2021 – old project link here: https://reactivemusic.net/?p=5447

3. Send and receive tweets in Max using ruby (ruby, API, JSON, javascript Twitter v1.1 API, OSC, Oauth)

New! – use the project above to send tweets from using a Fisher Price “Little Tikes” piano: https://reactivemusic.net/?p=6993

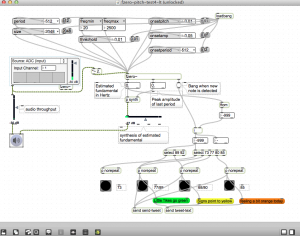

4. Speech to text in Max (Google speech API, JSON, javascript, sox, Twitter v1.1 API, Oauth)

Note: Send Tweets using speech as well.

https://reactivemusic.net/?p=4690

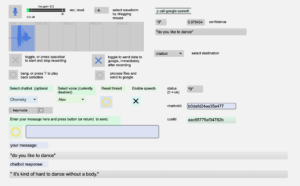

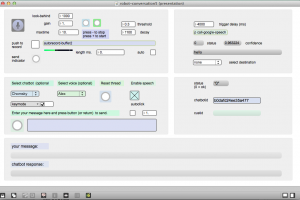

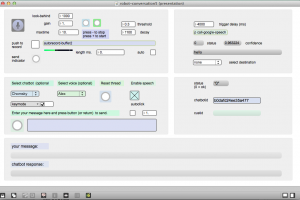

5. A conversation with a robot in Max (Google speech API, sox, JSON, pandorabots API, python, [aka.speech]

https://reactivemusic.net/?p=9834

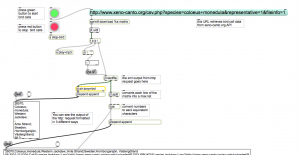

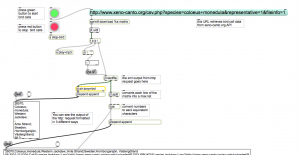

7. Playing bird calls in Max (xeno-canto API, [jit.uldl], [jit.qt.movie])

https://reactivemusic.net/?p=4225

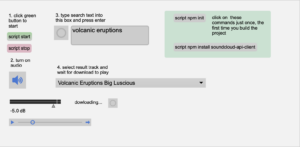

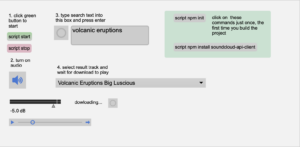

8. Soundcloud API in Max (node.js)

https://reactivemusic.net/?p=20120

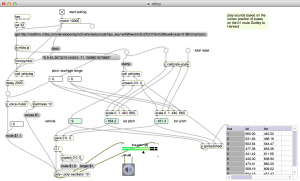

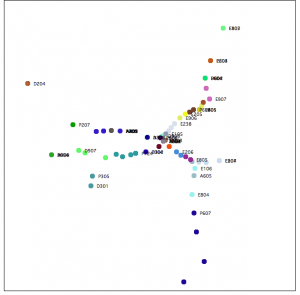

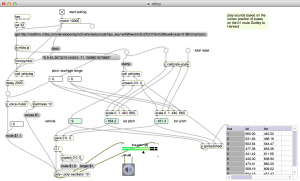

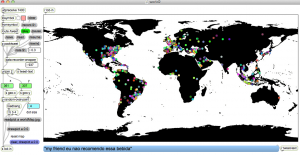

9. Real time train map using Max and node.js (XML, JSON, OSC, data recorder, web sockets, Irish Rail API)

https://reactivemusic.net/?p=5477

10. stock market music in Max (OSC, netcat, php, mysql, html, javascript, Yahoo API, linux)

…updates in progress…

https://reactivemusic.net/?p=12029

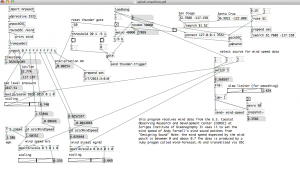

11. Using weather forecast data to drive weather sounds in Pure Data (ruby, OSC, JSON, openweathermap API, “Designing Sound” by Andy Farnell)

https://reactivemusic.net/?p=5846

… updates in progress…

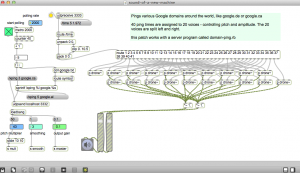

12. Using ping times to control oscilators in Max (Mashape ping-uin API, ruby, OSC, JSON)

https://reactivemusic.net/?p=5945

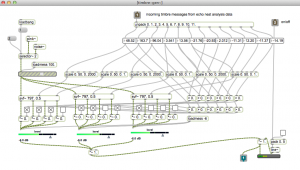

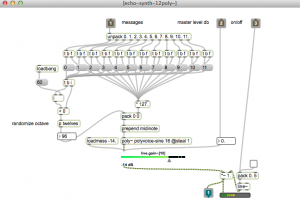

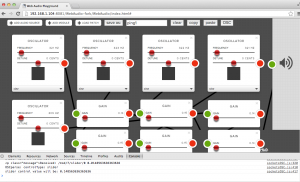

13. Spotify Segment analysis player – sonification of audio analysis data from Spotify (echo nest) API, node, Max/MSP)

https://reactivemusic.net/?p=20096

14. Quadcopter AR_drone – Fly a quadcopter using Max – with streaming Web video. ( node.js, AR_drone, Google Chrome, Osc, Max/MSP)

deprecated 2/14/2021 – old project link: https://reactivemusic.net/?p=6635

15. Adding markers to Google Maps in Max – ( node.js, ruby, Google Chrome, Osc, Max/MSP, websockets, Google Maps API, Jquery, javascript)

deprecated 2/14/2021 – old project link: https://reactivemusic.net/?p=11412

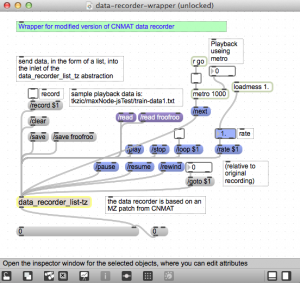

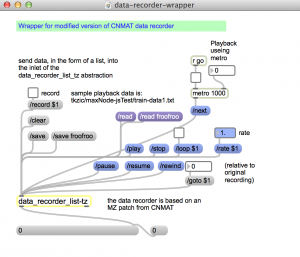

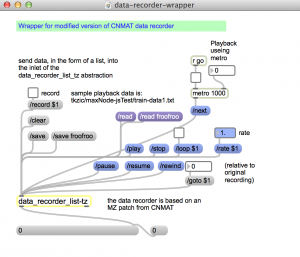

16. Max data recorder – Record and play back streams of data simultaneously at various rates

https://reactivemusic.net/?p=8053

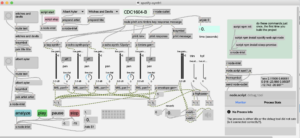

17. MBTA bus data in Max – Sonification of Mass Ave buses, from Harvard to Dudley

… updates in progress…

https://reactivemusic.net/?p=17524