Algorithmic composition and generative music – part 2

Reactive music

With reactive music, audio is the input. Music is the output. Music can also be the input.

from Wikipedia: http://en.wikipedia.org/wiki/RjDj

“Reactive music, a non-linear form of music that is able to react to the listener and his environment in real-time.[2] Reactive music is closely connected to generative music, interactive music, and augmented reality. Similar to music in video-games, that is changed by specific events happening in the game, reactive music is affected by events occurring in the real life of the listener. Reactive music adapts to a listener and his environment by using built in sensors (e.g. camera, microphone, accelerometer, touch-screen and GPS) in mobile media players. The main difference to generative music is that listeners are part of the creative process, co-creating the music with the composer. Reactive music is also able to augment and manipulate the listeners real-world auditory environment.[3]

What is distributed in reactive music is not the music itself, but software that generates the music…”
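To make "audio is the input, music is the output" concrete, here is a minimal Python sketch built on stated assumptions. It is not RJDJ code. Input is assumed to arrive as blocks of float samples. A hypothetical mapping then turns the smoothed loudness of the surroundings into notes from a C major pentatonic scale. The names `rms`, `level_to_note`, and `react`, and the choice of scale, are all illustrative.

```python
import math

# Hypothetical scale: C major pentatonic as MIDI note numbers.
PENTATONIC = [60, 62, 64, 67, 69, 72, 74, 76]


def rms(block):
    """Root-mean-square level of one block of samples (floats in -1..1)."""
    return math.sqrt(sum(x * x for x in block) / len(block)) if block else 0.0


def level_to_note(level, scale=PENTATONIC):
    """Map a level in 0..1 onto a note: louder surroundings pick higher notes."""
    level = min(max(level, 0.0), 1.0)
    index = min(int(level * len(scale)), len(scale) - 1)
    return scale[index]


def react(blocks, smoothing=0.5):
    """Turn a stream of input audio blocks into a list of MIDI notes.

    A one-pole smoother on the RMS level keeps the notes from
    jumping around on every transient in the environment.
    """
    notes = []
    env = 0.0
    for block in blocks:
        env = smoothing * env + (1.0 - smoothing) * rms(block)
        notes.append(level_to_note(env))
    return notes
```

For example, silence stays on the lowest note, and three loud blocks in a row (`react([[1.0] * 64] * 3)`) climb the scale to `[69, 74, 76]` as the smoothed level rises. A real reactive scene would send these notes to a synth rather than return a list.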

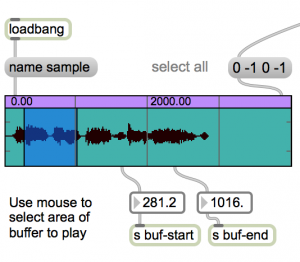

Ableton Live field recorder

Uses dummy clips to apply rhythmic envelopes and effects to ambient sound: https://reactivemusic.net/?p=2658
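The dummy-clip trick happens inside Live. The underlying idea is to multiply live ambient sound by a tempo-synced on/off envelope, and that can be sketched in plain Python. This is an illustration under assumptions, not how Live implements clip envelopes. The 16th-note `pattern`, the `bpm`, and the 5 ms anti-click ramp are hypothetical choices.

```python
def gate_envelope(num_samples, sample_rate, bpm, pattern, ramp_ms=5.0):
    """Build a per-sample gain curve from a looping 16th-note on/off pattern.

    pattern: list of 0/1 steps, e.g. [1, 0, 1, 1]; it repeats.
    ramp_ms: short linear fade so the gate does not click when it opens or closes.
    """
    step_len = max(1, int(sample_rate * 60.0 / bpm / 4))  # samples per 16th note
    ramp = max(1, int(sample_rate * ramp_ms / 1000.0))    # samples per full fade
    env = []
    gain = 0.0
    for n in range(num_samples):
        target = float(pattern[(n // step_len) % len(pattern)])
        # Slew toward the target by at most 1/ramp per sample.
        if gain < target:
            gain = min(target, gain + 1.0 / ramp)
        elif gain > target:
            gain = max(target, gain - 1.0 / ramp)
        env.append(gain)
    return env


def apply_gate(samples, sample_rate, bpm, pattern):
    """Impose the rhythmic gate on a list of ambient-sound samples."""
    env = gate_envelope(len(samples), sample_rate, bpm, pattern)
    return [s * g for s, g in zip(samples, env)]
```

Swapping the 0/1 steps for intermediate gains, or driving a filter cutoff instead of volume, follows the same pattern of a tempo-locked control curve applied to whatever the microphone hears.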

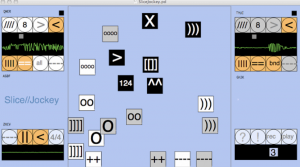

InstantDecomposer and Slice/Jockey

Making music from sounds that are not music.

by Katja Vetter

InstantDecomposer is an update of Slice//Jockey. It has not been released publicly. Slice//Jockey runs on Mac OS, Windows, and Linux, including the Raspberry Pi.

http://www.katjaas.nl/slicejockey/slicejockey.html

Slice//Jockey help: https://reactivemusic.net/?p=19065

Slice//Jockey is written in Pd (Pure Data), an open-source language by Miller Puckette, who also wrote the original Max.

Local file reference

- local: InstantDecomposer version: tkzic/pdweekend2014/IDecTouch/IDecTouch.pd

- local: slicejockey2test2/slicejockey2test2.pd

Lyrebirds

A music factory.

By Christopher Lopez

RJDJ

- A company that mysteriously vanished? http://en.wikipedia.org/wiki/RjDj

- Some explanation? http://evolver.fm/2012/09/24/rip-rjdj-developer-pivots-retires-legendary-immersive-app/

Inception and The Dark Knight iOS apps:

As of iOS 8.2, Dark Knight crashes on load. Inception only works with “Reverie Dream” (lower left corner).

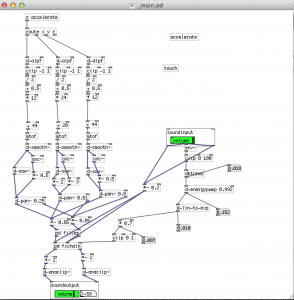

Running RJDJ scenes in Pd in Mac OS X

Though RJDJ is a lost relic in 2015, it still works in Pd. The example scenes used here are meant to run under libpd on iOS or Android, but they also work in Mac OS X.

First, use Pd-extended. OK, maybe you don’t need to.

1. Read the article from Makezine by Mike Dixon http://makezine.com/2008/11/03/howto-hacking-rjdj-with-p/

2. Download sample scenes from here: http://puredata.info/docs/workshops/MobileArtAndCodePdOnTheIPhone The link is under the heading “RJDJ Sources”

3. Download RJLIB from here: https://github.com/rjdj/rjlib

4. Add these folders in RJLIB to your Pd path (in Preferences):

- pd

- rj

- deprecated

5. Now, try running the scene called “echelon” from the sample scenes you downloaded. It should be in the folder rjdj_scenes/Echelon.rj/_main.pd

- turn on audio

- turn up the sliders

- you should hear a bunch of crazy feedback delay effects

Note: with Pd-extended 0.43-4, the error message “base: no method for ‘float’” fills the console as soon as audio is turned on.
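The feedback delay effects in Echelon come from delay lines whose output is fed back into their input. Below is a generic mono feedback delay in Python. It sketches the technique and does not transcribe the Echelon patch. `delay_samples`, `feedback`, and `mix` are hypothetical parameter names.

```python
def feedback_delay(samples, delay_samples, feedback=0.5, mix=0.5):
    """Mono feedback delay using a circular buffer.

    The delay line holds w[n] = x[n] + feedback * w[n - D];
    the output mixes the dry input x[n] with the delayed signal w[n - D].
    """
    if delay_samples < 1:
        raise ValueError("delay_samples must be at least 1")
    if not 0.0 <= feedback < 1.0:
        raise ValueError("feedback must be in [0, 1) so the echoes decay")
    buffer = [0.0] * delay_samples  # circular delay line, initially silent
    pos = 0
    out = []
    for x in samples:
        delayed = buffer[pos]                 # signal written D samples ago
        buffer[pos] = x + feedback * delayed  # input plus recirculated echo
        pos = (pos + 1) % delay_samples
        out.append((1.0 - mix) * x + mix * delayed)
    return out
```

Feeding in a single impulse with `delay_samples=2`, `feedback=0.5`, and `mix=1.0` gives echoes of 1.0, 0.5, 0.25 every two samples. Each trip around the loop is scaled by `feedback`, so values below 1 keep the effect stable. The circular buffer avoids shifting the whole delay line on every sample.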

Scenes that I have gotten to work:

The ones marked with a * seem to work well without modification or an external GUI. They all produce error messages, and they really are meant to work on mobile devices, so a lot of the sensor input isn’t there.

- Amenshake (you will need to provide accelerometer data somehow)

- Atsuke (not sure how to control)

- CanOfBeats (requires accelerometer)

- ChordSphere (sounds cool even without accelerometer)

- Diving*

- DubSchlep* (interesting)

- Ehchelon*

- Eargasm*

- Echochamber*

- Echolon*

- Flosecond (requires accelerometer)

- FSCKYOU* (Warning, massive earsplitting feedback)

- Ghostwave* (Warning, massive earsplitting feedback)

- HeliumDemon (requires accelerometer)

- JingleMe*

- LoopRinger*

- Moogarina

- NobleChoir* (press the purple button and talk)

- Noia*

- RingMod*

- SpaceBox*

- SpaceStation (LoFi algorithmic synth)

- WorldQuantizer*

to be continued…

Random RJDJ links

Including stuff explained above.

- “RJDJ Addles me”, by Ann Althouse: http://youtu.be/_Ss52PH0oEM quite possibly the worst video ever

- The second worst video ever, by Jeangenie 1970: http://youtu.be/vWsc2bwRkI0

- rjlib: https://github.com/rjdj/rjlib – last update 5/2013

- PdParty https://github.com/danomatika/PdParty

- sample scenes? http://puredata.info/docs/workshops/MobileArtAndCodePdOnTheIPhone

- try echelon, eargasm, diving

- Makezine article (links are outdated) http://makezine.com/2008/11/03/howto-hacking-rjdj-with-p/

- libpd http://libpd.cc/about/ community http://createdigitalnoise.com/categories/pd-everywhere

- RJDJ Tokyo user group: https://reactivemusic.net/?p=7585

Miscellaneous

- Shepard tones by Christopher Dobrian: https://reactivemusic.net/?p=17255

- Visual Shepard tones: https://reactivemusic.net/?p=17251

I’m thinking of something: http://imthinkingofsomething.com