By Louis Lazaris at Sitepoint

http://www.sitepoint.com/5-libraries-html5-audio-api/

- webaudiox.js : experimental

- howler.js : audio playback and sprites

- pedalboard.js : guitar pedal simulation

- wad : build synthesizers

- fifer : audio playback

A web audio example by Gaëtan Renaudeau, in jsfiddle. Click ‘result’ to play.

Notes, from: “Making Musical Apps with Csound using libpd and csoundapi~” at the 2nd International Csound Conference October 25th-27th, 2013, in Boston.

For about five months in 2013-2014 I worked as a programmer with Boulanger Labs to develop an app called Muse, using the Leap Motion sensor. Leap Motion is a controller that detects hand movement. Boulanger Labs is a startup founded by Dr. Richard Boulanger (“Dr. B”) to design music apps, working with students in the Electronic Production and Design (EPD) department at Berklee College of Music.

Dr. B. was asked by a former student, Brian Transeau (BT), to help develop a music app in conjunction with Leap Motion. The goal was to have something in stores for Christmas – about 2 months from the time we started. BT would design the app and we would code it.

What would the app do? It would let you improvise music in real time by moving your hands in the air. You would select notes from parallel horizontal grids of cubes – a melody note from the top, a chord from the middle, and a bass note from the bottom. It would be beautiful and evolving, like “Bloom” by Eno and Chilvers.

We bought Leap Motion sensors. We downloaded apps from the Airspace store to learn about the capabilities of the sensor.

One of our favorite apps is “Flocking”. It displays glowing flames to represent fingers. When you move your fingers it causes a school of fish to disperse.

We started to make prototypes in Max, using the aka.leapmotion external.

This was the first prototype and one of my favorites. It randomly plays MIDI notes in proportion to how you move your fingers. It feels responsive.

Mac OS app: https://reactivemusic.net/?p=7434

Max code: https://reactivemusic.net/?p=11727

Local file: leapfinger3.app (in Applications)
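The idea behind this prototype, random notes that fire more often the faster the fingers move, can be sketched in a few lines. This is not the Max patch itself. The function names, the speed units and the `max_speed` scaling are all assumptions made for illustration.

```python
import random

def note_probability(finger_speed, max_speed=1000.0):
    """Map finger speed (hypothetical units, e.g. mm/s) to a per-tick
    probability of playing a note, clamped to the range 0..1."""
    return max(0.0, min(1.0, finger_speed / max_speed))

def maybe_play_note(finger_speed, rng, low=48, high=84):
    """Return a random MIDI note number, or None if no note fires this tick."""
    if rng.random() < note_probability(finger_speed):
        return rng.randint(low, high)
    return None

rng = random.Random(1)
still = [maybe_play_note(0.0, rng) for _ in range(100)]     # hand at rest
fast = [maybe_play_note(1000.0, rng) for _ in range(100)]   # hand moving quickly

# Count how many ticks produced a note in each case.
print(sum(n is not None for n in still), sum(n is not None for n in fast))
```

A still hand produces no notes and a fast hand produces one on every tick. Speeds in between give a proportional note density.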

Does it remind you of any of this?

“So this is an idea of the UI paralaxing. In the background it would be black with say stars. You could see your fingertips in this space and your hand movements would effect perspective changes in the UI. When you touch a cube it would light in 3D space radiating out (represented by the lens flares). This flare or light (like bloom) should continue in the direction you touched the cube. Instead of blocks, these would be grids *like 3D graph paper* subdivided into probably 12-24 cubes.” Best, _BT

Stephen Lamb joined the team as a C++ OpenGL programmer, and began exploring the Leap Motion API in Cinder C++.

What kind of gestures can we get to work?

Darwin Grosse, of Cycling 74, sent us a new version of aka.leapmotion that handles predefined gestures, like swipes.

The next prototype, an audio proof of concept, was written in Cinder C++. The FM oscillators and feedback delay are written at the sample level, using callbacks. The delay line code was borrowed from Julius O. Smith at CCRMA: https://reactivemusic.net/?p=7513

http://zerokidz.com/ideas/?p=8643

Delay line code: https://reactivemusic.net/?p=7513
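To show what "written at the sample level" means, here is a minimal sketch of a two-operator FM oscillator feeding a feedback delay, computed one sample at a time. It is not the Cinder prototype and not Julius O. Smith's delay line code. The class name, the frequencies and the feedback amount are assumptions chosen for illustration.

```python
import math

SAMPLE_RATE = 44100

def fm_sample(n, carrier_hz=220.0, mod_hz=110.0, index=2.0):
    """One sample of a simple FM oscillator at sample index n."""
    t = n / SAMPLE_RATE
    modulator = index * math.sin(2 * math.pi * mod_hz * t)
    return math.sin(2 * math.pi * carrier_hz * t + modulator)

class FeedbackDelay:
    """Circular-buffer delay line: y[n] = x[n] + feedback * y[n - delay]."""

    def __init__(self, delay_samples, feedback=0.5):
        self.buffer = [0.0] * delay_samples
        self.pos = 0
        self.feedback = feedback

    def tick(self, x):
        # The slot at pos holds the output from delay_samples ticks ago.
        y = x + self.feedback * self.buffer[self.pos]
        self.buffer[self.pos] = y
        self.pos = (self.pos + 1) % len(self.buffer)
        return y

def render(num_samples, delay):
    """Stand-in for an audio callback: fill a block one sample at a time."""
    return [delay.tick(fm_sample(n)) for n in range(num_samples)]

# Feed a single impulse through a 4-sample delay to see the decaying echoes.
impulse = FeedbackDelay(delay_samples=4, feedback=0.5)
echoes = [impulse.tick(1.0 if n == 0 else 0.0) for n in range(9)]
print(echoes)
```

The impulse comes back every four samples at half the previous level. In a real audio callback, `render` would write into the buffer handed over by the audio driver.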

Christopher Konopka, sound designer and programmer, joins the team, but won’t be able to work on the project until October.

At this point we are having doubts about the utility of the Leap Motion sensor for musical apps. Because it is camera-based, the positioning of hands is critical. There is no haptic feedback. We are experiencing high rates of false positives as well as untracked gestures.

http://zerokidz.com/ideas/?p=9448

http://zerokidz.com/ideas/?p=9485

Dr. B asks us to consider RJDJ style environmental effects.

This is when we find out that audio input doesn’t work in Cinder. After staying up until about 6 AM, I decide to run a test of libPd in openFrameworks C++. It works within minutes. libPd allows Pd to run inside of C++. By the way, libPd is the platform used by RJDJ.

Programming notes:

We can now write music using Pd, and graphics using OpenGL C++. This changes everything.

What about Csound? It also runs in Pd. Will it run in libPd? Dr. B introduces me to Victor Lazzarini, author of csoundapi~, and we figure out how to compile Csound into the project that evening.

Paul Batchelor joins the team. He is writing generative music in Csound for a senior project at Berklee. Paul and Christopher write a Csound/Pd prototype, in a couple of days – that will form the musical foundation of the app.

We build a prototype using Paul’s generative Csound music and connect it to Leap Motion in openFrameworks.

Local file: (leapPdTest5ExampleDebug.app in applications)

In this next video, it feels like we are actually making music.

Note: local source code is ofx8 addons leapmotion : leapPdTest5 – but it probably won’t compile because we moved the libs into the proper folders later on

This was a week of madness. We had essentially three separate apps that needed to be joined: Steve’s OpenGL prototype, my libPd prototype, and Paul’s Csound code. So every time Steve changed the graphics – or Paul modified the Csound code – I needed to reconstruct the project.

Finally we were able to upload a single branch of the code to Github.

Steven Yi, programmer and Csound author, helped repair the Xcode linking process. We wanted to be able to install the app without asking users to install Csound or Pd. Steven figured out how to do this in a few hours…

Later that day, for various reasons Steve Lamb leaves the project.

I take over the graphics coding – even though I don’t know OpenGL. BT is justifiably getting impatient. I am exhausted.

Jonathan Heppner, author of AudioGL, joins the team. Jonathan will redo the graphics and essentially take over the design and development of the app in collaboration with Dr. B.

There is an amazing set of conference calls between Leap Motion, BT, Dr. B, and the development team. Leap Motion gives us several design prototypes – to simplify the UI. Dr. B basically rules them out, and we end up going with a Rubik’s cube design suggested by Jonathan. At one point BT gives a classic explanation of isorhythmic overlapping drum loops.

While Jonathan is getting started with the new UI, we fork a version to allow us to refine the OSC messaging in Pd.

Christopher develops an extensive control structure in Pd that integrates the OpenGL UI with the backend Csound engine.

Christopher and Paul design a series of sample sets, drawing from nature sounds, samples from BT, Csound effects, and organically generated Csound motifs. The samples for each set need to be pitched and mastered so they will be compatible with each other.

At this point we move steadily forward – there were no more prototypes, except for experiments, like this one: http://zerokidz.com/ideas/?p=9135 (that did not go over well with the rest of the team :-))

Tom Shani, graphic designer, and Chelsea Southard, interactive media artist, join the team. Tom designs a Web page, screen layouts, logos and icons. Chelsea provides valuable user experience and user interface testing as well as producing video tutorials.

Also, due to NDAs, development details from this point on are confidential.

We miss the Christmas deadline.

That brings us up to the NAMM show where BT and Dr. B produce a promotional video and use the App for TV and movie soundtrack cues.

http://zerokidz.com/ideas/?p=9615

There are more than a few loose ends. The documentation and how-to videos have yet to be completed. There are design and usability issues remaining with the UI.

This has been one of the most exhausting and difficult development projects I have worked on. The pace was accelerated by a series of deadlines. None of the deadlines have been met – but we’re all still hanging in there, somehow. The development process has been chaotic – with flurries of last minute design changes and experiments preceding each of the deadlines. We are all wondering how Dr. B gets by without sleep.

I only hope we can work through the remaining details. The app now makes beautiful sounds and is amazingly robust for its complexity. I think that with simplification of the UI, it will evolve into a cool musical instrument.

We scaled back features and added a few new ones including a control panel, a MIDI controller interface, a new percussion engine, and sample transposition tweaks. With amazing effort from Christopher, Jonathan, Paul, Chelsea, Tom S., and Dr. B – the app is completed and released!

https://airspace.leapmotion.com/apps/muse/osx

But why did it get into the Daily Mail? http://www.dailymail.co.uk/sciencetech/article-2596906/Turn-Mac-MUSICAL-INSTRUMENT-App-USB-device-lets-use-hand-gestures-make-sounds.html

Sencha Touch 2.x has an SDK

Getting started: http://docs.sencha.com/touch/2.3.1/#!/guide/getting_started

Local folders

Sencha Fiddle (for online testing) https://fiddle.sencha.com

Version 1.1 update to Blob now available in the iOS App store.

http://zerokidz.com/blob/home.html

Blob (by Bjorn Lindberg) was converted in 2011 to run as a webApp using SenchaTouch 1.0 and Phonegap 0.94. Both of these frameworks have evolved to the point that the original code is no longer workable.

Here is an update to the Web app version: http://zerokidz.com/blob1.1/www/sencha-index.html

bugs

Like SecretSpot, the native app runs only in portrait orientation.

notes

After this update has been submitted to Apple, I will try to convert it to run in current versions of Sencha Touch and Phonegap. There is a relatively small amount of Sencha code in the app: a main window, toolbar, canvas window, and a few customized buttons.

Version 1.1 update to SecretSpot now available in the iOS app store.

http://zerokidz.com/secretspot/Home.html

SecretSpot was written in 2011 as a webApp using SenchaTouch 1.0 and Phonegap 0.94. Both of these frameworks have evolved to the point that the original code is no longer workable. With iOS 6, the app version of SecretSpot broke: audio was only triggering with every other touch.

Instead of upgrading the JavaScript code, I made a few changes to the Phonegap library so it would compile for iOS 7, then added new icons and splash screen files – and resubmitted the app.

One change was to alter the way that launch images get loaded during webView initialization, to prevent annoying white flashes. This version also does an extra load of the launch image – instead of just going to a black background while the webView is loading. If this ends up being too slow, then it may be better to go directly to a black background following the initial launch image. See this post: http://stackoverflow.com/questions/2531621/iphone-uiwebview-inital-white-view

The native app runs only in portrait orientation. This is something the app has had problems with before. Something has changed in the process of detecting current orientation. It’s likely to be a Phonegap issue, as the Web app version works fine.

I may have a look at this bug – and apply updates to the Blob app as well. But the real solution is to upgrade to current versions of the frameworks.

This is more a documentation thing: If you don’t touch the canvas when the app starts, then there will be no sound unless you restart the app.

“OpenBTS is a Unix application that uses a software radio to present a GSM air interface to standard 2G GSM handset and uses a SIP softswitch or PBX to connect calls.”

Transmit AM audio at 727 kHz using a voltage divider and an Arduino.

By Markus Gritsch

Note: with the actual circuit the signal is closer to 760 kHz.

The sketch samples incoming audio at about 34 kHz and rebroadcasts it as RF using the Arduino clock.

This is a simplification of Markus’ original circuit. It eliminates the tuned output circuit, probably at the expense of increased harmonic distortion.
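The frequencies involved can be checked with a little arithmetic. The sketch below uses the formulas and component values given in the comments of Markus’ Arduino sketch (16 MHz clock, `TIMER_TOP` of 20 or 21, L1 = 47 uH, C2 = 1 nF). The Python function names are my own.

```python
import math

F_CPU = 16_000_000  # Arduino clock in Hz, as stated in the sketch comments

def carrier_hz(timer_top):
    """Timer2 fast-PWM frequency with no prescaler: F_CPU / (OCR2A + 1)."""
    return F_CPU / (timer_top + 1)

def lc_resonance_hz(inductance_h, capacitance_f):
    """Resonant frequency of an LC circuit: 1 / (2 * pi * sqrt(L * C))."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))

for top in (20, 21):
    print(f"TIMER_TOP = {top}: carrier = {carrier_hz(top) / 1000:.1f} kHz")

# Tuned output circuit of the original design (omitted in the simplified one).
print(f"L1 = 47 uH, C2 = 1 nF: fres = {lc_resonance_hz(47e-6, 1e-9) / 1000:.1f} kHz")
```

With `TIMER_TOP` set to 21 the carrier is about 727 kHz, and with 20 (the value in the sketch as reprinted) it is about 762 kHz, which may be why the measured signal is closer to 760 kHz.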

The voltage divider uses two 47 kΩ resistors and a 1 µF electrolytic capacitor. It is the input half of the original circuit.

http://dangerousprototypes.com/forum/viewtopic.php?f=56&t=2892#p28410

(I substituted two 100 kΩ resistors in parallel, giving 50 kΩ, for each of the 47 kΩ resistors.)

A voltage divider is useful as a general purpose coupler for sampling analog signals with the Arduino’s analog (ADC) input: it biases the AC-coupled signal at half the supply voltage.
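As a rough check of the input stage, the bias voltage and the high-pass cutoff can be computed from the part values. The cutoff formula is the one in the sketch comments, fg = 1 / (2 * pi * (R1 || R2) * C1). The 5 V supply is taken from the circuit diagram, and the function names are assumptions.

```python
import math

def parallel(r1, r2):
    """Equivalent resistance of two resistors in parallel."""
    return r1 * r2 / (r1 + r2)

def divider_bias_v(v_supply, r_top, r_bottom):
    """DC voltage at the midpoint of the divider."""
    return v_supply * r_bottom / (r_top + r_bottom)

def highpass_cutoff_hz(r_top, r_bottom, c_farads):
    """Cutoff of the coupling capacitor working into the divider."""
    return 1.0 / (2.0 * math.pi * parallel(r_top, r_bottom) * c_farads)

C1 = 1e-6  # 1 uF coupling capacitor
options = {
    "47k (original)": 47e3,
    "2 x 100k in parallel (substitute)": parallel(100e3, 100e3),
}
for label, r in options.items():
    bias = divider_bias_v(5.0, r, r)
    cutoff = highpass_cutoff_hz(r, r, C1)
    print(f"{label}: bias = {bias:.2f} V, cutoff = {cutoff:.2f} Hz")
```

Both resistor choices put the input at 2.5 V and keep the cutoff below 7 Hz, well under the audio band.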

The Arduino sketch is Markus’ original – reprinted here:

(note) Local file is AM_audio_transmitter.

    // Simple AM Radio Signal Generator :: Markus Gritsch
    // http://www.youtube.com/watch?v=y1EKyQrFJ-o
    //
    //               /|\ +5V                               ANT
    //                |                                   \ | /
    //                |        ----------------            \|/
    //               | | R1   | Arduino 16 MHz |     C2     |
    //               | | 47k  |                |     ||     |   about
    // audio   C1    | |      |      TIMER_PIN >-----||-----+   40Vpp
    // input   ||     |       |                |     ||     |
    //   o-----||-----+-------> INPUT_PIN      |     1nF    |
    //        +||     |       |                |            )
    //         1uF   | | R2   |   ATmega328P   |            ) L1
    //               | | 47k   ----------------    fres =   ) 47uH
    //   fg < 7 Hz   | |                          734 kHz   )
    //                |                                     |
    //                |                                     |
    //               --- GND                               --- GND
    //
    // fg = 1 / ( 2 * pi * ( R1 || R2 ) * C1 ) < 7 Hz
    // fres = 1 / ( 2 * pi * sqrt( L1 * C2 ) ) = 734 kHz

    #define INPUT_PIN 0   // ADC input pin
    #define TIMER_PIN 3   // PWM output pin, OC2B (PD3)
    #define DEBUG_PIN 2   // to measure the sampling frequency
    #define LED_PIN   13  // displays input overdrive

    #define SHIFT_BY  3   // 2 ... 7 input attenuator
    #define TIMER_TOP 20  // determines the carrier frequency
    #define A_MAX     TIMER_TOP / 4

    void setup() {
      pinMode( DEBUG_PIN, OUTPUT );
      pinMode( TIMER_PIN, OUTPUT );
      pinMode( LED_PIN, OUTPUT );

      // set ADC prescaler to 16 to decrease conversion time (0b100)
      ADCSRA = ( ADCSRA | _BV( ADPS2 ) ) & ~( _BV( ADPS1 ) | _BV( ADPS0 ) );

      // non-inverting; fast PWM with TOP; no prescaling
      TCCR2A = 0b10100011; // COM2A1 COM2A0 COM2B1 COM2B0 - - WGM21 WGM20
      TCCR2B = 0b00001001; // FOC2A FOC2B - - WGM22 CS22 CS21 CS20

      // 16E6 / ( OCR2A + 1 ) = 762 kHz @ TIMER_TOP = 20
      OCR2A = TIMER_TOP;     // = 727 kHz @ TIMER_TOP = 21
      OCR2B = TIMER_TOP / 2; // maximum carrier amplitude at 50% duty cycle
    }

    void loop() {
      // about 34 kHz sampling frequency
      digitalWrite( DEBUG_PIN, HIGH );
      int8_t value = (analogRead( INPUT_PIN ) >> SHIFT_BY ) - (1 << (9 - SHIFT_BY ));
      digitalWrite( DEBUG_PIN, LOW );

      // clipping
      if( value < -A_MAX) {
        value = -A_MAX;
        digitalWrite( LED_PIN, HIGH );
      } else if ( value > A_MAX ) {
        value = A_MAX;
        digitalWrite( LED_PIN, HIGH );
      } else {
        digitalWrite( LED_PIN, LOW );
      }

      OCR2B = A_MAX + value;
    }
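To see how the loop turns an audio sample into carrier amplitude, the arithmetic can be replayed off the board. The constants mirror the `#define` values in the sketch. The function `duty_register` is a hypothetical name for what the body of `loop()` computes and then writes to `OCR2B`.

```python
SHIFT_BY = 3
TIMER_TOP = 20
A_MAX = TIMER_TOP // 4  # integer division, matching the C macro (20 / 4 = 5)

def duty_register(adc_reading):
    """Map a 10-bit ADC reading (0..1023) to the value the loop writes to OCR2B."""
    # Attenuate by shifting, then remove the mid-scale offset left by the 2.5 V bias.
    value = (adc_reading >> SHIFT_BY) - (1 << (9 - SHIFT_BY))
    # Clip to +/- A_MAX (the sketch also lights the LED when this happens).
    value = max(-A_MAX, min(A_MAX, value))
    return A_MAX + value

for reading in (0, 472, 512, 520, 559, 1023):
    print(reading, "->", duty_register(reading))
```

A silent input (reading 512) gives a value of 5, and the audio swings the register between 0 and 10, which is `TIMER_TOP / 2`, the 50% duty cycle that the sketch comments call maximum carrier amplitude. Readings outside roughly 472 to 559 are clipped.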

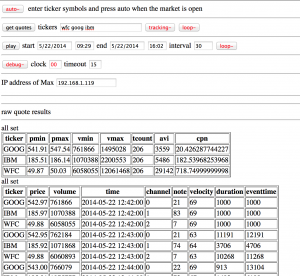

Make music from the motion of stock prices.

This program gathers stock prices into a database. It generates MIDI data – mapping price to pitch, and mapping trading volume to velocity and rhythmic density. It uses ancient Web technology: an HTML/JavaScript front-end with a PHP back-end accessing a MySQL database.
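The mapping itself is simple linear scaling. Here is a minimal sketch, assuming prices and volumes are scaled against the minimum and maximum of the series being played. The note and velocity ranges, the function names and the sample quotes are all made up for illustration, and the project's actual mapping may differ.

```python
def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamped."""
    if in_hi == in_lo:
        return out_lo
    ratio = (value - in_lo) / (in_hi - in_lo)
    ratio = max(0.0, min(1.0, ratio))
    return out_lo + ratio * (out_hi - out_lo)

def quotes_to_notes(quotes, pitch_range=(36, 96), velocity_range=(40, 127)):
    """Turn a series of quotes into (MIDI pitch, MIDI velocity) pairs."""
    prices = [q["price"] for q in quotes]
    volumes = [q["volume"] for q in quotes]
    notes = []
    for q in quotes:
        pitch = round(scale(q["price"], min(prices), max(prices), *pitch_range))
        velocity = round(scale(q["volume"], min(volumes), max(volumes), *velocity_range))
        notes.append((pitch, velocity))
    return notes

# Made-up sample data, not real quotes.
quotes = [
    {"price": 10.00, "volume": 1000},
    {"price": 12.50, "volume": 3000},
    {"price": 15.00, "volume": 9000},
]
print(quotes_to_notes(quotes))  # [(36, 40), (66, 62), (96, 127)]
```

Rhythmic density could be derived from volume in the same way, for example by scaling volume to a number of notes per beat.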

Case study: http://zproject.wikispaces.com/stock+market+music

To run this project, you will need a server (preferably linux) with the following capabilities:

All of this is pretty standard, so I won’t talk about it here. I am running it on Ubuntu Linux. The layout described here is only one of many ways to get the project working.

https://github.com/tkzic/internet-sensors

folder: stock-market

stock_market_music.maxpat

The selectstock3.php program harvests stock quote data and stores it in a mysql database.

The database name is: stocks – table is: quotes

Table structure:

The table is a basic flat representation of a stock quote, indexed by the ticker symbol. It contains price, volume, high/low/change, timestamp, etc. For our purposes, the price, volume and timestamp are essentially all we need.

SQL to create the table:

    CREATE TABLE `stocks`.`quotes` (
      `ticker` varchar( 12 ) NOT NULL ,
      `price` decimal( 10, 2 ) NOT NULL ,
      `qtime` datetime NOT NULL ,
      `pchange` decimal( 10, 2 ) NOT NULL ,
      `popen` decimal( 10, 2 ) NOT NULL ,
      `phigh` decimal( 10, 2 ) NOT NULL ,
      `plow` decimal( 10, 2 ) NOT NULL ,
      `volume` int( 11 ) NOT NULL ,
      `ttime` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ,
      `id` int( 11 ) NOT NULL AUTO_INCREMENT ,
      `spare` varchar( 30 ) DEFAULT NULL ,
      UNIQUE KEY `id` ( `id` ) ,
      KEY `ticker` ( `ticker` )
    ) ENGINE = MyISAM DEFAULT CHARSET = latin1 COMMENT = 'stock quote transactions';

The webpage control program allows you to select stocks by ticker symbol, and get either one quote or quotes at a regular time interval. Each quote is inserted into the quotes table for later retrieval and analysis.
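The store-then-retrieve cycle can be tried without a server. This sketch swaps in Python's built-in SQLite module for the PHP/MySQL stack and keeps only the ticker, price, volume and quote time columns. The helper names and the sample rows are assumptions, not part of the project.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.execute(
    """CREATE TABLE quotes (
           id     INTEGER PRIMARY KEY AUTOINCREMENT,
           ticker TEXT    NOT NULL,
           price  REAL    NOT NULL,
           volume INTEGER NOT NULL,
           qtime  TEXT    NOT NULL
       )"""
)

def store_quote(conn, ticker, price, volume, qtime):
    """Insert one quote, as the harvesting script would after each fetch."""
    conn.execute(
        "INSERT INTO quotes (ticker, price, volume, qtime) VALUES (?, ?, ?, ?)",
        (ticker, price, volume, qtime),
    )

def quotes_for(conn, ticker):
    """Return (price, volume, qtime) rows for one ticker in time order."""
    rows = conn.execute(
        "SELECT price, volume, qtime FROM quotes WHERE ticker = ? ORDER BY qtime",
        (ticker,),
    )
    return rows.fetchall()

# Made-up sample rows.
store_quote(conn, "XYZ", 10.25, 1200, "2014-01-02 09:30:00")
store_quote(conn, "XYZ", 10.40, 800, "2014-01-02 09:35:00")
store_quote(conn, "ABC", 55.10, 300, "2014-01-02 09:30:00")
print(quotes_for(conn, "XYZ"))
```

The time-ordered rows for one ticker are what a price-to-pitch mapping would consume.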

The web front end is quirky so I will describe it in terms of how you might typically use it:

To look at historical trends, you would need access to historical stock data. To use it as a tool for short term analysis, you would need access to real-time quote data in an API. At the time, both of these cost money.

However, it doesn’t cost money to get recent quotes from Yahoo throughout the day and store them in a database – so that’s the approach I took.

If I were to do this project today, I’d look for a free online source of historical data in machine-readable form, because the historical data provides the most interesting and organic sounds when converted into music. Real-time high-speed trading data would probably make interesting sounds as well, but you still need to pay for the data.