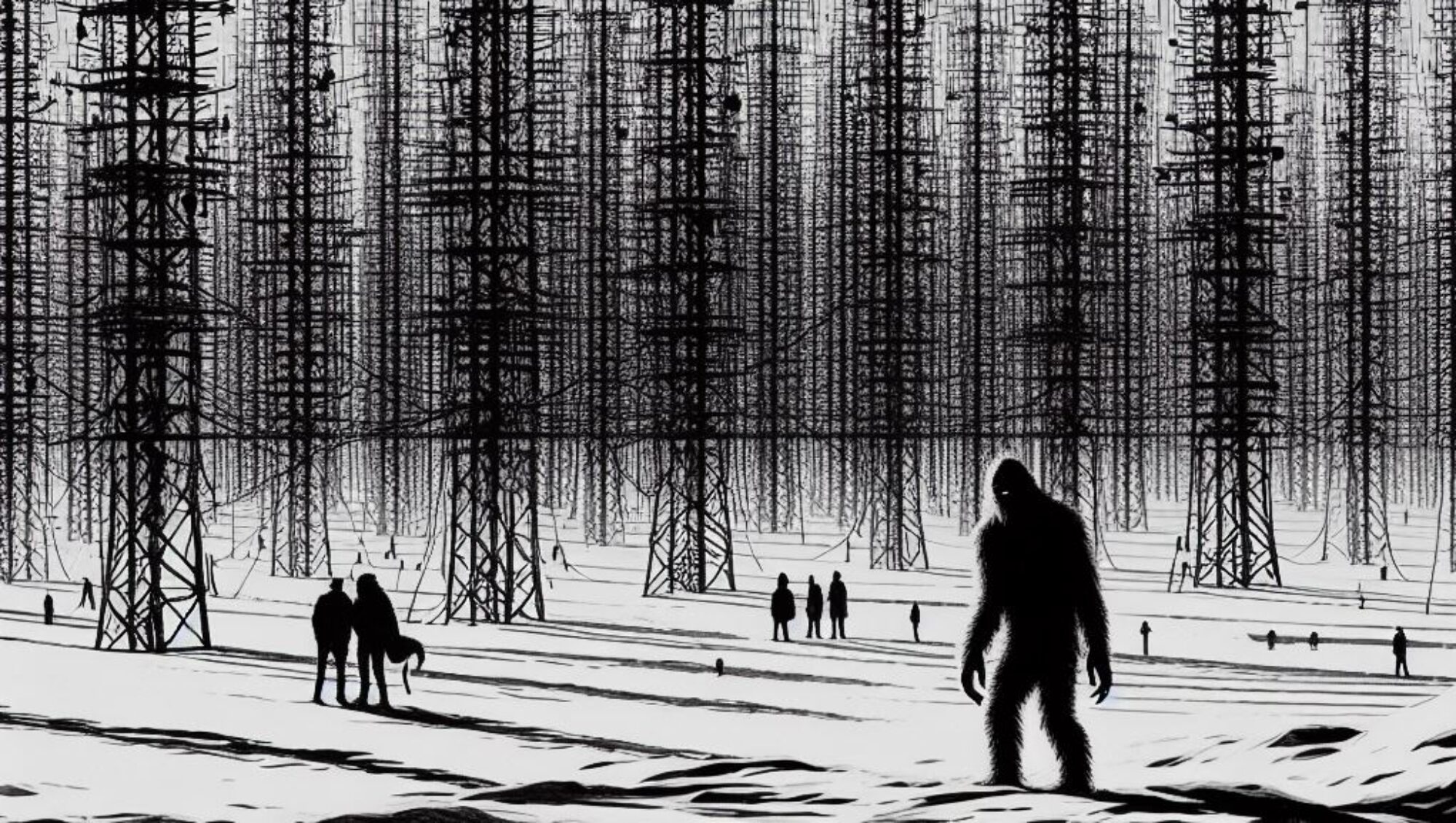

Case study: Adapting and transforming an interactive video performance.

under construction

Video and programming by Naoto Fushimi

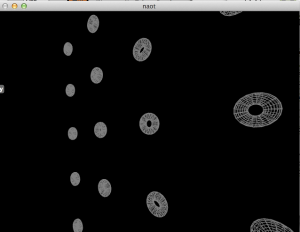

Here is a case study of how you might approach an interactive video project, for example, if you had an opportunity to design a realtime visualization tool for a band.

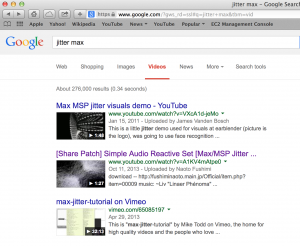

Search

Knowing that cool video things can be designed with Max/MSP/Jitter, I opened up Google web search and typed in: jitter max

Here are the results:

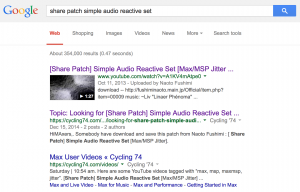

The second entry looked interesting: [Share Patch] Simple Audio Reactive Set [Max/MSP Jitter]. (It is the cool video at the top of this post). There is a link to the Max patch in the video description – but the link is broken. So I entered the title of the video into Google: Share Patch Simple Audio Reactive Set

Here are the results:

The second entry is a link to the Cycling '74 forum: https://cycling74.com/forums/topic/looking-for-share-patch-simple-audio-reactive-set-maxmsp-jitter/ There, in a post by Giorgio, I found a link to a modified version of Naoto Fushimi’s Max patch: http://1cyjknyddcx62agyb002-c74projects.s3.amazonaws.com/files/2014/12/NAOTO.maxpat

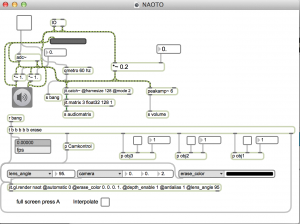

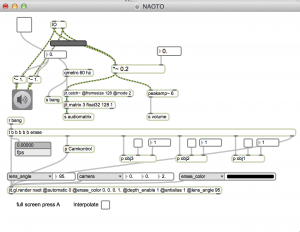

Understanding the Max patch

It appears that audio input is supposed to trigger the interactive graphics. But as soon as the audio starts, it begins to feed back, and there is apparently no way to stop it. I noticed an ezadc~ object as well as an IO abstraction, but couldn’t quite see how they were hooked up because of the maze of segmented, overlapping patch cords.

You can restore normal patch cords by selecting everything (<cmd> a) and then choosing Arrange | remove all segments. After doing this, it appeared that the ezadc~ was redundant, so I deleted it. Now the patch looks like this:

Much easier to understand. In the IO abstraction I loaded an audio file and then was able to get graphic output by toggling on the metro at the top of the patch and using the 3 toggles in the center of the patch.

It works, but how?

peakamp~

jit.catch~

3 openGL subpatches

jit.gl.gridshape

rotatexyz message
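Until these notes are filled in, here is a rough sketch, written in Python rather than Max, of the general audio-reactive idea the items above point to. It assumes that a peak amplitude is measured over each block of audio (which is what peakamp~ reports over an interval) and that the value is then scaled into rotation angles. The function names, the block size, and the mapping to degrees are all hypothetical illustrations and are not taken from Naoto Fushimi's patch.

```python
import math


def peak_amplitudes(samples, block_size):
    """Return the largest absolute sample value in each consecutive block."""
    peaks = []
    for start in range(0, len(samples), block_size):
        block = samples[start:start + block_size]
        peaks.append(max(abs(s) for s in block))
    return peaks


def peak_to_rotation(peak, max_degrees=360.0):
    """Scale a peak value into (x, y, z) rotation angles in degrees.

    Hypothetical mapping: the peak is clamped to 0..1 and all three
    axes receive the same angle.
    """
    clamped = min(max(peak, 0.0), 1.0)
    angle = clamped * max_degrees
    return (angle, angle, angle)


# Test signal (an assumption): a 440 Hz sine that fades in over one second.
sample_rate = 8000
signal = [
    (n / sample_rate) * math.sin(2 * math.pi * 440 * n / sample_rate)
    for n in range(sample_rate)
]

# One peak per 100 ms block, each turned into a set of rotation angles.
peaks = peak_amplitudes(signal, block_size=800)
for peak in peaks:
    print(peak_to_rotation(peak))
```

As the test signal gets louder, the printed angles grow, which is the kind of behavior you would expect when a louder input produces a larger rotation of a shape.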