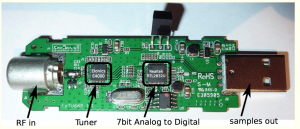

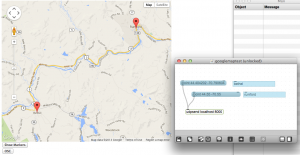

An example of sending data through the air from one computer to another using sound. The carrier frequency, 18 kHz, is just below the ultrasonic range and inaudible to most people. The data protocol is audio frequency-shift keying (AFSK) at 45 bits/second, which uses two tones to encode 1s and 0s. This scheme was developed for radio teletype (RTTY).
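The modulation side can be sketched in a few lines of Python. This is a minimal continuous-phase AFSK modulator, not the code in rtty-encode10.js or the Max patches. It assumes the 18 kHz carrier is the midpoint between the two tones, a 170 Hz shift (common in amateur RTTY), and that the higher tone means 1; all three are assumptions, as are the names `afsk_modulate`, `MARK_HZ` and `SPACE_HZ`.

```python
import math

SAMPLE_RATE = 96_000                  # the 96 kHz rate suggested in the notes
BAUD = 45                             # bits per second
CENTER_HZ = 18_000                    # "carrier" frequency from the text
SHIFT_HZ = 170                        # assumption: standard RTTY shift
MARK_HZ = CENTER_HZ + SHIFT_HZ / 2    # assumption: tone for a 1
SPACE_HZ = CENTER_HZ - SHIFT_HZ / 2   # assumption: tone for a 0


def afsk_modulate(bits, sample_rate=SAMPLE_RATE, baud=BAUD,
                  mark_hz=MARK_HZ, space_hz=SPACE_HZ, amplitude=0.5):
    """Return float samples encoding `bits` as two alternating tones.

    The oscillator phase is carried across bit boundaries (continuous-phase
    FSK), so switching tones does not produce clicks.
    """
    samples = []
    phase = 0.0
    for i, bit in enumerate(bits):
        freq = mark_hz if bit else space_hz
        # Round each bit boundary to the nearest sample so timing does not
        # drift when sample_rate / baud is not a whole number (96000 / 45).
        start = round(i * sample_rate / baud)
        end = round((i + 1) * sample_rate / baud)
        step = 2 * math.pi * freq / sample_rate
        for _ in range(end - start):
            samples.append(amplitude * math.sin(phase))
            phase = (phase + step) % (2 * math.pi)
    return samples


wave = afsk_modulate([1, 0, 1, 1])
print(len(wave), "samples")
```

At 45 bits/second and 96 kHz one bit lasts about 2133.33 samples, which is why the sketch computes boundaries from the bit index instead of using a fixed integer length per bit.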

One computer sends the word “hello” to the other computer every 10 seconds.
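The post doesn't say how rtty-encode10.js turns "hello" into bits. Traditional RTTY uses 5-bit Baudot (ITA2) codes with start and stop bits; purely as an illustration, this sketch uses 8-bit ASCII with one start bit and one stop bit (UART-style "8N1") instead. The function names and the idle preamble are hypothetical.

```python
def frame_byte_8n1(byte):
    """Hypothetical framing: start bit (0), 8 data bits LSB first, stop bit (1)."""
    data = [(byte >> i) & 1 for i in range(8)]
    return [0] + data + [1]


def frame_message(text, idle_bits=8):
    """Frame each ASCII character, preceded by idle 1s so the receiver can settle."""
    bits = [1] * idle_bits
    for ch in text.encode("ascii"):
        bits.extend(frame_byte_8n1(ch))
    return bits


bits = frame_message("hello")
print(len(bits), "bits,", round(len(bits) / 45, 2), "seconds at 45 bit/s")
# prints: 58 bits, 1.29 seconds at 45 bit/s
```

Under these assumptions a 10-second repeat interval leaves plenty of silence between messages.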

The idea came from a paper by Michael Hanspach and Michael Goetz, “On Covert Acoustical Mesh Networks in Air” (http://www.jocm.us/uploadfile/2013/1125/20131125103803901.pdf). In the paper they explain that the concept was borrowed from existing technology for underwater data networks, and they warn that “air-gapping” is vulnerable as a computer security measure.

Actually, after watching the video I realized it's difficult to make exciting videos that feature sounds you can't hear, especially when you are whispering. Well, the pro camera crew should be knocking on the door at any moment.

download

https://github.com/tkzic/max-projects

folder: frequency-shift-keying

patches:

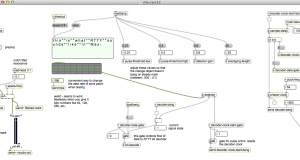

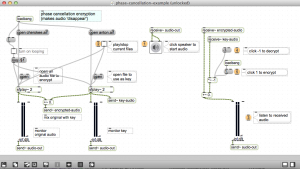

- rtty-recv12.maxpat

- rtty-send12.maxpat

- rtty-encode10.js

- rtty-recv9.js

Notes:

To run the patches:

- In rtty-send12, set the carrier frequency to something like 200

- Then toggle start/stop

- You may want to increase the speed to 32

- On the receive side, clear the text box

- Make sure to set the audio options as described below

In the audio settings, try:

- I/O vector size = 512

- signal vector size = 32

- overdrive and scheduler in audio interrupt enabled

- sampling rate = 96 kHz

An interesting discovery, or maybe a coincidence: bit rates that are powers of 2 seem to work much better than arbitrary speeds. I wonder if it's because the signal vector size is also a power of 2?
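One way to look at the question is to work out, for the settings above, how many samples and signal vectors fit in one bit. The sketch below just does that arithmetic; it doesn't model the patches, and the function name is hypothetical.

```python
from fractions import Fraction

SAMPLE_RATE = 96_000   # sampling rate from the audio settings
SIGNAL_VECTOR = 32     # signal vector size from the audio settings
IO_VECTOR = 512        # I/O vector size from the audio settings


def samples_per_bit(baud, sample_rate=SAMPLE_RATE):
    """Exact number of samples in one bit period, as a fraction."""
    return Fraction(sample_rate, baud)


for baud in (32, 45, 64, 100, 128):
    spb = samples_per_bit(baud)
    print(f"{baud:>3} bit/s: {float(spb):8.2f} samples/bit "
          f"(whole number: {spb.denominator == 1}), "
          f"{float(spb / SIGNAL_VECTOR):7.3f} signal vectors/bit")

# One I/O vector of buffering, expressed as time.
print(f"I/O vector latency: {1000 * IO_VECTOR / SAMPLE_RATE:.2f} ms")
```

At 96 kHz, 32 and 64 bit/s give exactly 3000 and 1500 samples per bit, while 45 bit/s gives 2133.33, so bit boundaries fall between samples. Neither 32 nor 64 bit/s fills a whole number of 32-sample vectors (3000 / 32 = 93.75), whereas 100 bit/s does (960 / 32 = 30). If the effect is real, a whole number of samples per bit may be a likelier factor than vector alignment, but that is speculation; trying a rate like 100 bit/s, which divides 96 kHz evenly without being a power of 2, could help tell the two apart. The I/O vector adds about 5.33 ms of buffering.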

Going from a direct audio connection to a through-the-air connection led to a number of issues with filtering and levels. The latest version of the Max patches has been organized into modules (encoder, transmitter, decoder, phasor-clock, etc.), but they would benefit from some encapsulation.
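The post doesn't describe the phasor-clock module. Assuming it works like Max's `phasor~` (a ramp from 0 to 1 once per bit), a receive-side bit clock might look like the sketch below: each bit is read when the ramp crosses 0.5, in the middle of the bit and away from the tone transition, and a detected tone change resets the ramp to keep the receiver in step. The function name, the mid-bit sampling point, and the edge-reset behavior are all assumptions.

```python
def bit_clock_sample_points(num_samples, baud, sample_rate, edges=()):
    """Return the sample indices at which a decoder would read each bit.

    The ramp is kept as an integer numerator over sample_rate, so the range
    0..sample_rate stands in for phasor~'s 0..1 without floating-point drift.
    `edges` are sample indices where a tone change was detected.
    """
    edge_set = set(edges)
    phase = 0
    points = []
    for n in range(num_samples):
        if n in edge_set:
            phase = 0                    # resync the ramp on a tone change
        previous = phase
        phase += baud
        if 2 * previous < sample_rate <= 2 * phase:
            points.append(n)             # ramp just crossed 0.5: mid-bit
        if phase >= sample_rate:
            phase -= sample_rate         # ramp wraps once per bit
    return points


print(bit_clock_sample_points(9000, 32, 96_000))
# prints: [1499, 4499, 7499]
```

Sampling mid-bit gives the filters and detector the most settling time on either side, which matters when the pulses are smeared by the room and the filters.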

Interestingly, throughput was better above 14 kHz, possibly due to less interference from environmental sounds and less critical filtering. During the video I was able to talk (whisper) without interfering with the data transfer, but if I squeaked a chair or tapped the desk, it would screw up. Also, the built-in mic and speaker on MacBooks have frequency response curves that are all over the place.
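A fixed high-pass stage in front of the detector is one way to keep speech, chair squeaks, and desk taps out of the signal path. This sketch is a standard second-order (biquad) high-pass using the coefficient formulas from Robert Bristow-Johnson's Audio EQ Cookbook; the 14 kHz cutoff and Butterworth Q are illustrative choices, not values taken from the patches.

```python
import math


def highpass_biquad(samples, cutoff_hz, sample_rate, q=1 / math.sqrt(2)):
    """Second-order high-pass filter (Audio EQ Cookbook coefficients)."""
    w0 = 2 * math.pi * cutoff_hz / sample_rate
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    # Normalize every coefficient by a0.
    b0 = (1 + cos_w0) / 2 / a0
    b1 = -(1 + cos_w0) / a0
    b2 = (1 + cos_w0) / 2 / a0
    a1 = -2 * cos_w0 / a0
    a2 = (1 - alpha) / a0
    x1 = x2 = y1 = y2 = 0.0              # filter state (previous inputs/outputs)
    out = []
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out
```

Like any IIR filter, this adds group delay and some ringing, mostly near the cutoff; that is the kind of pulse-shape distortion discussed below, so keeping the cutoff well below the tone pair should limit its effect on the tones.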

The next version will have sharper filters and an automatic level control (compressor). In the detection process there is a difficult interaction between filtering and timing. Up to this point I've been reluctant to use frequency-domain filtering because of the loss of timing resolution; latency is OK, though. The other concern is that we don't want filters that soften or distort the shapes of the pulses.
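The post doesn't say how rtty-recv9.js decides between the two tones. One common alternative, swapped in here only for illustration, is the Goertzel algorithm: measure the energy at each tone over exactly one bit window and pick the stronger. It shows the filtering/timing trade-off concretely: a window of N samples resolves frequencies to roughly sample_rate / N, so at 32 bit/s and 96 kHz (N = 3000) the resolution is about 32 Hz, enough to separate tones 170 Hz apart (an assumed shift), while shorter windows would give finer timing but blur the tones together. Function names and tone frequencies are hypothetical.

```python
import math


def goertzel_power(samples, freq, sample_rate):
    """Signal power at `freq` over `samples`, via the Goertzel recurrence."""
    coeff = 2 * math.cos(2 * math.pi * freq / sample_rate)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def decide_bit(window, mark_hz, space_hz, sample_rate):
    """Return 1 if the mark tone dominates the window, else 0.

    Comparing the two powers with each other, rather than with a fixed
    threshold, makes the decision independent of the overall input level.
    """
    mark = goertzel_power(window, mark_hz, sample_rate)
    space = goertzel_power(window, space_hz, sample_rate)
    return 1 if mark > space else 0
```

Because the decision is a ratio, it sidesteps part of the level problem even without a compressor, although a very weak signal can still lose out to background noise in the same band.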

So one question is how high you can go with the built-in mic and speaker. They are not rated above 20 kHz, but you never know.