Proof of concept

The next step will be to clean up the external so that it allows setting the mode, frequency, and gain, and doesn’t break.

Notes on compiling rtl_sdr in Mac OS – writing Max and Pd Externals.

update 3/31/2014

Today I got the Pd external running, using essentially the same source code as the Max version. There are occasional audio clicks when starting or stopping the radio, but otherwise it seems fine and it runs. wooHOO. More to follow…

update 3/28/2014

I have now set up a skeleton Pd external, called rtlfmz~ (inside the Pd application bundle), which does nothing except compile all of the project files and call a function in rtl_fm. The next step will be to port the actual Max external code and do the conversion.

update 3/38/2014, before working on the Pd version

There is now a fairly solid Max external (rtlfmz~) using a recent version of rtl_fm. There is also a simple Makefile that compiles a local version of rtl_fm3.c in:

tkzic/rtl-sdr-new/rtl-sdr/rtl-fm3

There are very minor changes to rtl_fm.c (for includes), and there is also a local 32-bit version of librtlsdr.a (librtlsdr32.a).

The Max external currently provides both demodulated audio and raw IQ output, but you can only run one copy of the object, because of excessive use of global variables and my uncertainty about how to handle multiple devices, threading, etc. But we’ll go with it and try porting to Pd now.
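For the raw IQ outlets, the device samples have to become floating-point signal vectors. The sketch below assumes the usual rtl-sdr sample format (unsigned 8-bit, interleaved I and Q) and a hypothetical helper name, `iq_u8_to_float`; it is not code from rtl_fm or from the Max or Pd SDKs.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: convert raw rtl-sdr samples (unsigned 8-bit,
 * interleaved I, Q, I, Q, ...) into two float vectors in about [-1, 1],
 * one for the I outlet and one for the Q outlet. */
void iq_u8_to_float(const uint8_t *raw, size_t n_pairs,
                    float *i_out, float *q_out)
{
    assert(raw != NULL && i_out != NULL && q_out != NULL);
    for (size_t k = 0; k < n_pairs; k++) {
        /* 127.5 is the midpoint of the 0..255 range */
        i_out[k] = ((float)raw[2 * k]     - 127.5f) / 127.5f;
        q_out[k] = ((float)raw[2 * k + 1] - 127.5f) / 127.5f;
    }
}
```

Centering on 127.5 rather than 127 or 128 keeps the scaled range symmetric, so 0 maps to -1.0 and 255 maps to 1.0.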

update 2/27 – converted to using new version of rtl_fm

I had been using an older version of rtl_fm.

I renamed the external to rtlfmz~ and am now using the new version in the project, as rtl_fm3.c.

In addition to recopying librtlsdr.a, I also recopied all of the include files and added two new files:

convenience.c convenience.h

There is a different method of threading and reading, which I haven’t looked at yet, but it is now doing what it did before inside Max: reading FM for a few seconds and writing the audio data to a file.

Plan: set up a circular buffer accessible to both the output thread and the Max perform function, then see if we can get it to run for a few seconds.
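A circular buffer like the one in this plan could be sketched as a lock-free single-producer, single-consumer ring in C11. This assumes exactly one writer (the rtl_fm output thread) and one reader (the perform function); the names `ring_t`, `ring_push`, `ring_pop` and the size are hypothetical.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define RING_SIZE 8192 /* hypothetical size; must be a power of two */

typedef struct {
    int16_t data[RING_SIZE];
    atomic_size_t head; /* next write count, advanced only by the producer */
    atomic_size_t tail; /* next read count, advanced only by the consumer */
} ring_t;

void ring_init(ring_t *r)
{
    assert((RING_SIZE & (RING_SIZE - 1)) == 0);
    atomic_init(&r->head, 0);
    atomic_init(&r->tail, 0);
}

/* Producer side (output thread): returns false if the buffer is full. */
bool ring_push(ring_t *r, int16_t sample)
{
    size_t head = atomic_load_explicit(&r->head, memory_order_relaxed);
    size_t tail = atomic_load_explicit(&r->tail, memory_order_acquire);
    if (head - tail == RING_SIZE)
        return false;
    r->data[head & (RING_SIZE - 1)] = sample;
    /* release: the sample is visible before the new head is */
    atomic_store_explicit(&r->head, head + 1, memory_order_release);
    return true;
}

/* Consumer side (perform function): returns false if the buffer is empty. */
bool ring_pop(ring_t *r, int16_t *sample)
{
    size_t tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    size_t head = atomic_load_explicit(&r->head, memory_order_acquire);
    if (head == tail)
        return false;
    *sample = r->data[tail & (RING_SIZE - 1)];
    atomic_store_explicit(&r->tail, tail + 1, memory_order_release);
    return true;
}
```

In the perform function, an empty buffer (`ring_pop()` returning false) would be treated as an underrun and filled with silence, and each `int16_t` scaled to a signal value with something like `s / 32768.0f`. Because neither side ever blocks, the audio thread is not held up by the radio thread.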

The main thing to think about is how to let the processing happen in another thread while returning control to Max. There should also be a way to interrupt processing from Max.

There needs to be a Max instance variable that tells whether the radio is running. Then, when it’s time to stop, you just do all of the cleanup that is at the end of the rtl_fm main() function.
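One way to combine the "is it running" instance variable with a background thread is a flag that the worker polls, sketched here with POSIX threads and C11 atomics. The struct and function names are hypothetical, and the worker loop only counts iterations where the real device reading and demodulation would go.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>
#include <time.h>

/* Hypothetical per-instance state. In a real external this would live in
 * the object struct rather than in globals, so several copies could run. */
typedef struct {
    pthread_t   thread;
    atomic_bool running;  /* the "is the radio running?" instance variable */
    atomic_long blocks;   /* stand-in counter for real demodulation work */
} radio_t;

static void sleep_ms(long ms)
{
    struct timespec ts = { ms / 1000, (ms % 1000) * 1000000L };
    nanosleep(&ts, NULL);
}

static void *radio_worker(void *arg)
{
    radio_t *x = arg;
    while (atomic_load(&x->running)) {
        /* placeholder: read from the device, demodulate, and push
         * samples into the buffer that the perform function reads */
        atomic_fetch_add(&x->blocks, 1);
        sleep_ms(1);
    }
    return NULL;
}

void radio_init(radio_t *x)
{
    atomic_init(&x->running, false);
    atomic_init(&x->blocks, 0);
}

/* Called from a message handler; returns to Max/Pd immediately. */
bool radio_start(radio_t *x)
{
    assert(x != NULL);
    if (atomic_load(&x->running))
        return false;                 /* already running */
    atomic_store(&x->running, true);
    if (pthread_create(&x->thread, NULL, radio_worker, x) != 0) {
        atomic_store(&x->running, false);
        return false;
    }
    return true;
}

void radio_stop(radio_t *x)
{
    if (!atomic_load(&x->running))
        return;
    atomic_store(&x->running, false); /* the worker loop sees this and exits */
    pthread_join(x->thread, NULL);
    /* here: the cleanup from the end of rtl_fm's main(), e.g. closing the device */
}
```

If the worker is blocked inside librtlsdr's `rtlsdr_read_async()`, clearing the flag alone will not wake it. rtl_fm's own signal handler calls `rtlsdr_cancel_async()` for that reason, so a stop method would likely need to do the same before joining the thread.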

update 2/18/2014 – Max external

The rtl_fm code now runs inside the plussztz~ external.

It detects and opens the device, demodulates about 30 seconds of FM, and writes audio at 44.1 kHz to a file, /tmp/radio.bin, which can be played with the play command.

Changes to code included:

update 2/15/2014 – Max external

I have now successfully compiled a test external in Max 6.1.4, named plussztz~, that includes the rtl_fm code. I made 2 changes so far:

original post

Today I was able to write a simple makefile to compile the rtl_fm app using the libusb and librtlsdr dynamic libraries.

Pd requires the i386 (32-bit) architecture for externals, so I then compiled the app using static libraries and the i386 architecture.

libusb-1.0 already had a 32-bit version in /usr/local/lib/libusb32-1.0.a (note that this version also requires linking these frameworks):

-framework foundation -framework iokit

For librtlsdr, I rebuilt using cmake with the following flags:

cmake ../ -DCMAKE_OSX_ARCHITECTURES=i386 -DINSTALL_UDEV_RULES=ON

I did not install the result. See this link for details on building with cmake: http://sdr.osmocom.org/trac/wiki/rtl-sdr

This produced a 32 bit static version of librtlsdr.a that could be used for building the app.

See this Stack Overflow post for more on cmake and architectures: http://stackoverflow.com/questions/5334095/cmake-multiarchitecture-compilation

local files:

The current local version of this test is in: tkzic/rtl-sdr-tz/rtl_fm2/

The default makefile builds the 32 bit architecture.

Next:

Using Google speech API and Pandorabots API

(updated 1/21/2024)

All of these changes are local, for now.

Replace the path to sox with /opt/homebrew/bin/sox in [p call-google-speech].

I also had to write a new Python script to convert XML to JSON. It’s in the subfolder /xml2json/xml4json.py

The program came from this link: https://www.geeksforgeeks.org/python-xml-to-json/

Also, inside [p call-pandorabots], the path to this Python program had to be the explicit full path on the computer. This will vary depending on your Python installation.

Also, note that you must install a dependency with pip:

pip install xmltodict

After all that, I was actually able to have a conversation. Compared to ChatGPT, these bots now seem primitive but lovable. I guess it’s time for a new project.

Also, the voice selection for speech synthesis is still not connected.

(updated 1/21/2021)

This project is an extension to the speech-to-text project: https://reactivemusic.net/?p=4690 You might want to try running that project first to get the Google speech API running.

sox: the sox audio conversion program must be in the computer’s executable file path, for example /usr/bin, or you can rewrite the [sprintf] input to [aka.shell] with the actual path. In our case we installed sox using MacPorts. The executable path is /opt/local/bin/sox, which is built into a message object in the subpatcher [call-google-speech].

get sox from: http://sox.sourceforge.net

Need to fix the selection of voices.

Also, please see these notes about how to modify the patch with your key, until this gets resolved: https://reactivemusic.net/?p=11035

Resonate: exploring possibilities with sounds and spaces

By Mark Durham at “Sound Design with Max”

http://sounddesignwithmax.blogspot.com/2014/01/creative-convolution-part-1-resonate.html

update 6/2014 – Now part of the Internet sensors projects: https://reactivemusic.net/?p=5859

original post

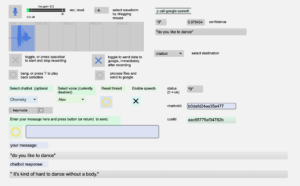

They can talk with each other… sort of.

Last spring I made a project that lets you talk with chatbots using speech recognition and synthesis. https://reactivemusic.net/?p=4710.

Yesterday I managed to get two instances of this program, running on two computers, using two chatbots, to talk with each other, through the air. Technical issues remain (see below). But there were moments of real interaction.

In the original project, a human pressed a button in Max to start and stop recording speech. This has been automated. The program detects and records speech using audio level sensing. The auto-recording sensor turns on a switch when the level hits a threshold, and turns it off after a period of silence. The threshold level and the duration of silence can be adjusted by the user. There is also a feedback gate that shuts off auto-record while the computer is converting speech to text and ‘speaking’ a reply.
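The auto-record logic described above (threshold on, silence timeout off, feedback gate) is a small state machine. Here it is sketched in C rather than as the actual Max patch; the struct, the per-block level input, and the parameter names are hypothetical, and how the level is measured (peak or RMS) is left open.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical state for a level-triggered recording switch. */
typedef struct {
    float threshold;      /* level that starts recording (user adjustable) */
    int   silence_blocks; /* quiet blocks before stopping (user adjustable) */
    int   quiet_count;    /* consecutive quiet blocks while recording */
    bool  recording;
    bool  busy;           /* feedback gate: true while converting or speaking */
} autorec_t;

/* Feed one level measurement per audio block; returns the recording state. */
bool autorec_update(autorec_t *a, float level)
{
    assert(a->silence_blocks > 0);
    if (a->busy) {                 /* never record the computer's own reply */
        a->recording = false;
        a->quiet_count = 0;
        return false;
    }
    if (level >= a->threshold) {   /* loud enough: start, or keep recording */
        a->recording = true;
        a->quiet_count = 0;
    } else if (a->recording && ++a->quiet_count >= a->silence_blocks) {
        a->recording = false;      /* enough silence: stop */
        a->quiet_count = 0;
    }
    return a->recording;
}
```

The silence duration is counted in blocks, so the time it represents is `silence_blocks` times the block length; a longer value tolerates pauses between words at the cost of a slower hand-off to the other bot.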

A Max 4.6 patch that uses Pluggo to create random effect matrices with random parameters and various routing options.

plugv4r6.pat is the patch that works

(in progress)

– not available yet

startup

Choose a data file from the menu in this panel

The data files contain patches – not Max patches, but banks of fx patches that define a configuration of fx saved and named by the user. I haven’t figured out just what is what yet. Select a patch and press the green button. If it worked you will see the patch name change in this text box:

If it doesn’t work, the drop down menu in this box will probably read ‘nothing’

To select a patch, use the drop down menu box, or the number box to the left to make a selection. Then press the green reload button just to the left… (the purple button is for saving the current patch)

After pressing the green button – you should see the fx rack modules reloading from top to bottom – they will turn yellow when loading – and you may see the Pluggo control panel appear.

note: you may need to load a patch twice – there is a bug in the sequence of events for reloading parameters

Channel randomization: There are 4 channels 0-3 which correspond to the individual fx in the rack, starting at the top. The number box selects which channel to randomize.

Global randomization: Randomize all channels
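The difference between channel and global randomization can be sketched in C. This is an illustration, not how the patch or Pluggo stores parameters: the `rack_t` layout, the parameter count per plugin, and the normalized 0..1 range are all assumptions.

```c
#include <assert.h>
#include <stdlib.h>

#define NUM_CHANNELS 4   /* the four fx in the rack, channels 0-3 */
#define NUM_PARAMS   16  /* hypothetical number of parameters per plugin */

typedef struct {
    float params[NUM_CHANNELS][NUM_PARAMS]; /* assumed normalized 0..1 */
} rack_t;

/* Channel randomization: new random values for one channel only. */
void randomize_channel(rack_t *r, int channel)
{
    assert(channel >= 0 && channel < NUM_CHANNELS);
    for (int p = 0; p < NUM_PARAMS; p++)
        r->params[channel][p] = (float)rand() / (float)RAND_MAX;
}

/* Global randomization: every channel in turn. */
void randomize_all(rack_t *r)
{
    for (int c = 0; c < NUM_CHANNELS; c++)
        randomize_channel(r, c);
}
```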

There are various randomization modes that you choose with the message boxes:

Enter the name of an xml file to save the new bank of patches to, and press the red button.

Note: the patch (xml) files are getting modified by the patch, even when they aren’t explicitly saved. Why is this?

Signals can be routed through the effects matrix in a variety of ways using the matrix control object. The radio buttons on the left side of the matrix control select the most common presets

The vertical lines represent inputs in the following order:

The green indicator to the right of the channel meter indicates that the plugin is a Midi device

Midi devices receive Midi input and will block audio input in a serial routing. To bypass any plugin, click the red button to the left of the channel meter:

The green button reloads the plugin with the default preset. The brown button does nothing.

The mixer has 3 sets of stereo controls. From left to right, they are input, wet signal, dry signal. The radio buttons to the right of the sliders allow you to select the current channel – which will bring the plugin control panel for that channel into the foreground.

The 2 drop down menus to the right of the radio buttons select the midi input devices.

The top menu selects the midi controller device. (bcr-2000)

The bottom menu selects the midi note input and performance device.

The letter assignments can be set in the Max midi-setup configuration.

keyboard shortcuts

global randomization params

IO matrix

This music was produced using a Max patch and an electric piano.

More about the Max programming: http://zproject.wikispaces.com/pluggoproject

Pluggo, running in Max 4.6, on a Macbook, inside a VirtualBox instance of Windows XP.

to be continued…

Notes:

update 1/26/2014 – audio input and Max search path

For audio input to work in a Windows XP VirtualBox instance inside Mac OS, the sample rate of the microphone in Mac OS (Utilities/Audio MIDI Setup) must be set to 44100 Hz. I spent hours trying to figure this out. Then I found this post: https://forums.virtualbox.org/viewtopic.php?f=8&t=56628

The strangest thing is that if you activate audio input in Max without setting the above sample rate, you will get no audio output either.

Also, note that switching default sound cards in the host OS can cause the sample rates to reset to 96 kHz, so they have to be set again before using VirtualBox.

The second issue was that the [vst~] object wasn’t finding names passed with the plug message. Turned out to be a simple matter of setting the path to the plugin directory in the Max file preferences.

Almost forgot: I set up a shared drive as the E: drive, which was the original location of the Pluggo project directory. This eliminated the need for updates in the patch.

The Pluggo authorization worked.

I was able to use the Behringer UCA202 (audio device) just by plugging it in, although I couldn’t use any sound cards that required drivers.

http://www.amazon.com/Behringer-Latency-U-Control-UCA202-Interface/dp/B000J0IIEQ

Note: I am running plugv4r6 (the version from 2006)

original post

Instructions for installing Windows XP to run Max 4.6 in VirtualBox on Mac OS 10.8

For Midi devices:

Actually in this context, the word ‘piano’ is way too generous. This is a prototype from October, that uses screen mapping to separate left and right hands. It decodes gestures by looking for high velocity downward hand movements. The gestures are mapped to notes and chords based on X position. There is much de-bouncing and tweaking to get results.
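The gesture decoding described above (left and right screen halves, fast downward movement as a hit, X position to note, de-bouncing) might look roughly like this in C. It is a sketch, not the prototype's code: the frame width, velocity threshold, debounce length, and the 12-zone note mapping are hypothetical values, and chords are left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define SCREEN_WIDTH       640.0f  /* hypothetical camera frame width in pixels */
#define VELOCITY_THRESHOLD 25.0f   /* hypothetical: pixels per frame, downward */
#define DEBOUNCE_FRAMES    8       /* hypothetical: frames to ignore after a hit */
#define NUM_NOTES          12      /* hypothetical: X range split into 12 zones */

/* Tracking state for one hand; y grows downward, as in screen coordinates. */
typedef struct {
    float prev_y;
    bool  has_prev;
    int   cooldown;  /* frames remaining before another hit is accepted */
} hand_t;

/* Screen mapping (assumed): the left half of the frame is the left hand. */
bool is_left_hand(float x)
{
    return x < SCREEN_WIDTH / 2.0f;
}

/* Feed one tracked position per video frame. Returns a note zone
 * 0..NUM_NOTES-1 when a fast downward movement is detected, else -1. */
int hand_update(hand_t *h, float x, float y)
{
    assert(h != NULL);
    int note = -1;
    if (h->cooldown > 0)
        h->cooldown--;
    if (h->has_prev) {
        float vy = y - h->prev_y;  /* positive means moving down */
        if (vy > VELOCITY_THRESHOLD && h->cooldown == 0) {
            note = (int)(x / SCREEN_WIDTH * NUM_NOTES);
            if (note < 0) note = 0;
            if (note >= NUM_NOTES) note = NUM_NOTES - 1;
            h->cooldown = DEBOUNCE_FRAMES;  /* de-bounce: ignore follow-through */
        }
    }
    h->prev_y = y;
    h->has_prev = true;
    return note;
}
```

The cooldown is the de-bouncing step: a single downward stroke usually spans several fast frames, and without it one gesture would trigger several notes.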

Here’s a demonstration which is somewhat painful to listen to, but gets the point across.

local files:

tkzic/max teaching examples/