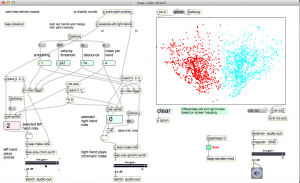

Actually, in this context the word ‘piano’ is far too generous. This is a prototype from October that uses screen mapping to separate the left and right hands. It decodes gestures by looking for high-velocity downward hand movements, then maps those gestures to notes and chords based on their X position. Getting usable results took a lot of debouncing and tweaking.
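The gesture decoding described above can be sketched roughly as follows. This is a hypothetical Python illustration, not a transcription of the Max patches. It makes several assumptions: palm samples already arrive as a timestamp, a horizontal position and a vertical velocity in millimeters; the thresholds, the X range and the C major scale are made up; and debouncing is done with a per-hand refractory period plus a simple re-arm rule. Chord mapping is left out.

```python
from dataclasses import dataclass

# Hypothetical tuning values; the real patch's thresholds are not documented.
STRIKE_VELOCITY = -400.0       # mm/s; more negative means a faster downward move
REFRACTORY_S = 0.25            # minimum seconds between strikes for one hand
X_MIN, X_MAX = -200.0, 200.0   # assumed usable horizontal range in mm
SPLIT_X = 0.0                  # screen mapping: x below this is the left hand

# Illustrative scale: C major from middle C (MIDI note 60).
SCALE = [60, 62, 64, 65, 67, 69, 71, 72]


@dataclass
class PalmSample:
    t: float   # timestamp in seconds
    x: float   # horizontal palm position in mm
    vy: float  # vertical palm velocity in mm/s (negative = moving down)


def x_to_note(x: float) -> int:
    """Map a horizontal position onto one of the notes in SCALE."""
    clamped = min(max(x, X_MIN), X_MAX)
    frac = (clamped - X_MIN) / (X_MAX - X_MIN)
    idx = min(int(frac * len(SCALE)), len(SCALE) - 1)
    return SCALE[idx]


class StrikeDetector:
    """Detects fast downward palm movements, debounced per hand."""

    def __init__(self):
        self.last_strike = {"left": float("-inf"), "right": float("-inf")}
        self.armed = {"left": True, "right": True}

    def feed(self, s: PalmSample):
        hand = "left" if s.x < SPLIT_X else "right"
        if s.vy > STRIKE_VELOCITY / 2:
            # The hand has slowed down or reversed, so allow the next strike.
            self.armed[hand] = True
        if (s.vy <= STRIKE_VELOCITY and self.armed[hand]
                and s.t - self.last_strike[hand] >= REFRACTORY_S):
            # One strike per downward movement, then wait to be re-armed.
            self.armed[hand] = False
            self.last_strike[hand] = s.t
            return hand, x_to_note(s.x)
        return None


samples = [
    PalmSample(0.00, -100, -50),   # left hand, moving slowly: nothing
    PalmSample(0.02, -100, -500),  # left hand strike
    PalmSample(0.04, -100, -550),  # same movement continuing: ignored
    PalmSample(0.10, -100, 10),    # hand comes back up: re-armed
    PalmSample(0.12, -100, -600),  # too soon after last strike: ignored
    PalmSample(0.40, 150, -450),   # right hand strike
]
detector = StrikeDetector()
events = [detector.feed(s) for s in samples]
print(events)
```

The re-arm rule matters as much as the timer. A single downward swipe spans several frames, and without it one gesture would trigger a burst of notes.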

Here’s a demonstration. It’s somewhat painful to listen to, but it gets the point across.

local files:

tkzic/max teaching examples/

- leap-scale-draw5.maxpat

- leap-sender.maxpat