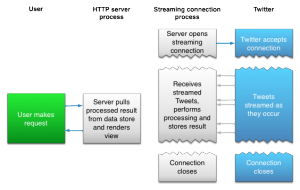

Source: https://dev.twitter.com/docs/streaming-apis

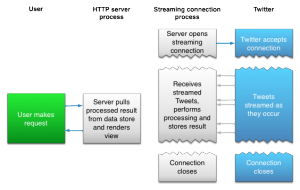

This diagram shows the process of handling a stream, using a data store as an intermediary.
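As a rough illustration of the pattern in the diagram, the sketch below separates a collector, which only reads the stream and saves the raw messages, from a processor that consumes them later. It is a minimal sketch under stated assumptions: an in-memory `queue.Queue` stands in for the data store (in practice this would be a database or message queue), a list of JSON strings stands in for the live HTTP connection, and the names `collect`, `process` and `run_pipeline` are hypothetical.

```python
import json
import queue
import threading


def collect(raw_lines, store):
    """Collector: read raw lines from the stream and store them untouched."""
    for line in raw_lines:
        line = line.strip()
        # Assumption: blank lines are keep-alives and carry no tweet.
        if line:
            store.put(line)
    store.put(None)  # sentinel telling the processor the stream has closed


def process(store, results):
    """Processor: take stored lines at its own pace and parse them."""
    while True:
        line = store.get()
        if line is None:
            break
        tweet = json.loads(line)
        results.append((tweet["id_str"], tweet["text"]))


def run_pipeline(raw_lines):
    """Run collector and processor concurrently, joined only by the store."""
    store = queue.Queue()  # stand-in for the intermediary data store
    results = []
    collector = threading.Thread(target=collect, args=(raw_lines, store))
    processor = threading.Thread(target=process, args=(store, results))
    collector.start()
    processor.start()
    collector.join()
    processor.join()
    return results
```

The point of the split is that the collector does almost no work per message, so slow processing (parsing, cleaning, text-to-speech) does not hold up the streaming connection.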

The JSON response breaks out various components of the tweet, such as hashtags and URLs, but it doesn’t provide a clean version of the text, for example one that could be converted to speech.
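One way to build a clean version yourself is to use the `indices` pair that each entity carries to cut those spans out of `text`. The sketch below is a hypothetical helper, not part of any Twitter library: it drops URLs, @mentions and media links and, by default, keeps each hashtag's word without the `#` so the result reads naturally aloud. It assumes entities never overlap and that `indices` are character offsets into `text` with an exclusive end.

```python
def clean_text(tweet, keep_hashtag_words=True):
    """Return tweet text with entity spans removed (hypothetical helper)."""
    text = tweet["text"]
    entities = tweet.get("entities", {})
    edits = []  # (start, end, replacement) for every entity span

    # Hashtags: optionally keep the bare word, e.g. "#cats" -> "cats".
    for tag in entities.get("hashtags", []):
        start, end = tag["indices"]
        edits.append((start, end, tag["text"] if keep_hashtag_words else ""))

    # URLs, @mentions and media links are dropped entirely.
    for kind in ("urls", "user_mentions", "media"):
        for entity in entities.get(kind, []):
            start, end = entity["indices"]
            edits.append((start, end, ""))

    # Apply edits from the end of the string backwards so that the
    # offsets of earlier entities remain valid after each splice.
    for start, end, replacement in sorted(edits, reverse=True):
        text = text[:start] + replacement + text[end:]

    # Collapse the whitespace left behind by removed spans.
    return " ".join(text.split())
```

Applied to the sample tweet, this turns `Window Seat #photo #cats http://t.co/sf0fHWEX2S` into `Window Seat photo cats`, or `Window Seat` with `keep_hashtag_words=False`.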

Here’s a sample response which shows all the fields:

```json
{
  "created_at": "Sat Feb 23 01:30:55 +0000 2013",
  "id": 305127564538691584,
  "id_str": "305127564538691584",
  "text": "Window Seat #photo #cats http:\/\/t.co\/sf0fHWEX2S",
  "source": "\u003ca href=\"http:\/\/www.echofon.com\/\" rel=\"nofollow\"\u003eEchofon\u003c\/a\u003e",
  "truncated": false,
  "in_reply_to_status_id": null,
  "in_reply_to_status_id_str": null,
  "in_reply_to_user_id": null,
  "in_reply_to_user_id_str": null,
  "in_reply_to_screen_name": null,
  "user": {
    "id": 19079184,
    "id_str": "19079184",
    "name": "theRobot Vegetable",
    "screen_name": "roveg",
    "location": "",
    "url": "http:\/\/south-fork.org\/",
    "description": "choose art, not life",
    "protected": false,
    "followers_count": 975,
    "friends_count": 454,
    "listed_count": 109,
    "created_at": "Fri Jan 16 18:38:11 +0000 2009",
    "favourites_count": 1018,
    "utc_offset": -39600,
    "time_zone": "International Date Line West",
    "geo_enabled": false,
    "verified": false,
    "statuses_count": 10888,
    "lang": "en",
    "contributors_enabled": false,
    "is_translator": false,
    "profile_background_color": "1A1B1F",
    "profile_background_image_url": "http:\/\/a0.twimg.com\/profile_background_images\/6824826\/BlogisattvasEtc.gif",
    "profile_background_image_url_https": "https:\/\/si0.twimg.com\/profile_background_images\/6824826\/BlogisattvasEtc.gif",
    "profile_background_tile": true,
    "profile_image_url": "http:\/\/a0.twimg.com\/profile_images\/266371487\/1roveggreen_normal.gif",
    "profile_image_url_https": "https:\/\/si0.twimg.com\/profile_images\/266371487\/1roveggreen_normal.gif",
    "profile_link_color": "2FC2EF",
    "profile_sidebar_border_color": "181A1E",
    "profile_sidebar_fill_color": "252429",
    "profile_text_color": "666666",
    "profile_use_background_image": false,
    "default_profile": false,
    "default_profile_image": false,
    "following": null,
    "follow_request_sent": null,
    "notifications": null
  },
  "geo": null,
  "coordinates": null,
  "place": null,
  "contributors": null,
  "retweet_count": 0,
  "entities": {
    "hashtags": [{
      "text": "photo",
      "indices": [12, 18]
    }, {
      "text": "cats",
      "indices": [19, 24]
    }],
    "urls": [{
      "url": "http:\/\/t.co\/sf0fHWEX2S",
      "expanded_url": "http:\/\/middle-fork.org\/?p=186",
      "display_url": "middle-fork.org\/?p=186",
      "indices": [25, 47]
    }],
    "user_mentions": []
  },
  "favorited": false,
  "retweeted": false,
  "possibly_sensitive": false,
  "filter_level": "medium"
}
```
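To see how the `indices` pairs line up with the text, the sketch below loads a trimmed copy of the sample (only `text` and `entities`) with Python's standard `json` module and slices `text` with each pair. Each pair is a start offset and an exclusive end offset, so `text[12:18]` is `#photo`. The helper name `entity_slices` is hypothetical.

```python
import json

# Trimmed copy of the sample response: only the fields this check needs.
# The raw string keeps the JSON "\/" escapes, which json.loads decodes to "/".
SAMPLE = r'''{
  "text": "Window Seat #photo #cats http:\/\/t.co\/sf0fHWEX2S",
  "entities": {
    "hashtags": [{"text": "photo", "indices": [12, 18]},
                 {"text": "cats", "indices": [19, 24]}],
    "urls": [{"url": "http:\/\/t.co\/sf0fHWEX2S",
              "expanded_url": "http:\/\/middle-fork.org\/?p=186",
              "indices": [25, 47]}],
    "user_mentions": []
  }
}'''


def entity_slices(tweet):
    """Return (entity kind, substring of text) for every entity in the tweet."""
    text = tweet["text"]
    slices = []
    for kind, items in tweet["entities"].items():
        for item in items:
            start, end = item["indices"]  # end offset is exclusive
            slices.append((kind, text[start:end]))
    return slices


tweet = json.loads(SAMPLE)
for kind, fragment in entity_slices(tweet):
    print(kind, fragment)
```

Note that the shortened `url` is what appears inside `text`; the `expanded_url` field holds the real destination if you want to show or follow the link instead.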