The Echo Nest API provides detailed, segment-level audio analysis.

http://developer.echonest.com/docs/v4/_static/AnalyzeDocumentation.pdf

What if you used that data to reconstruct music by driving a sequencer in Max? The analysis is a series of time-based quanta called segments. Each segment provides information about timing, timbre, and pitch, roughly corresponding to rhythm, harmony, and melody.
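To make the segment structure concrete, here's a minimal Ruby sketch that pulls segments out of an analysis document and finds each segment's strongest pitch class. It assumes the JSON layout from the Analyzer documentation: a top-level `segments` array whose entries carry `start`, `duration`, `loudness_max`, a 12-element `pitches` (chroma) vector and a 12-element `timbre` vector. The sample data is made up, and `Segment`, `parse_segments` and `dominant_pitch_class` are hypothetical names, not code from echonest-synth2.rb.

```ruby
require "json"

# Hypothetical container for the segment fields this sketch uses.
Segment = Struct.new(:start, :duration, :loudness_max, :pitches, :timbre)

PITCH_NAMES = %w[C C# D D# E F F# G G# A A# B].freeze

# Parse the "segments" array of an analysis JSON document (layout assumed).
def parse_segments(json_text)
  JSON.parse(json_text).fetch("segments").map do |s|
    Segment.new(s["start"], s["duration"], s["loudness_max"], s["pitches"], s["timbre"])
  end
end

# The strongest chroma bin is the segment's dominant pitch class (0 = C).
def dominant_pitch_class(segment)
  segment.pitches.each_with_index.max_by { |value, _index| value }.last
end

# Made-up two-segment example, not real analysis output.
sample = {
  "segments" => [
    { "start" => 0.0, "duration" => 0.25, "loudness_max" => -12.5,
      "pitches" => [0.1, 0.0, 0.2, 0.0, 1.0, 0.1, 0.0, 0.3, 0.0, 0.1, 0.0, 0.2],
      "timbre" => Array.new(12, 0.0) },
    { "start" => 0.25, "duration" => 0.5, "loudness_max" => -8.0,
      "pitches" => [0.2, 0.0, 0.1, 0.0, 0.1, 0.0, 0.0, 1.0, 0.0, 0.2, 0.0, 0.1],
      "timbre" => Array.new(12, 0.0) }
  ]
}.to_json

segments = parse_segments(sample)
segments.each do |seg|
  name = PITCH_NAMES[dominant_pitch_class(seg)]
  puts format("%.2fs  %-2s  %.1f dB", seg.start, name, seg.loudness_max)
end
```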

download

https://github.com/tkzic/internet-sensors

folder: echo-nest

files

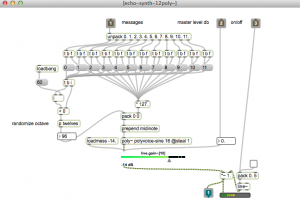

main Max patch

- echonest-synth4.maxpat

abstractions and other files

- polyvoice-sine.maxpat

- polyvoice2.maxpat

ruby server

- echonest-synth2.rb

authentication

You will need to sign up for a developer account at The Echo Nest and get an API key: https://developer.echonest.com

Edit the Ruby server file echonest-synth2.rb, replacing the API key with your own API key from The Echo Nest.
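For reference, here's roughly how an API key ends up in a request. This is a sketch, not the actual code in echonest-synth2.rb: it assumes the v4 `song/search` method with `artist`, `title` and `bucket=audio_summary` parameters, and the `ECHONEST_API_KEY` environment variable is a hypothetical alternative to editing the key into the file.

```ruby
require "uri"

# Assumed endpoint for the Echo Nest v4 song search method.
SEARCH_URL = "http://developer.echonest.com/api/v4/song/search"

# Hypothetical: read the key from the environment, falling back to a placeholder.
API_KEY = ENV.fetch("ECHONEST_API_KEY", "YOUR_API_KEY")

# Build a search URL that asks for the audio_summary bucket, which is
# where a link to the full analysis is expected to live.
def search_url(artist, title, api_key: API_KEY)
  query = URI.encode_www_form(
    api_key: api_key,
    artist: artist,
    title: title,
    bucket: "audio_summary",
    results: 1
  )
  "#{SEARCH_URL}?#{query}"
end

puts search_url("Artist Name", "Song Title")
```

Reading the key from the environment keeps it out of a file you might push to GitHub; editing it into the server file, as described above, works just as well.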

installing ruby gems

Install the following Ruby gems (from Terminal):

gem install patron

gem install osc-ruby

gem install json

gem install uri

instructions

1. In Terminal, run the Ruby server:

./echonest-synth2.rb

2. Open the Max patch: echonest-synth4.maxpat and turn on the audio.

3. Enter an Artist and Song title for analysis, in the text boxes. Then press the greet buttons for title and artist. Then press the /analyze button. If it works you will get prompts from the terminal window, the Max window, and you should see the time in seconds in upper right corner of the patch.

If there are problems with the analysis, it’s most likely due to one of the following:

- artist or title spelled incorrectly

- song is not available

- song is too long

- API is busy
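The failure cases above can be told apart before anything gets sent to Max. A hedged sketch: it assumes the v4 response envelope (`response.status.code`, where 0 means success, and a `response.songs` list whose entries carry an `audio_summary` with `duration` and `analysis_url`). `MAX_SECONDS` is a hypothetical placeholder, not a documented Echo Nest limit.

```ruby
require "json"

# Hypothetical length cutoff; not a documented Echo Nest limit.
MAX_SECONDS = 600

# Returns [:ok, analysis_url] or [:error, reason] for a song/search
# response body. The envelope layout is assumed, not verified.
def check_search_response(body, max_seconds: MAX_SECONDS)
  response = JSON.parse(body).fetch("response", {})
  status = response.fetch("status", {})
  # Non-zero status codes cover cases such as a bad key or a rate limit.
  return [:error, "API error #{status['code']}: #{status['message']}"] unless status["code"] == 0

  songs = response.fetch("songs", [])
  return [:error, "no match: check artist/title spelling"] if songs.empty?

  summary = songs.first.fetch("audio_summary", {})
  url = summary["analysis_url"]
  return [:error, "no analysis available for this song"] if url.nil? || url.empty?

  duration = summary["duration"].to_f
  return [:error, "song is too long (#{duration.round}s)"] if duration > max_seconds

  [:ok, url]
end

ok_body = {
  "response" => {
    "status" => { "code" => 0, "message" => "Success" },
    "songs" => [
      { "audio_summary" => { "duration" => 240.0, "analysis_url" => "http://example.com/a.json" } }
    ]
  }
}.to_json

p check_search_response(ok_body)
```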

4. Press one of the preset buttons to turn on the tracks.

5. Now you can play the track by pressing the /play button.

The mixer channels, from left to right, are:

- bass

- synth (left)

- synth (right)

- random octave synth

- timbre synth

- master volume

- gain trim

- HPF cutoff frequency

programming notes

The best results come from slow, abstract material, like the Miles (Wayne Shorter) piece above. The bass isn’t really working, and the lines all sound pretty much the same. I’m thinking it might be possible to derive a bass line from the pitch data by doing a chordal analysis of the analysis.
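One way to try the chordal analysis idea, sketched under assumptions: sum the 12-bin pitch (chroma) vectors over a fixed time window and pick the major or minor triad whose three pitch classes carry the most energy; the root of that triad would be the bass note for the window. This is plain triad template matching, a swapped-in technique rather than anything the patch currently does, and the 2-second window and helper names are hypothetical.

```ruby
# Hypothetical triad templates, as semitone offsets from the root.
TEMPLATES = { major: [0, 4, 7], minor: [0, 3, 7] }.freeze
NOTE_NAMES = %w[C C# D D# E F F# G G# A A# B].freeze

# segments: hashes with "start" (seconds) and "pitches" (12 floats).
# Returns [[window_start_seconds, root_pitch_class, quality], ...].
def chord_guesses(segments, window: 2.0)
  segments.group_by { |s| (s["start"] / window).floor }.sort_by(&:first).map do |index, segs|
    # Accumulate chroma energy across all segments in this window.
    chroma = Array.new(12, 0.0)
    segs.each { |s| s["pitches"].each_with_index { |v, i| chroma[i] += v } }
    # Score every (root, quality) pair and keep the strongest triad.
    best = (0..11).to_a.product(TEMPLATES.keys).max_by do |root, quality|
      TEMPLATES[quality].sum { |interval| chroma[(root + interval) % 12] }
    end
    [index * window, *best]
  end
end

# Build a made-up chroma vector with energy only on the given pitch classes.
def chroma_of(*pcs)
  Array.new(12, 0.0).tap { |c| pcs.each { |pc| c[pc] = 1.0 } }
end

sample = [
  { "start" => 0.0, "pitches" => chroma_of(0, 4) },
  { "start" => 1.0, "pitches" => chroma_of(7) },
  { "start" => 2.0, "pitches" => chroma_of(9, 0) },
  { "start" => 3.0, "pitches" => chroma_of(4) }
]

chord_guesses(sample).each do |t, root, quality|
  puts "#{t}s: #{NOTE_NAMES[root]} #{quality}, bass plays #{NOTE_NAMES[root]}"
end
```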

Here are screenshots of the Max sub-patches (the main screen is in the video above)

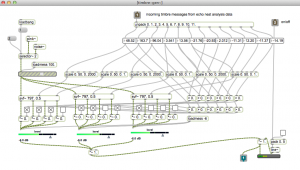

Timbre (percussion synth) – plays filtered noise:

Random octave synth:

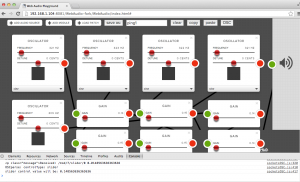

Here’s a Coltrane piece, using roughly the same configuration but with sine oscillators for everything:

There are issues with clicks on the envelopes, and the patch is kind of a mess, but it plays!

Several modules respond to the API data:

- tone synthesizer (pitch data)

- harmonic (random octave) synthesizer (pitch data)

- filtered noise (timbre data)

- bass synthesizer (key and mode data)

- envelope generator (loudness data)
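A sketch of how one segment might feed the pitch and loudness modules, under assumptions: the tone synth plays the strongest chroma bin in a fixed octave, the random octave synth shifts that note down, up, or not at all at random, and the envelope peak comes from `loudness_max` converted from dB to linear amplitude (10^(dB/20)). The octave choices and `segment_to_voices` are hypothetical; the patch itself may map things differently.

```ruby
# MIDI note number to frequency in Hz (A4 = MIDI 69 = 440 Hz).
def mtof(midi)
  440.0 * 2**((midi - 69) / 12.0)
end

# Decibels (as in loudness_max) to linear amplitude.
def db_to_amp(db)
  10**(db / 20.0)
end

# Hypothetical mapping of one segment onto the tone, random octave and
# envelope modules. base is the MIDI note for C in the chosen octave.
def segment_to_voices(segment, base: 60, rng: Random.new)
  pitch_class = segment["pitches"].each_with_index.max_by { |value, _i| value }.last
  tone_note = base + pitch_class
  octave_note = tone_note + 12 * rng.rand(-1..1) # octave down, same, or up
  {
    tone_hz: mtof(tone_note),
    octave_hz: mtof(octave_note),
    peak_amp: db_to_amp(segment["loudness_max"]),
    seconds: segment["duration"]
  }
end

seg = { "pitches" => [0.2, 0, 0, 0, 1.0, 0, 0, 0.5, 0, 0, 0, 0],
        "loudness_max" => -20.0, "duration" => 0.3 }
p segment_to_voices(seg, rng: Random.new(42))
```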

Since the key/mode data is global for the track, bass notes are educated guesses. This method doesn’t work for material with strong root motion or a variety of harmonic content. It’s essentially the same approach I use when asked to play bass at an open mic night.
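The open mic approach can be written down as a heuristic. This sketch assumes the Echo Nest convention of `key` as a pitch class (0 = C) and `mode` as 1 for major, 0 for minor. It builds the diatonic scale (natural minor for minor keys) and picks the root of whichever of the I, IV or V triads contains the segment's strongest pitch class, falling back to the tonic. `bass_guess` is hypothetical and not necessarily what the bass synth does.

```ruby
MAJOR = [0, 2, 4, 5, 7, 9, 11].freeze
MINOR = [0, 2, 3, 5, 7, 8, 10].freeze # natural minor

# key: 0-11 (C = 0); mode: 1 = major, 0 = minor (assumed Echo Nest convention).
def scale_pcs(key, mode)
  (mode == 1 ? MAJOR : MINOR).map { |interval| (key + interval) % 12 }
end

# Pitch classes of the triad built on a 0-based scale degree.
def triad(scale, degree)
  [0, 2, 4].map { |step| scale[(degree + step) % 7] }
end

# Guess a bass pitch class: try the I, IV and V triads in that order,
# and default to the tonic if none contains the dominant pitch class.
def bass_guess(key, mode, dominant_pc)
  scale = scale_pcs(key, mode)
  [0, 3, 4].each do |degree|
    return scale[degree] if triad(scale, degree).include?(dominant_pc)
  end
  scale[0]
end

# C major: a segment centered on A suggests the IV chord, so the bass plays F.
puts bass_guess(0, 1, 9)
```

Because the key never changes, anything outside I, IV and V lands on the tonic, which is exactly the weakness described above for tunes with strong root motion.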

The envelopes click at times. This may be due to the relaxed approach to timing (that is, none at all). If the clicks don’t go away once timing is corrected, they might be cleaned up by adding a few milliseconds to the release time, or by looking ahead to make sure the edges of segments line up.
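One way to apply the extra-milliseconds idea, sketched with assumptions: turn each segment into target/ramp-time pairs of the kind Max's [line~] takes, using the segment's `loudness_max_time` (seconds from segment start to peak) for the attack and `loudness_max` for the peak level, and floor both ramps at a minimum so no edge is ever instantaneous. `MIN_RAMP_MS` = 5 is a hypothetical starting value to tune by ear.

```ruby
MIN_RAMP_MS = 5.0 # hypothetical floor on any ramp, in milliseconds

# Decibels to linear amplitude.
def db_to_amp(db)
  10**(db / 20.0)
end

# Build [target_amplitude, ramp_ms] pairs for one segment: rise to the
# peak, then fall to silence over the rest of the segment. Both ramps are
# floored, so a very short segment may spill slightly past its end.
def segment_envelope(segment, min_ramp_ms: MIN_RAMP_MS)
  duration_ms = segment["duration"] * 1000.0
  attack_ms = [segment["loudness_max_time"] * 1000.0, min_ramp_ms].max
  release_ms = [duration_ms - attack_ms, min_ramp_ms].max
  [
    [db_to_amp(segment["loudness_max"]), attack_ms],
    [0.0, release_ms]
  ]
end

seg = { "duration" => 0.2, "loudness_max_time" => 0.0, "loudness_max" => -6.0 }
# Flattened, this is the target/time list that would go to [line~].
p segment_envelope(seg).flatten
```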

[update] Using the Max [poly~] object cleared up the clicking and distortion issues.

Timbre data drives a random noise filter machine. I just patched something together and it sounded responsive, but it’s kind of hissy. An LPF might tame the hiss, though it could also make it less interesting.
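A less random way to drive the noise filter, as a sketch: the Analyzer documentation describes the second timbre coefficient as emphasizing brightness, so it could set the filter cutoff directly instead of the patch's random filter machine. The -100 to 100 input range and the 200 Hz to 8 kHz output range are assumptions to be tuned against real data; the mapping is exponential so equal changes in brightness give equal frequency ratios.

```ruby
# Assumed input range for timbre coefficients (check against real data).
TIMBRE_MIN = -100.0
TIMBRE_MAX = 100.0
# Hypothetical output range for the noise filter cutoff.
LOW_HZ = 200.0
HIGH_HZ = 8000.0

# Normalize a coefficient to 0..1, clamping values outside the range.
def normalize(value)
  ((value - TIMBRE_MIN) / (TIMBRE_MAX - TIMBRE_MIN)).clamp(0.0, 1.0)
end

# Brightness (timbre[1]) to cutoff in Hz, mapped exponentially.
def brightness_to_cutoff(timbre)
  LOW_HZ * (HIGH_HZ / LOW_HZ)**normalize(timbre[1])
end

timbre = Array.new(12, 0.0)
[-100.0, 0.0, 50.0, 250.0].each do |brightness|
  timbre[1] = brightness
  puts format("brightness %6.1f -> cutoff %7.1f Hz", brightness, brightness_to_cutoff(timbre))
end
```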

I haven’t used any of the beat, tatum, or section data yet. The section data should be useful for quashing monotony.
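Sections could break up the monotony by switching presets at section boundaries. A minimal sketch, assuming each entry in the analysis `sections` array has `start` and `duration` in seconds; the preset names and the round-robin choice are hypothetical.

```ruby
PRESETS = [:sparse, :full, :noisy].freeze # hypothetical preset names

# Index of the section containing `time` (seconds), or nil if none does.
def section_index(sections, time)
  sections.index { |s| time >= s["start"] && time < s["start"] + s["duration"] }
end

# Cycle through the presets one section at a time; outside any section,
# fall back to the first preset.
def preset_for(sections, time)
  i = section_index(sections, time)
  i ? PRESETS[i % PRESETS.length] : PRESETS.first
end

sections = [
  { "start" => 0.0, "duration" => 10.0 },
  { "start" => 10.0, "duration" => 20.0 },
  { "start" => 30.0, "duration" => 15.0 },
  { "start" => 45.0, "duration" => 10.0 }
]
[5, 12, 31, 50].each { |t| puts "#{t}s -> #{preset_for(sections, t)}" }
```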

another update – 4/2013

I tried to turn this into a Max for Live device, so that the pitch data would be played by a MIDI (software) instrument. No go. The velocity data gets interpreted in mysterious ways, plus each instrument has its own envelope, which interferes with the segment envelopes. Need to think this through. One idea would be to write a device that uses Echo Nest beat data to set warp markers in Live. It would be an amazing auto-warp function for any song. Analysis wars: Berlin vs. Somerville.
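The warp marker idea boils down to pairing detected beat times with beat counts. This sketch assumes the analysis `beats` array entries carry `start` (seconds) and `confidence`; it keeps every fourth beat that clears a confidence threshold and returns [seconds, beat] pairs. It only computes the pairs; setting them in Live is a separate, unexplored step, and `warp_markers` with its thresholds is hypothetical.

```ruby
# beats: hashes with "start" (seconds) and "confidence" (0..1).
# Returns [[seconds, beat_number], ...]. Beat numbers come from each beat's
# position in the full list, so dropping weak beats doesn't shift the grid.
def warp_markers(beats, min_confidence: 0.5, every: 4)
  beats.each_with_index
       .select { |beat, i| (i % every).zero? && beat["confidence"] >= min_confidence }
       .map { |beat, i| [beat["start"], i.to_f] }
end

# Made-up beats at roughly 120 BPM; beat 4 has low confidence.
beats = (0...9).map do |i|
  { "start" => 0.5 * i + 0.1, "confidence" => (i == 4 ? 0.2 : 0.9) }
end

warp_markers(beats).each { |sec, beat| puts format("%.2fs -> beat %.0f", sec, beat) }
```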