Analog warmth

The Sound Of Tubes, Tape & Transformers.

By Hugh Robjohns at Sound On Sound

http://www.soundonsound.com/sos/feb10/articles/analoguewarmth.htm

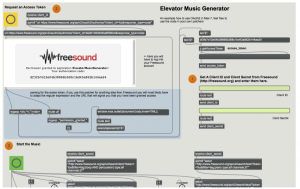

Elevator music generator

By Stefan Brunner at Cycling 74

https://cycling74.com/2014/12/19/music-hack-for-max-7-elevator-music-generator/

Vocaloid tutorial

by soundwavescience

New musical instruments

A presentation for Berklee BTOT 2015 http://www.berklee.edu/faculty

Around the year 1700, several startup ventures developed prototypes of machines with thousands of moving parts. After 30 years of engineering, competition, and refinement, the result was a device remarkably similar to the modern piano.

What are the musical instruments of the future being designed right now?

- new composition tools,

- reactive music,

- connecting things,

- sensors,

- voices,

- brains

Notes:

predictions?

Ray Kurzweil’s future predictions on a timeline: http://imgur.com/quKXllo (The Singularity will happen in 2045)

In 1965 researcher Herbert Simon said: “Machines will be capable, within twenty years, of doing any work a man can do”. Marvin Minsky added his own prediction: “Within a generation … the problem of creating ‘artificial intelligence’ will substantially be solved.” https://forums.opensuse.org/showthread.php/390217-Will-computers-or-machines-ever-become-self-aware-or-evolve/page2

Patterns

Are there patterns in the ways that artists adapt technology?

For example, the Hammond organ borrowed ideas developed for radios. Recorded music is produced with computers that were originally designed as business machines.

Instead of looking forward to predict future music, let’s look backward and ask, “What technology needs to happen to make musical instruments possible?” The piano relies upon a single-escapement (1710) and later a double-escapement (1821). Real time pitch shifting depends on Fourier transforms (1822) and fast computers (~1980).

Artists often find new (unintended) uses for tools, like the printing press.

New pianos

The piano is still in development. In December 2014, Eren Başbuğ composed and performed music on the Roli Seaboard – a piano keyboard made of 3 dimensional sensing foam:

Here is Keith McMillen’s QuNexus keyboard (with Polyphonic aftertouch):

https://www.youtube.com/watch?v=bry_62fVB1E

Experiments

Here are tools that might lead to new ways of making music. They won’t replace old ways. Singing has outlasted every other kind of music.

These ideas represent a combination of engineering and art. Engineers need artists. Artists need engineers. Interesting things happen at the confluence of streams.

Analysis, re-synthesis, transformation

Computers can analyze the audio spectrum in real time. Sounds can be transformed and re-synthesized with near zero latency.

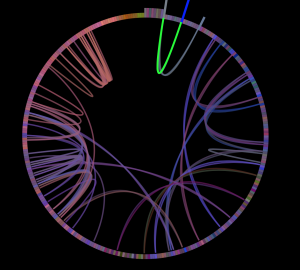

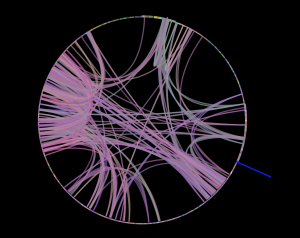

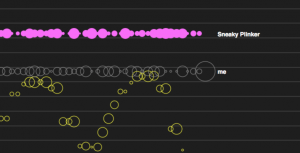

Infinite Jukebox

Finding alternate routes through a song.

by Paul Lamere at the Echonest

Echonest has compiled data on over 14 million songs. This is an example of machine learning and pattern matching applied to music.

http://labs.echonest.com/Uploader/index.html

(Try examples: “Karma Police”, or search for: “Albert Ayler”)

- Analyze your own music: https://reactivemusic.net/?p=18026

Remixing a remix

“Mindblowing Six Song Country Mashup”: https://www.youtube.com/watch?v=FY8SwIvxj8o (start at 0:40)

Local file: Max teaching examples/new-country-mashup.mp3

More about Echonest

- Music Machinery by Paul Lamere: http://musicmachinery.com

- Echonest segment analysis player: https://reactivemusic.net/?p=6296

Feature detection

Looking at music under a microscope.

Removing music from speech

First you have to separate them.

SMS-tools

by Xavier Serra and UPF

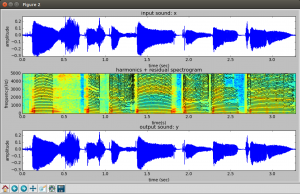

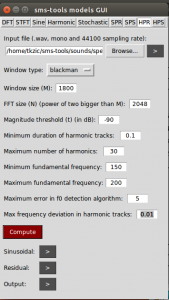

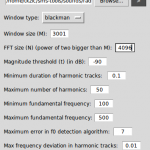

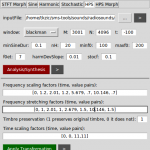

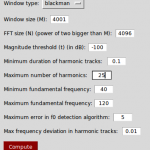

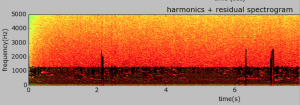

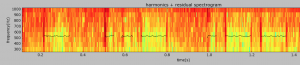

Harmonic Model Plus Residual (HPR) – Build a spectrogram using STFT, then identify where there is strong correlation to a tonal harmonic structure (music). This is the harmonic model of the sound. Subtract it from the original spectrogram to get the residual (noise).

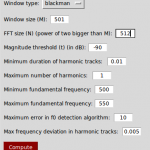

Settings for above example:

- Window size: 1800 (SR / f0 * lobeWidth) 44100 / 200 * 8 = 1764

- FFT size: 2048

- Mag threshold: -90

- Max harmonics: 30

- f0 min: 150

- f0 max: 200
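The window-size arithmetic in the first setting can be checked with a few lines of Python. This is only a sketch: the function name `analysis_window_size` is made up for illustration, and the lobe width of 8 is taken directly from the setting above.

```python
def analysis_window_size(sample_rate, f0, lobe_width):
    """Window length in samples: lobe_width periods of the fundamental f0."""
    return sample_rate / f0 * lobe_width


# Values from the settings list: SR = 44100, f0 = 200, lobeWidth = 8
print(analysis_window_size(44100, 200, 8))  # 1764.0
```

The settings round this result up to 1800. With the same rule, a lower fundamental needs a longer window: at the f0 min of 150 Hz it gives 2352 samples.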

Many kinds of features

- Low level features: harmonicity, amplitude, fundamental frequency

- high level features: mood, genre, danceability

Examples of feature detection

- Acoustic Brainz: https://reactivemusic.net/?p=17641 (typical analysis page)

- Freesound (vast library of sounds): https://www.freesound.org – look at “similar sounds”

- Essentia (open source feature detection tools) https://github.com/MTG/essentia

- “What We Watch” – Ethan Zuckerman https://reactivemusic.net/?p=10987

Music information retrieval

Finding the drop

“Detecting Drops in EDM” – by Karthik Yadati, Martha Larson, Cynthia C. S. Liem, Alan Hanjalic at Delft University of Technology (2014) https://reactivemusic.net/?p=17711

Polyphonic audio editing

Blurring the distinction between recorded and written music.

Melodyne

by Celemony

http://www.celemony.com/en/start

A minor version of “Bohemian Rhapsody”: http://www.youtube.com/watch?v=voca1OyQdKk

Music recognition

“How Shazam Works” by Farhad Manjoo at Slate: https://reactivemusic.net/?p=12712, “About 3 datapoints per second, per song.”

- Music fingerprinting: https://musicbrainz.org/doc/Fingerprinting

- Humans being computers. Mystery sounds. (Local file: Desktop/mystery sounds)

- Is it more difficult to build a robot that plays or one that listens?

Sonographic sound processing

Transforming music through pictures.

by Tadej Droljc

https://reactivemusic.net/?p=16887

(Example of 3d speech processing at 4:12)

local file: SSP-dissertation/4 – Max/MSP/Jitter Patch of PV With Spectrogram as a Spectral Data Storage and User Interface/basic_patch.maxpat

Try recording a short passage, then set bound mode to 4, and click autorotate

Spectral scanning in Ableton Live:

http://youtu.be/r-ZpwGgkGFI

Web Audio

Web browser is the new black

Noteflight

by Joe Berkowitz

http://www.noteflight.com/login

Plink

by Dinahmoe

http://labs.dinahmoe.com/plink/

Can you jam over the internet?

What is the speed of electricity? 70-80 ms is the best round trip latency (via fiber) from the U.S. east to west coast. If you were jamming over the internet with someone on the opposite coast it might be like being 100 ft away from them in a field (sound travels about 1100 feet/second in air).
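The field comparison can be worked out directly. The sketch below converts a network latency into the distance sound would cover in air in the same time, using the 1100 feet/second figure from the text. The function name is hypothetical.

```python
SPEED_OF_SOUND_FT_PER_S = 1100  # figure quoted in the text


def equivalent_distance_ft(latency_ms):
    """Distance sound travels in air during latency_ms milliseconds."""
    return SPEED_OF_SOUND_FT_PER_S * latency_ms / 1000


for latency_ms in (70, 80):
    print(latency_ms, "ms is like", equivalent_distance_ft(latency_ms), "ft")
```

A 70-80 ms round trip works out to 77-88 ft, which is in the neighborhood of the 100 ft comparison.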

Global communal experiences – Bill McKibben – 1990 “The Age of Missing Information”

More about Web Audio

- A quick Web Audio introduction: https://reactivemusic.net/?p=17600

- Gibber by Charlie Roberts http://gibber.mat.ucsb.edu/

Conversation with robots

Computers finding meaning

The Google speech API

https://reactivemusic.net/?p=9834

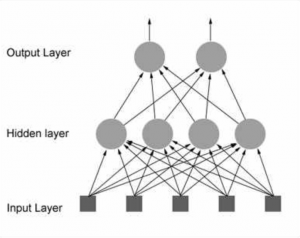

The Google speech API uses neural networks, statistics, and large quantities of data.

Microsoft: real-time translation

- German/English http://digg.com/video/heres-microsoft-demoing-their-breakthrough-in-real-time-translated-conversation

- Skype translator – Spanish/English: http://www.skype.com/en/translator-preview/

Reverse entropy

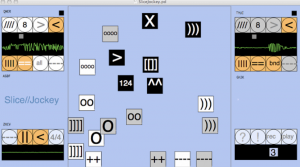

InstantDecomposer

Making music from sounds that are not music.

by Katja Vetter

(InstantDecomposer is an update of SliceJockey2): http://www.katjaas.nl/slicejockey/slicejockey.html

- local: InstantDecomposer version: tkzic/pdweekend2014/IDecTouch/IDecTouch.pd

- local: slicejockey2test2/slicejockey2test2.pd

More about reactive music

- RJDJ apps – create personal soundtracks from the environment

- “Lyrebirds” by Christopher Lopez https://www.youtube.com/watch?v=Ouws45R2iXg

Sensors and sonification

Transforming motion into music

Three approaches

- earcons (email notification sound)

- models (video game sounds)

- parameter mapping (Geiger counter)
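Of the three approaches, parameter mapping is the easiest to show in code. Here is a minimal sketch that scales a list of numbers onto a range of MIDI notes. The function names, the note range, and the sample readings are all invented for illustration and are not taken from any of the projects linked here.

```python
def map_to_midi(values, low_note=48, high_note=84):
    """Linearly map data values onto a MIDI note range."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1  # avoid dividing by zero when all values are equal
    return [round(low_note + (v - lo) / span * (high_note - low_note))
            for v in values]


def midi_to_hz(note):
    """Convert a MIDI note number to frequency (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


readings = [0, 5, 10, 20]  # hypothetical sensor readings
notes = map_to_midi(readings)
print(notes)  # [48, 57, 66, 84]
print([round(midi_to_hz(n), 1) for n in notes])
```

Larger readings become higher pitches. Any other sound parameter (loudness, filter cutoff, tempo) could be driven the same way.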

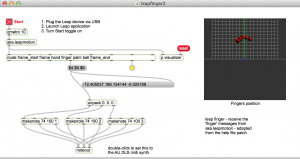

Leap Motion

camera based hand sensor

“Muse” (Boulanger Labs) with Paul Bachelor, Christopher Konopka, Tom Shani, and Chelsea Southard: https://reactivemusic.net/?p=16187

Max/MSP piano example: Leapfinger: https://reactivemusic.net/?p=11727

local file: max-projects/leap-motion/leapfinger2.maxpat

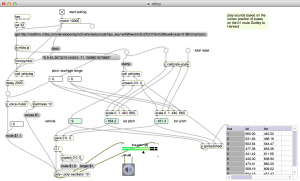

Internet sensors project

Detecting motion from the Internet

https://reactivemusic.net/?p=5859

Twitter streaming example

https://reactivemusic.net/?p=5786

MBTA bus data

Sonification of Mass Ave buses, from Harvard to Dudley

https://reactivemusic.net/?p=17524

Stock market music

https://reactivemusic.net/?p=12029

More sonification projects

Vine API mashup

By Steve Hensley

Using Max/MSP/jitter

local file: tkzic/stevehensely/shensley_maxvine.maxpat

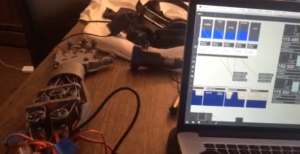

Audio sensing gloves for spacesuits

By Christopher Konopka at future, music, technology

http://futuremusictechnology.com

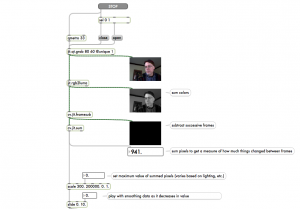

Computer Vision

Sensing motion with video using frame subtraction

by Adam Rokhsar

https://reactivemusic.net/?p=7005

local file: max-projects/frame-subtraction
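Frame subtraction itself is simple: compare each pixel with the same pixel in the previous frame and count how many changed. The sketch below is plain Python on small grayscale grids, not the Max/Jitter patch linked above. The function name and threshold are assumptions made for illustration.

```python
def motion_amount(previous, current, threshold=16):
    """Fraction of pixels whose brightness changed by more than threshold."""
    changed = 0
    total = 0
    for prev_row, cur_row in zip(previous, current):
        for p, c in zip(prev_row, cur_row):
            total += 1
            if abs(c - p) > threshold:
                changed += 1
    return changed / total if total else 0.0


# Two tiny 2x4 grayscale frames (0-255); two pixels light up in frame_b.
frame_a = [[0, 0, 0, 0], [0, 0, 0, 0]]
frame_b = [[0, 200, 200, 0], [0, 0, 0, 0]]
print(motion_amount(frame_a, frame_b))  # 0.25
```

The resulting number can then drive a sound parameter, so more movement in front of the camera produces more change in the music.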

The brain

Music is stored all across the brain.

Mouse brain wiring diagram

The Allen institute

https://reactivemusic.net/?p=17758

“Hacking the soul” by Christof Koch at the Allen institute

(An Explanation of the wiring diagram of the mouse brain – at 13:33) http://www.technologyreview.com/emtech/14/video/watch/christof-koch-hacking-the-soul/

OpenWorm project

A complete simulation of the nematode worm, in software, with a Lego body (302 neurons)

https://reactivemusic.net/?p=17744

AARON

Harold Cohen’s algorithmic painting machine

https://reactivemusic.net/?p=17778

Brain plasticity

A perfect pitch pill? http://www.theverge.com/2014/1/6/5279182/valproate-may-give-humans-perfect-pitch-by-resetting-critical-periods-in-brain

DNA

Could we grow music producing organisms? https://reactivemusic.net/?p=18018

Two possibilities

Rejecting technology?

An optimistic future?

There is a quickening of discovery: internet collaboration, open source, Linux, GitHub, Raspberry Pi, Pd, SDR.

“Robots and AI will help us create more jobs for humans — if we want them. And one of those jobs for us will be to keep inventing new jobs for the AIs and robots to take from us. We think of a new job we want, we do it for a while, then we teach robots how to do it. Then we make up something else.”

“…We invented machines to take x-rays, then we invented x-ray diagnostic technicians which farmers 200 years ago would have not believed could be a job, and now we are giving those jobs to robot AIs.”

Kevin Kelly – January 7, 2015, reddit AMA http://www.reddit.com/r/Futurology/comments/2rohmk/i_am_kevin_kelly_radical_technooptimist_digital/

Will people be marrying robots in 2050? http://www.livescience.com/1951-forecast-sex-marriage-robots-2050.html

“What can you predict about the future of music” by Michael Gonchar at The New York Times https://reactivemusic.net/?p=17023

Jim Morrison predicts the future of music:

More areas to explore

- NIME (New interfaces for musical expression) http://en.wikipedia.org/wiki/New_Interfaces_for_Musical_Expression

- Immersive virtual musical instruments http://en.wikipedia.org/wiki/Immersive_virtual_musical_instrument

- I’m thinking of something: http://imthinkingofsomething.com

Hearing voices

A presentation for Berklee BTOT 2015 http://www.berklee.edu/faculty

(KITT dashboard by Dave Metlesits)

The voice was the first musical instrument. Humans are not the only source of musical voices. Machines have voices. Animals too.

Topics

- synthesizing voices (formant synthesis, text to speech, Vocaloid)

- processing voices (pitch-shifting, time-stretching, vocoding, filtering, harmonizing),

- voices of the natural world

- fictional languages and animals

- accents

- speech and music recognition

- processing voices as pictures

- removing music from speech

- removing voices

Voices

We instantly recognize people and animals by their voices. As artists, we work to develop our own voices. Voices contain information beyond words. Think of R2D2 or Chewbacca.

There is also information between words: “Palin Biden Silences” David Tinapple, 2008: http://vimeo.com/38876967

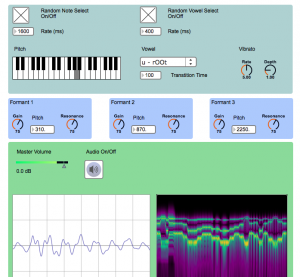

Synthesizing voices

The vocal spectrum

What’s in a voice?

- Formant synthesis in Max by Mark Durham: https://reactivemusic.net/?p=9294 (singing vowels with formants)

- Formant synthesis Tutorial by Jordan Smith: https://reactivemusic.net/?p=9290 (making consonants with noise)

Singing chords

Humans acting like synthesizers.

- Singing chords: Lalah Hathaway https://www.youtube.com/watch?v=c5AdOZtRdfE (0:30)

- Tuvan throat singing: https://www.youtube.com/watch?v=5wHbIWH_NGc (near the end of the video)

- Polyphonic overtone singing: Anna-Maria Hefele https://www.youtube.com/watch?v=vC9Qh709gas

More about formants

- Formants (Wikipedia) http://en.wikipedia.org/wiki/Formant

- Rooms have resonances: “I am sitting in a Room” by Alvin Lucier

- Singer’s formant (2800-3400Hz).

Text to speech

Teaching machines to talk.

- phonemes (unit of sound)

- diphones (combination of phonemes) (Mac OS “Macintalk 3 pro”)

- morphemes (unit of meaning)

- prosody (musical quality of speech)

Methods

- articulatory (anatomical model)

- formant (additive synthesis) (speak and spell)

- concatenative (building blocks) (Mac OS)

Try the ‘say’ command (in Mac OS terminal), for example: say hello

More about text to speech

- History of speech synthesis http://research.spa.aalto.fi/publications/theses/lemmetty_mst/chap5.html (Helsinki University of Technology 1999)

- Speech synthesizers, 2014 https://reactivemusic.net/?p=18141

- Speech synthesis web API https://reactivemusic.net/?p=18138

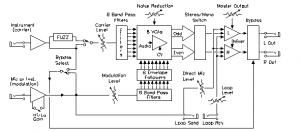

Vocoders

Combining the energy of voice with musical instruments (convolution)

- Peter Frampton “talkbox”: https://www.youtube.com/watch?v=EqYDQPN_nXQ (about 5:42) – Where is the exciting audience noise in this video?

- Ableton Live example: Local file: Max/MSP: examples/effects/classic-vocoder-folder/classic_vocoder.maxpat

- Max vocoder tutorial (In the frequency domain), by dude837 – Sam Tarakajian https://reactivemusic.net/?p=17362 (local file: dude837/4-vocoder/robot-master.maxpat)

More about vocoders

- How vocoders work, by Craig Anderton: https://reactivemusic.net/?p=17218

- Wikipedia: http://en.wikipedia.org/wiki/Vocoder. Engineers conserving information to reduce bandwidth

- Heterodyne filter: https://reactivemusic.net/?p=17338 – digital emulation of an analog filter bank.

- Max/MSP: examples/effects/classic-vocoder-folder/classic_vocoder.maxpat

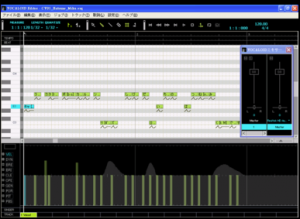

Vocaloid

By Yamaha

(text + notation = singing)

- Vocaloid website: http://www.vocaloid.com/en/

- Hatsune Miku: https://reactivemusic.net/?p=6891

Demo tracks: https://www.youtube.com/watch?v=QWkHypp3kuQ

- Vocaloid tutorial

- #1 https://www.youtube.com/watch?v=vcJDTDBWTrw (entering notes and lyrics – 1:25)

- #2 https://www.youtube.com/watch?v=qpGwgIyMGOk (raw sound – 0:42)

- #5 https://www.youtube.com/watch?v=YEAuL6Q2j-0 (with phrasing, vibrato, etc. – 1:00)

Vocaloop device http://vocaloop.jp/ demo: https://www.youtube.com/watch?v=xLpX2M7I6og#t=24

Processing voices

Transformation

Pitch transposing a baby https://reactivemusic.net/?p=2458

Real time pitch shifting

Autotune: “T-Pain effect” (I-am-T-Pain by Smule), “Lollipop” by Lil Wayne, “Woods” by Bon Iver https://www.youtube.com/watch?v=1_cePGP6lbU

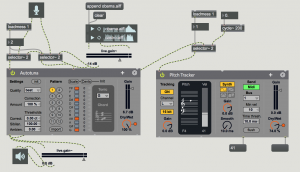

Autotuna in Max 7

by Matthew Davidson

Local file: max-teaching-examples/autotuna-test.maxpat

InstantDecomposer in Pure Data (Pd)

by Katja Vetter

http://www.katjaas.nl/slicejockey/slicejockey.html

Autocorrelation: (helmholtz~ Pd external) “Helmholtz finds the pitch” http://www.katjaas.nl/helmholtz/helmholtz.html

(^^ is input pitch, preset #9 is normal)

- local file: InstantDecomposer version: tkzic/pdweekend2014/IDecTouch/IDecTouch.pd

- local file: slicejockey2test2/slicejockey2test2.pd
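To give a feel for how autocorrelation finds pitch, here is a minimal sketch in plain Python. It is not the helmholtz~ algorithm, which is more refined; it only slides the signal against itself and picks the lag where the two copies line up best. The function name, the frequency limits, and the test tone are assumptions made for illustration.

```python
import math


def estimate_pitch(samples, sample_rate, fmin=80.0, fmax=1000.0):
    """Estimate the fundamental by finding the lag with the highest autocorrelation."""
    min_lag = int(sample_rate / fmax)
    max_lag = int(sample_rate / fmin)
    best_lag, best_score = 0, 0.0
    for lag in range(min_lag, max_lag + 1):
        # Multiply the signal by a copy of itself delayed by `lag` samples.
        score = sum(samples[i] * samples[i + lag]
                    for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag if best_lag else 0.0


# Hypothetical test signal: a 200 Hz sine sampled at 8000 Hz.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 200 * i / sample_rate) for i in range(1024)]
print(round(estimate_pitch(tone, sample_rate), 1))
```

For this tone the estimate lands close to 200 Hz. Real voices are messier, so practical detectors add normalization and interpolation between lags.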

Phasors and Granular synthesis

Disassembling time into very small pieces

- Sorting noise: http://youtu.be/kPRA0W1kECg

- Phasors: https://reactivemusic.net/?p=17353

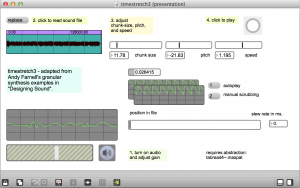

Time-stretching

Adapted from Andy Farnell, “Designing Sound”

https://reactivemusic.net/?p=11385 Download these patches from: https://github.com/tkzic/max-projects folder: granular-timestretch

- Basic granular synthesis: graintest3.maxpat

- Time-stretching: timestretch5.maxpat
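The idea behind granular time-stretching can be sketched in plain Python: output grains are laid down at a steady rate, while the read position in the source advances more slowly (or quickly). This is a simplified overlap-add illustration, not a port of the Max patches or of Andy Farnell's design. The function names and grain size are assumptions.

```python
import math


def hann(n):
    """Periodic Hann window; copies overlapped by 50% sum to 1."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def time_stretch(samples, stretch, grain_size=256):
    """Overlap-add windowed grains, reading the source at 1/stretch speed."""
    hop = grain_size // 2                  # 50% overlap in the output
    window = hann(grain_size)
    out_len = int(len(samples) * stretch)
    out = [0.0] * (out_len + grain_size)
    out_pos = 0
    while out_pos < out_len:
        src_pos = int(out_pos / stretch)   # where this grain starts in the source
        for i in range(grain_size):
            if src_pos + i < len(samples):
                out[out_pos + i] += samples[src_pos + i] * window[i]
        out_pos += hop
    return out[:out_len]


# Hypothetical input: a 440 Hz sine at 8000 Hz, stretched to twice its length.
source = [math.sin(2 * math.pi * 440 * i / 8000) for i in range(1024)]
stretched = time_stretch(source, 2.0)
print(len(source), len(stretched))  # 1024 2048
```

Each grain plays at the original speed, so the pitch stays the same while the duration changes. Neighboring grains do not line up in phase, which is one source of the grainy character of this technique.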

More about phasors and granular synthesis

- Shepard tone upward glissando by Chris Dobrian: https://reactivemusic.net/?p=17255

- “Falling Falling” (Visual Shepard tone) https://reactivemusic.net/?p=17251

- Ableton Live – granulator (Robert Henke)

Phase vocoder

…coming soon

Sonographic sound processing

Changing sound into pictures and back into sound

by Tadej Droljc

https://reactivemusic.net/?p=16887

(Example of 3d speech processing at 4:12)

local file: SSP-dissertation/4 – Max/MSP/Jitter Patch of PV With Spectrogram as a Spectral Data Storage and User Interface/basic_patch.maxpat

Try recording a short passage, then set bound mode to 4, and click autorotate

Speech to text

Understanding the meaning of speech

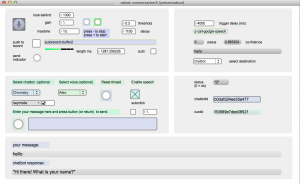

The Google Speech API

A conversation with a robot in Max

https://reactivemusic.net/?p=9834

Google speech uses neural networks, statistics, and large quantities of data.

More about speech to text

- Real time German/English translator (Microsoft) http://digg.com/video/heres-microsoft-demoing-their-breakthrough-in-real-time-translated-conversation

- Skype translator – Spanish/English: http://www.skype.com/en/translator-preview/

- Dragon Naturally Speaking (Nuance) accidentally converts music to poetry

Voices of the natural world

Changes in the environment reflected by sound

- Bernie Krause: “Soundscapes”

Fictional languages and animals

“You can talk to the animals…”

- Derek Abbott’s animal noise page: http://www.eleceng.adelaide.edu.au/Personal/dabbott/animal.html

- Quack project http://www.quack-project.com/table.cgi

- Fictional language dialog by Naila Burney: https://reactivemusic.net/?p=7242

Pig creatures example: http://vimeo.com/64543087

- 0:00 Neutral

- 0:32 Single morphemes – neutral mode

- 0:37 Series, with unifying sounds and breaths

- 1:02 Neutral, layered

- 1:12 Sad

- 1:26 Angry

- 1:44 More Angry

- 2:11 Happy

What about Jar Jar Binks?

Accents

The sound changes but the words remain the same.

The Speech accent archive https://reactivemusic.net/?p=9436

Finding and removing music in speech

We are always singing.

Jamming with speech

- Drummer jams with a speed-talking auctioneer: https://reactivemusic.net/?p=7140

- Guitarist imitates crying politician: http://digg.com/video/guitarist-plays-along-to-sobbing-japanese-politician

Removing music from speech

SMS-tools

by Xavier Serra and UPF

Harmonic Model Plus Residual (HPR) – Build a spectrogram using STFT, then identify where there is strong correlation to a tonal harmonic structure (music). This is the harmonic model of the sound. Subtract it from the original spectrogram to get the residual (noise).

Settings for above example:

- Window size: 1800 (SR / f0 * lobeWidth) 44100 / 200 * 8 = 1764

- FFT size: 2048

- Mag threshold: -90

- Max harmonics: 30

- f0 min: 150

- f0 max: 200
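The subtraction step in the HPR description can be sketched without any libraries. The version below is a heavy simplification and is not how sms-tools does it: the fundamental is supplied rather than detected, a single frame is analyzed instead of an STFT, and the frame is assumed to hold a whole number of periods. The function name and test signal are made up for illustration.

```python
import math
import random


def harmonic_plus_residual(frame, sample_rate, f0, max_harmonics=30):
    """Split one frame into a harmonic part and a residual, given a known f0."""
    n = len(frame)
    harmonic = [0.0] * n
    for k in range(1, max_harmonics + 1):
        freq = k * f0
        if freq >= sample_rate / 2:        # stop at the Nyquist frequency
            break
        w = 2 * math.pi * freq / sample_rate
        # Project the frame onto a cosine and a sine at this harmonic.
        a = 2 / n * sum(x * math.cos(w * i) for i, x in enumerate(frame))
        b = 2 / n * sum(x * math.sin(w * i) for i, x in enumerate(frame))
        for i in range(n):
            harmonic[i] += a * math.cos(w * i) + b * math.sin(w * i)
    residual = [x - h for x, h in zip(frame, harmonic)]
    return harmonic, residual


# Hypothetical frame: two harmonics of 200 Hz plus a little noise (10 periods).
sample_rate, f0 = 8000, 200
rng = random.Random(1)
w0 = 2 * math.pi * f0 / sample_rate
frame = [0.8 * math.sin(w0 * i) + 0.3 * math.sin(2 * w0 * i)
         + rng.uniform(-0.01, 0.01) for i in range(400)]
harmonic, residual = harmonic_plus_residual(frame, sample_rate, f0)
print(max(abs(r) for r in residual))  # small: roughly the added noise
```

The harmonic part holds the tonal content and the residual holds what is left, which here is roughly the added noise. In the real model the fundamental is tracked frame by frame within the f0 min and f0 max limits listed above.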

Feature detection

- time dependent

- Low level features: harmonicity, amplitude, fundamental frequency

- high level features: mood, genre, danceability

Acoustic Brainz: (typical analysis page) https://reactivemusic.net/?p=17641

Essentia (open source feature detection tools) https://github.com/MTG/essentia

Freesound (vast library of sounds): https://www.freesound.org – look at “similar sounds”

Removing voices from music

A sad thought

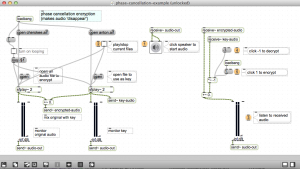

Phase cancellation encryption

This method was used to send secret messages during World War II. It’s now used in cell phones to get rid of echo. It’s also used in noise-canceling headphones.

https://reactivemusic.net/?p=8879

max-projects/phase-cancellation/phase-cancellation-example.maxpat
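The general idea can be sketched in a few lines of Python: a message is buried in noise, and anyone holding an identical copy of the noise can add it back inverted to cancel it. This is an illustration of the principle only and may differ from how the Max patch above works. The signal values and function names are hypothetical.

```python
import random


def mix(a, b):
    """Add two signals sample by sample."""
    return [x + y for x, y in zip(a, b)]


def invert(signal):
    """Flip the phase of a signal by 180 degrees."""
    return [-x for x in signal]


rng = random.Random(0)  # seeded, standing in for a shared copy of the noise
message = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5]  # hypothetical signal
noise = [rng.uniform(-1, 1) for _ in message]

transmitted = mix(message, noise)            # the message is masked by noise
recovered = mix(transmitted, invert(noise))  # inverted noise cancels the mask
print([round(x, 6) for x in recovered])
```

Noise-canceling headphones apply the same inversion, except that the "noise" copy comes from a microphone instead of a shared recording.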

Center channel subtraction

What is not left and not right?

Ableton Live – utility/difference device: https://reactivemusic.net/?p=1498 (Alison Krauss example)

Local file: Ableton-teaching-examples/vocal-eliminator
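In code, center channel subtraction is a single operation: left minus right. Anything mixed equally into both channels (often the lead vocal) cancels out. The sketch below uses made-up sample values and a hypothetical function name, and it is not the Ableton device itself.

```python
def remove_center(left, right):
    """Return left minus right, cancelling anything identical in both channels."""
    return [l - r for l, r in zip(left, right)]


vocal = [0.25, -0.5, 0.75, 0.0]      # hypothetical part panned to the center
guitar = [0.5, 0.25, -0.25, 0.125]   # hypothetical part panned hard left
left = [v + g for v, g in zip(vocal, guitar)]
right = list(vocal)

print(remove_center(left, right))  # [0.5, 0.25, -0.25, 0.125]
```

Only the guitar survives. In real recordings, reverb and stereo effects on the vocal are not identical in both channels, so some of the voice usually remains.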

More experiments

- Synthesizing laughter

- Bobby McFerrin: (pentatonic scale) http://www.ted.com/talks/bobby_mcferrin_hacks_your_brain_with_music.html

- Alphabet vocals

- jii lighter https://reactivemusic.net/?p=6970

- Sesame St – Joan La Barbara: http://www.youtube.com/watch?v=y819U6jBDog

- Warping acapella tracks https://reactivemusic.net/?p=18046

Questions

- Why do most people not like the recorded sound of their voice?

- Can voice be used as a controller?

- (Imitone: http://imitone.com)

- Mari Kimura

- How do you recognize voices?

- Does speech recognition work with singing?

- How does the Google Speech API know the difference between music and speech?

- How can we listen to ultrasonic animal sounds?

- What about animal translators?

Processing shortwave radio sounds

Using the python sms-tools library.

sms-tools: https://github.com/MTG/sms-tools

Here is a song made from the processed sounds:

mp3 version:

This project was an assignment for the Coursera “Audio Signal Processing for Music Applications” course. https://www.coursera.org/course/audio

Source material

Sounds were recorded from a shortwave radio between 5-10MHz.

freesound.org links to the sounds:

- am_interference_7mhz: https://www.freesound.org/people/tkzic/sounds/258095/

- buzz_pulse_7mhz: https://www.freesound.org/people/tkzic/sounds/258096/

- digital_pulse_7mhz: https://www.freesound.org/people/tkzic/sounds/258098/

- cw_7mhz1_small: https://www.freesound.org/people/tkzic/sounds/258097/

- wwv_5mhz_short: https://www.freesound.org/people/tkzic/sounds/258099/

Processing

am_interference_7mhz.wav

The sound is an AM shortwave broadcast station from between 7-8 MHz. It is speech with atmospheric noise and a digitally modulated carrier at 440Hz in the background.

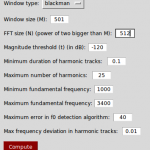

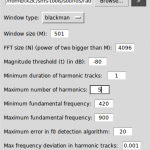

I tried various approaches to removing the speech and isolating the carrier. I ended up using the following parameters, which remove the noise and speech but, for the most part, leave a 440 Hz digital mode signal with large gaps in it.

- M=701

- N=1024

- minf0=400

- maxf0=500

- thresh=-90

- max harmonics=50

After more experimentation, the following changes resulted in a cool continuous tone with a speechlike (but unintelligible) quality and no background noise.

Here is the full list of parameters:

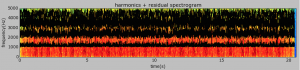

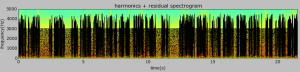

Here is a plot:

Here is the resulting sound of the sinusoidal part of the harmonic model:

buzz_pulse_7mhz.wav

The sound is continuous digital modulation (buzzing) from a shortwave radio between 7-8 MHz. The buzz is around 100Hz with atmospheric background noise.

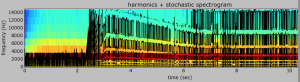

Transformation using HPS (harmonic plus stochastic) model.

Not very impressive analysis, but the resynthesis had a very cool looking spectrogram due to some frequency shifting.

I realized that I had set f0min too high, so I went back to using the HPR model without transformation to see if I could separate the tone. Here is the plot:

Here are the resulting sounds: the transformation (unused) and the sinusoidal/residual results that were used in the track.

digital_pulse_7mhz.wav

A repeating pulse from a shortwave radio between 7-8 MHz. The frequency of the pulse is around 1000Hz with a noise component.

Another noise filter – this was way more difficult due to high-frequency material.

Instead, I went with a downward pitch transform, using the HPS model transform. Here are the resulting sounds from the HPR filter (unused) and the HPS transform.

cw_7mhz1_small.wav

The sound contains typical amateur radio CW signals from the 40 Meter band, with several interfering signals (QRM) and atmospheric noise (QRN). Using the HPR model, I was able to completely isolate and re-synthesize the CW signal, removing all the noise and interfering signals.

Note that you can actually see the Morse code letters “T, U, and W” on the spectrogram of the model!

Here is the re-synthesized CW sound:

wwv_5mhz_short.wav

The WWV National Bureau of Standards “clock” station at 5MHz. A combination of pulses, tones, speech, and background noise.

I was trying to separate the voice from the rest of the tones and noise. After several hours and various approaches, I gave up. The signal may be too complex to separate using these models. There were some interesting plots with the HPR model.

Finally, I decided to just isolate the 440 Hz clock pulse from the rest of the signal:

Here is the resulting sound (note that the tone starts several seconds into the sample)

Christmas tree harvesting with helicopter

By The Northwest Report

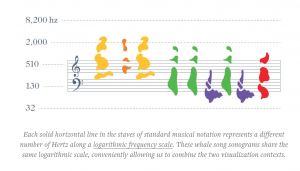

Whale song explained

Synchronization across oceans.

By David Rothenberg and Mike Deal at medium.com