ml4a:

by Gene Kogan

Actually Duplo.

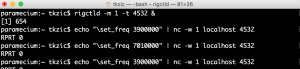

update: 1/25/2021 – Using this general setup with Airspy, CubicSDR, rigctld, and netcat (nc) to send IQ data into the basicSDR3.maxpat patch

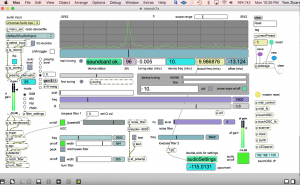

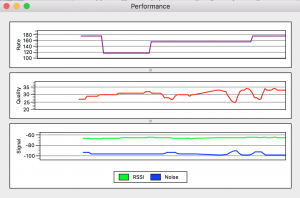

CubicSDR uses the SoapySDR library as a generic tool for extracting real-time IQ data streams from common SDR devices. It also provides external frequency control over TCP using Hamlib.

Although it's not the main purpose of CubicSDR, its IQ streaming capability can connect SDR devices to Max, Pd, and other DSP platforms for building experimental radios, all without writing external objects or hardware device drivers. The convenience of using CubicSDR for this purpose far outweighs the overhead.
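To make "IQ data" concrete: each sample is a pair of numbers, in-phase (I) and quadrature (Q), that together form one complex value. The sketch below assumes the stream arrives as interleaved little-endian 32-bit floats (I, Q, I, Q, ...). That format is an assumption for illustration, not necessarily what CubicSDR or netcat delivers in this setup. It unpacks the bytes into complex samples and takes their magnitude, which is the simplest AM demodulator.

```python
import struct


def unpack_iq(raw: bytes) -> list[complex]:
    """Convert interleaved little-endian float32 I/Q bytes into complex samples.

    ASSUMPTION: the stream is laid out as I, Q, I, Q, ... in 32-bit floats.
    Any trailing partial value or unpaired I is ignored.
    """
    count = len(raw) // 4  # number of whole float32 values in the buffer
    values = struct.unpack(f"<{count}f", raw[: count * 4])
    # Pair even-index values (I) with odd-index values (Q).
    return [complex(i, q) for i, q in zip(values[0::2], values[1::2])]


def am_demodulate(samples: list[complex]) -> list[float]:
    """Envelope detection: the magnitude of each IQ sample is the AM audio."""
    return [abs(s) for s in samples]


if __name__ == "__main__":
    # Two samples, (3, 4) and (0, -2), whose magnitudes are 5 and 2.
    raw = struct.pack("<4f", 3.0, 4.0, 0.0, -2.0)
    print(am_demodulate(unpack_iq(raw)))  # [5.0, 2.0]
```

A Max or Pd patch does the same arithmetic per sample; the point is that once I and Q are available as two signals, demodulation is ordinary DSP.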

How to use CubicSDR as a front-end for SDR experiments in Max.

The signal path for this test is:

Running in the other direction, the frequency control path is:

There's a lot going on here, so I chose hardware audio routing instead of Soundflower, and netcat instead of a TCP object in Max, to keep things simple.

For example, this command asks rigctld, listening on its default port 4532, to tune to 7.010 MHz:

echo 'F 7010000' | nc -w 1 localhost 4532
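The same frequency command can be sent from a script instead of netcat. This sketch opens a TCP connection to rigctld on localhost port 4532, writes the Hamlib `F` (set frequency) command terminated by a newline, and returns rigctld's one-line reply, which is normally `RPRT 0` on success. The helper names `set_freq_command` and `set_frequency` are hypothetical; only the `F <Hz>` command and the port come from the text above.

```python
import socket


def set_freq_command(hz: int) -> bytes:
    """Build a Hamlib rigctl 'set frequency' command, e.g. b'F 7010000\\n'."""
    return f"F {int(hz)}\n".encode("ascii")


def set_frequency(hz: int, host: str = "localhost", port: int = 4532,
                  timeout: float = 1.0) -> str:
    """Send the command to rigctld and return its reply (hypothetical helper).

    The 1-second timeout mirrors the `-w 1` flag in the netcat example.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(set_freq_command(hz))
        reply = sock.recv(1024)  # rigctld answers set commands with 'RPRT <code>'
    return reply.decode("ascii", errors="replace").strip()
```

With rigctld running, `set_frequency(7_010_000)` should have the same effect as the netcat command. Keeping the command builder separate from the socket code makes it easy to reuse from other front ends.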

For this test, you can use any of the MaxSDR tutorials available at https://github.com/tkzic/maxradio, but I chose the main program, currently maxsdr7a.maxpat. The key is to set Max's audio input device to the device that is receiving CubicSDR's audio output. I used a stereo patch cord to connect the line output of my Apollo Twin interface to the input jacks, but you can also use Soundflower.

Installing Hamlib: https://reactivemusic.net/?p=19402

Installing CubicSDR: https://github.com/cjcliffe/CubicSDR/releases

Supported SDR devices: https://reactivemusic.net/?p=19746

I had some success using the Max TCP external described at the Installing Hamlib link above, but temporarily abandoned it due to some latency and dropouts.

Local version of this patch is: tcpClient-small2.maxpat

by Felix Turner

https://www.airtightinteractive.com/2013/10/making-audio-reactive-visuals/

By Christopher Konopka

In the past year, Chris has published nearly 2500 improvised video pieces.

You may be familiar with analog modular audio synthesis. The hardware to produce video looks nearly identical – a maze of patch cords and dials.

Analog video is television. A CRT (cathode ray tube) resynthesizes video information by demodulating signals from a camera. Vintage televisions have dials to adjust color and vertical sync. When you turn the dials you are synthesizing analog video. Distortion, filtering, and feedback – either at the source (camera) or the destination (tv screen) – offer up an infinite variety of images.

Today all media is digital, like the screen you are looking at. The difference with analog is in how it's produced. Boundaries are less definite. Lines curve. Colors waver. Feedback looks like flames. Every frame is a painting.

https://vimeo.com/172035463

Images can be generated electronically using modules – without a camera.

As with audio sampling, anything is a source: movies, YouTube, live television, even Felix the Cat.

https://vimeopro.com/cskonopka/analogvideo-december-2015/video/153312961

When you aim a guitar at an amplifier, it screams. Tilt it away slightly and the screaming subsides. In between there's a sweet spot. The same is true with cameras and screens. Feedback results when output is mixed back into the input.
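The sweet spot can be illustrated with a deliberately simplified model, an assumption rather than a description of any real video hardware: each frame is the new input plus the previous frame scaled by a loop gain. With a gain below 1, the loop settles at input / (1 - gain). At a gain of 1 or more it keeps growing, which is the "screaming" case.

```python
def feedback_loop(source: float, gain: float, frames: int) -> list[float]:
    """Simplified feedback: each output frame = source + gain * previous output.

    ASSUMPTION: a single brightness value stands in for a whole video frame.
    """
    output = 0.0
    history = []
    for _ in range(frames):
        output = source + gain * output  # mix the previous output back into the input
        history.append(output)
    return history


if __name__ == "__main__":
    # Below unity gain the loop settles near source / (1 - gain) = 1 / 0.5 = 2.
    print(round(feedback_loop(1.0, 0.5, 50)[-1], 6))  # 2.0
    # Above unity gain the level keeps climbing.
    print(feedback_loop(1.0, 1.5, 5))  # [1.0, 2.5, 4.75, 8.125, 13.1875]
```

Real camera-and-screen feedback also shifts, scales, and rotates the image on every pass, which is where the flame-like patterns come from; this model only captures the gain.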

https://vimeopro.com/cskonopka/analogvideo-december-2015/video/153306760

Analog shortwave radio signals are distorted by the atmosphere in a manner similar to video filtering.

A studio in Bethel, Maine.

An improvised collaboration between Chris and Tom Zicarelli using shortwave radio processed with audio effects.

https://www.instagram.com/p/BImQwOGBveV/?taken-by=cskonopka

A recent screen test at the Gem Theatre in Bethel, Maine. The source material is a time-lapse film of a glacier installation, produced at the same theatre by Wade Kavanaugh and Steven Nguyen (https://www.youtube.com/watch?v=6c36Y-Dcj30). The film was re-synthesized using analog video and feedback. Soundtrack by Tom Zicarelli.

https://www.instagram.com/p/BImRSzHBOLL/?taken-by=cskonopka

Big screen equals mind-bending experience.

Note: previous clip excerpted from this 15 minute jam: https://vimeo.com/177843310

The patterns in this clip appear to be three dimensional. They are not.

From a show that happened somewhere in the known universe:

Improvised analog video with the band “Alto”. Patterns reminiscent of magical textiles.

By Martin Treiber

More information from Apple: https://support.apple.com/en-us/HT202663

“The superformula is a generalization of the superellipse and was first proposed by Johan Gielis in 2003”

from Wikipedia

http://mysterydate.github.io/superFormulaGenerator/

Some superformula samples
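For reference, the superformula gives a radius r for each angle φ: r(φ) = (|cos(mφ/4)/a|^n2 + |sin(mφ/4)/b|^n3)^(-1/n1). The sketch below samples it into (x, y) points for plotting. The parameter names follow the usual Wikipedia notation; the function names and the 360-step default are illustrative choices, not taken from the generator linked above.

```python
import math


def superformula_radius(phi: float, m: float, n1: float, n2: float, n3: float,
                        a: float = 1.0, b: float = 1.0) -> float:
    """Gielis superformula: radius at angle phi (radians)."""
    t1 = abs(math.cos(m * phi / 4) / a) ** n2
    t2 = abs(math.sin(m * phi / 4) / b) ** n3
    return (t1 + t2) ** (-1.0 / n1)


def superformula_points(m: float, n1: float, n2: float, n3: float,
                        a: float = 1.0, b: float = 1.0,
                        steps: int = 360) -> list[tuple[float, float]]:
    """Sample the closed curve as (x, y) points, one per angle step."""
    points = []
    for k in range(steps):
        phi = 2 * math.pi * k / steps
        r = superformula_radius(phi, m, n1, n2, n3, a, b)
        points.append((r * math.cos(phi), r * math.sin(phi)))
    return points
```

With m = 0, or with m = 4 and n1 = n2 = n3 = 2 (and a = b = 1), every radius is 1 and the points trace a unit circle, which makes a quick sanity check before experimenting with other values.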

By Cade Metz at wired.com

http://www.wired.com/2015/11/google-open-sources-its-artificial-intelligence-engine/?mbid=social_fb