notes

Today I’m attempting to update audiograph to run under the current iOS/Xcode releases. Here are some fixes for the compilation errors and warnings that came up…

(update) Had problems with git, because I forgot to pull Michael Tyson’s changes down from GitHub before I changed the files locally and committed them.

Ended up doing a wholesale copy and a lot of duplicated effort – anyway it seems to work now.

Very important: The current local version of audiograph is in tkzic/coreaudio/audiograph

I have also submitted a new version, 1.1, to the App Store. It’s in the same folder I just mentioned.

runtime view controller error:

<code>A view can only be associated with at most one view controller at a time!</code>

group table view default color warning:

deprecated AVAudioSession methods (iOS 6.0)

I commented these out and added the updated methods.

see this link for setDelegate
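As a rough sketch of what that kind of replacement can look like (not the actual audiograph code): in iOS 6 the session delegate gives way to an interruption notification, and the "hardware" sample rate accessors get shorter names. The helper name `ConfigureAudioSession`, its parameters, and the 44100 Hz rate are all made up for illustration, and I'm assuming these are the sorts of calls that were flagged.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper for illustration only; the name, the parameters, and
// the 44100 Hz rate are assumptions, not taken from audiograph.
static void ConfigureAudioSession(id observer, SEL interruptionHandler) {
    AVAudioSession *session = [AVAudioSession sharedInstance];
    NSError *error = nil;

    // Was: [session setDelegate:observer];   (deprecated in iOS 6.0)
    // Now: observe the interruption notification instead of using a delegate.
    [[NSNotificationCenter defaultCenter] addObserver:observer
                                             selector:interruptionHandler
                                                 name:AVAudioSessionInterruptionNotification
                                               object:session];

    // Was: [session setPreferredHardwareSampleRate:44100.0 error:&error];
    if (![session setPreferredSampleRate:44100.0 error:&error]) {
        NSLog(@"setPreferredSampleRate failed: %@", error);
    }

    if (![session setActive:YES error:&error]) {
        NSLog(@"setActive failed: %@", error);
    }

    // Was: session.currentHardwareSampleRate
    NSLog(@"sample rate: %f", session.sampleRate);
}
```

The handler passed in as `interruptionHandler` takes an `NSNotification *`; its `userInfo` carries `AVAudioSessionInterruptionTypeKey`, which says whether the interruption began or ended.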

miscellaneous

see Apple docs for everything else

The ‘play’ button on the bottom toolbar didn’t work on the 5th-generation iPod touch (with the taller screen)

Here’s what fixed it (in applicationDidFinishLaunching…)

from this stack overflow post: http://stackoverflow.com/questions/12395200/how-to-develop-or-migrate-apps-for-iphone-5-screen-resolution

The only really required thing to do is to add a launch image named “Default-568h@2x.png” to the app resources, and in general case (if you’re lucky enough) the app will work correctly. In case the app does not handle touch events, then make sure that the key window has the proper size. The workaround is to set the proper frame: <code>[window setFrame:[[UIScreen mainScreen] bounds]]</code> There are other issues not related to screen size when migrating to iOS 6. Read iOS 6.0 Release Notes for details.
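For context, here is roughly where that line sits in an app delegate. This is a minimal sketch: the class name `AppDelegate` and the `window` outlet are placeholders, and audiograph's real delegate may be laid out differently.

```objc
#import <UIKit/UIKit.h>

// Hypothetical app delegate for illustration; the class name and the
// window outlet are assumptions, not audiograph's actual declarations.
@interface AppDelegate : NSObject <UIApplicationDelegate>
@property (nonatomic, retain) IBOutlet UIWindow *window;
@end

@implementation AppDelegate

- (void)applicationDidFinishLaunching:(UIApplication *)application {
    // A window loaded from an older nib can keep its original 3.5-inch frame.
    // On the taller screen, touches near the bottom then land outside the
    // window and are never delivered, so resize the window to the screen.
    [self.window setFrame:[[UIScreen mainScreen] bounds]];
    [self.window makeKeyAndVisible];
}

@end
```

That would explain why only the bottom toolbar was affected: it sits in the strip of screen the old window frame didn't cover.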

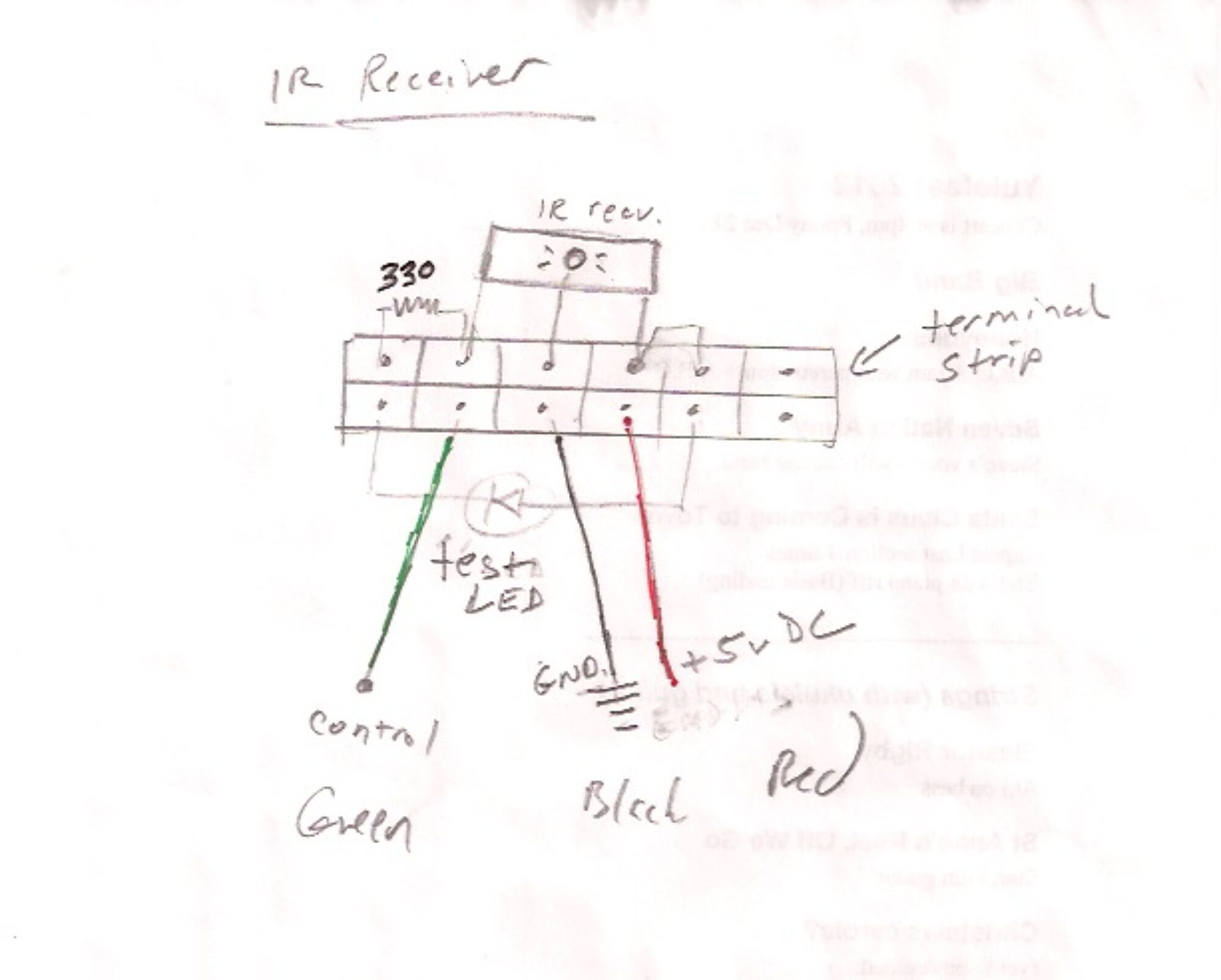

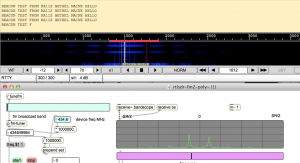

Connected via some resistors to set the level and bias. This means that the low/high digital outputs from the Pi result in 2 slightly different voltages at the NTX2, which then transmits two frequencies approx 600Hz apart.

This won’t work for APRS. For that you need an analog output, or PWM.