Frequency Domain

Transforming signals

- Discrete Fourier transform (DFT), fast Fourier transform (FFT), short-time Fourier transform (STFT)

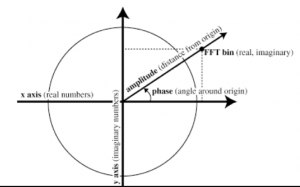

- The FFT produces a stream of complex numbers, one per frequency bin, each representing the amplitude and phase of the signal at that bin’s frequency

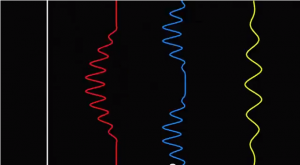

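- The length of the FFT determines the frequency resolution: an N-point FFT has N bins spaced (sample rate / N) Hz apart

- Increasing the length of the FFT frame improves frequency resolution but degrades resolution in the time domain (rhythmic accuracy), because each frame spans more samples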

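- Amplitude (magnitude) = sqrt(r*r + i*i), where r and i are the real and imaginary parts of a bin

- Phase (angle) = atan2(i, r), i.e. the arctangent of i/r with the quadrant taken from the signs of i and r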

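- For a sine wave you can derive frequency from phase: the frequency is the rate at which the phase advances from one frame to the next

- For any signal you can approximate the frequency in each bin from the amplitude and phase values in successive FFT frames. See http://www.dspdimension.com/admin/pitch-shifting-using-the-ft/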
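
The magnitude, phase, and resolution points above can be tried out in a few lines. The following is only a minimal sketch, not taken from the class patches: it uses a naive DFT written with the Python standard library (an FFT computes the same values faster), and the names `dft`, `magnitude`, `phase`, `bin_width_hz`, and `frame_duration_s`, as well as the 8000 Hz sample rate and 1000 Hz test tone, are assumptions chosen for illustration.

```python
import cmath
import math


def dft(frame):
    """Naive O(N^2) DFT of a list of real samples (illustrative helper)."""
    n = len(frame)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))
        for k in range(n)
    ]


def magnitude(c):
    """Amplitude of one bin: sqrt(r*r + i*i)."""
    return math.sqrt(c.real * c.real + c.imag * c.imag)


def phase(c):
    """Phase angle of one bin in radians; atan2 keeps the correct quadrant."""
    return math.atan2(c.imag, c.real)


def bin_width_hz(sample_rate, n):
    """Spacing between bins: longer frames give finer frequency resolution."""
    return sample_rate / n


def frame_duration_s(sample_rate, n):
    """Time spanned by one frame: longer frames give coarser time resolution."""
    return n / sample_rate


SAMPLE_RATE = 8000.0  # assumed sample rate for this sketch
N = 64                # frame length, so bins are 8000 / 64 = 125 Hz apart
TONE_HZ = 1000.0      # assumed test tone, chosen to sit exactly on a bin centre

frame = [math.cos(2 * math.pi * TONE_HZ * t / SAMPLE_RATE) for t in range(N)]
spectrum = dft(frame)

# Only the first N/2 bins are needed for a real input signal.
mags = [magnitude(c) for c in spectrum[: N // 2]]
peak_bin = max(range(N // 2), key=lambda k: mags[k])
peak_hz = peak_bin * bin_width_hz(SAMPLE_RATE, N)

print("peak bin:", peak_bin, "frequency:", peak_hz, "Hz")
print("phase at peak:", phase(spectrum[peak_bin]), "radians")
for length in (64, 256):
    print(length, "samples:", bin_width_hz(SAMPLE_RATE, length), "Hz per bin,",
          frame_duration_s(SAMPLE_RATE, length), "s per frame")
```

The final loop shows the trade-off directly: quadrupling the frame length makes the bins four times narrower but makes each frame four times longer in time.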

Practical Applications

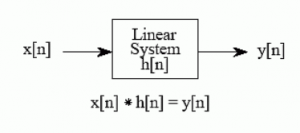

- Convolution/Deconvolution

- Analysis

- Spectral processing (pitch and timbre)

- Amplitude processing: noise gates, crossovers

- Phase vocoder

- Radio
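
The phase vocoder relies on the idea noted earlier that frequency can be approximated from phase. A rough sketch of that estimate, under stated assumptions, follows: it compares the phase of one bin in two overlapping Hann-windowed frames and converts the deviation from the expected phase advance into a frequency offset. The function names (`windowed_bin`, `wrap_phase`, `estimate_frequency`), the frame and hop sizes, and the 440 Hz test tone are all hypothetical choices for illustration, not part of any Max/MSP patch listed here.

```python
import cmath
import math


def windowed_bin(frame, k):
    """Value of DFT bin k for one Hann-windowed frame (illustrative helper)."""
    n = len(frame)
    total = 0j
    for t, x in enumerate(frame):
        hann = 0.5 - 0.5 * math.cos(2 * math.pi * t / n)
        total += x * hann * cmath.exp(-2j * math.pi * k * t / n)
    return total


def wrap_phase(p):
    """Wrap an angle in radians into the range [-pi, pi)."""
    return (p + math.pi) % (2 * math.pi) - math.pi


def estimate_frequency(signal, sample_rate, n, hop, k):
    """Estimate the frequency in bin k from the phase change across one hop."""
    first = windowed_bin(signal[:n], k)
    second = windowed_bin(signal[hop:hop + n], k)
    # Phase advance a sinusoid exactly at the bin centre would show over one hop.
    expected = 2 * math.pi * k * hop / n
    # How far the measured advance deviates from that, wrapped to +/- pi.
    deviation = wrap_phase(cmath.phase(second) - cmath.phase(first) - expected)
    # Convert the deviation to a fractional bin offset, then to Hz.
    return (k + deviation * n / (2 * math.pi * hop)) * sample_rate / n


SAMPLE_RATE = 8000.0  # assumed sample rate
N = 256               # frame length (bins are 31.25 Hz apart)
HOP = 64              # hop between the two frames (75% overlap)
TONE_HZ = 440.0       # assumed test tone, deliberately between bin centres

signal = [math.sin(2 * math.pi * TONE_HZ * t / SAMPLE_RATE) for t in range(N + HOP)]

k = round(TONE_HZ * N / SAMPLE_RATE)  # nearest bin to the tone
coarse_hz = k * SAMPLE_RATE / N       # bin-centre frequency only
refined_hz = estimate_frequency(signal, SAMPLE_RATE, N, HOP, k)

print("bin centre:", coarse_hz, "Hz")
print("phase-based estimate:", refined_hz, "Hz")
```

The bin centre alone is only accurate to within half a bin width, while the phase-based estimate should land much closer to the true tone. The estimate is only unambiguous while the deviation stays within plus or minus pi, which is one reason overlapping frames (small hops) are used.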

Examples

- Max/MSP tutorials 25-26

- Max/MSP Example DSP patches (in Extras | ExamplesOverview | MSP | FFT fun)

- convolution workshop

- Forbidden planet

- Fourier Filter (Vetter)

- fft-tz2 (basics, SSB ring modulator)

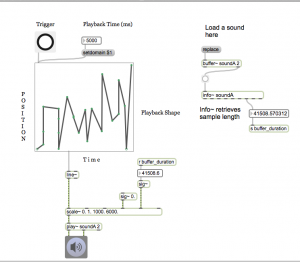

- fplanet-tz.maxpat: hacked version of the forbidden-planet example which uses granular indexing to do spectral convolution and make spaceship sounds. To use the patch: 1) turn on audio, 2) press the message boxes inside the green panel

- fp-fft-tz.maxpat: pfft~ subpatch for above

- fourierfilter (folder) containing fourierfiltertest.maxpat: Katja Vetter’s complex spectral filter example

- Tristan Jehan’s frequency detector object

- Little Tikes piano: https://reactivemusic.net/?p=6993

- Helicopter frame rate video: http://www.youtube.com/watch?v=jQDjJRYmeWg

- Katja Vetter, “Sinusoids, Complex Numbers, and Modulation” http://www.katjaas.nl/home/home.html

- Reference: http://www.dspguide.com “The Scientist and Engineer’s Guide to DSP” by Steven Smith, chapters 8-9

- DSP Dimension

- “The Phase Vocoder”, Richard Dudas and Cort Lippe, http://cycling74.com/2006/11/02/the-phase-vocoder-–-part-i/

Assignments

See notes from previous weeks: https://reactivemusic.net/?p=10109